Collaborative Self-Attention for Recommender Systems

Paper and Code

May 27, 2019

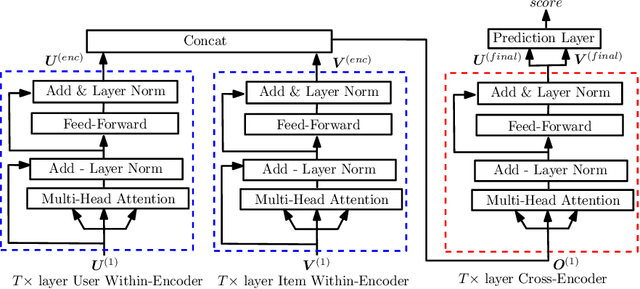

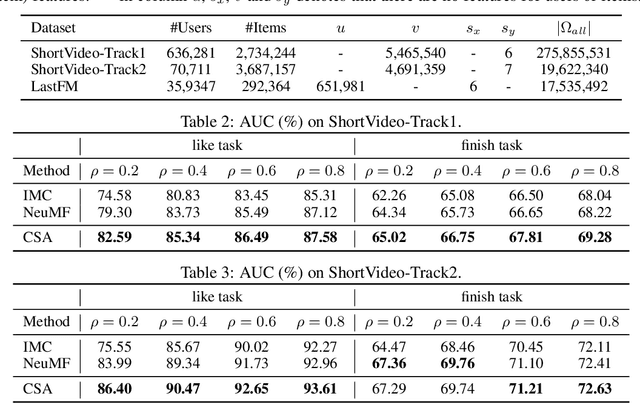

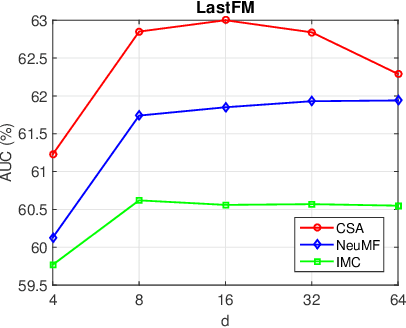

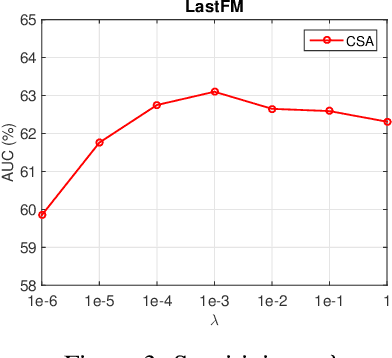

Recommender systems (RS), which have become an essential part of a wide range of applications, can be formulated as a matrix completion (MC) problem. To boost the performance of MC, matrix completion with side information, called inductive matrix completion (IMC), was further proposed. In real applications, the factorized version of IMC is favored because it is efficient to optimize and implement. In its factorized form, the traditional IMC method can be interpreted as learning an individual representation for each feature, with the representations of different features being independent of one another. Moreover, the representation of a given feature is shared across all users/items. However, this independence between features, together with the sharing of each feature's representation across all users/items, may limit the expressiveness of the model. The limitation also exists in variants of IMC, such as deep learning based IMC models. To overcome this limitation, we generalize recent advances in self-attention mechanisms to IMC and propose a context-aware model called collaborative self-attention (CSA), which jointly learns context-aware representations for features and performs inductive matrix completion. Extensive experiments on three large-scale datasets from real RS applications demonstrate the effectiveness of CSA.
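To make the contrast in the abstract concrete, the following is a minimal NumPy sketch, not the authors' CSA model. It assumes the standard factorized IMC score `x^T W H^T y`, where each row of `W` (or `H`) is the representation of one user (or item) feature, and it uses plain scaled dot-product self-attention without learned projections as a stand-in for "context-aware" representations. All names, sizes, and the helper functions `imc_score`, `self_attend`, and `context_aware_score` are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes (assumptions): number of user/item features and latent dimension.
d_user, d_item, k = 6, 5, 4
W = rng.normal(size=(d_user, k))  # one k-dim representation per user feature
H = rng.normal(size=(d_item, k))  # one k-dim representation per item feature


def imc_score(x, y):
    """Factorized IMC prediction x^T W H^T y.

    Each feature contributes its own fixed row of W or H, independent of
    which other features are present and identical for every user/item.
    """
    return float((x @ W) @ (H.T @ y))


def self_attend(E):
    """Scaled dot-product self-attention over the rows of E (no learned projections)."""
    logits = E @ E.T / np.sqrt(E.shape[1])
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ E


def context_aware_score(x, y):
    """Same bilinear score, but each active feature's vector is first mixed
    with the vectors of the other active features of the same user/item."""
    u_idx, i_idx = np.flatnonzero(x), np.flatnonzero(y)
    U = self_attend(W[u_idx]) * x[u_idx, None]
    V = self_attend(H[i_idx]) * y[i_idx, None]
    return float(U.sum(axis=0) @ V.sum(axis=0))


# A user with features 0, 2, 5 active and an item with features 1, 2 active.
x = np.array([1.0, 0.0, 1.0, 0.0, 0.0, 1.0])
y = np.array([0.0, 1.0, 1.0, 0.0, 0.0])
print(imc_score(x, y), context_aware_score(x, y))
```

In this sketch, a feature's effective representation under `context_aware_score` depends on which other features co-occur with it, whereas under `imc_score` it is always the same row of `W` or `H`. When only one feature is active, the two scores coincide, since attention over a single vector returns that vector unchanged.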
