Collaborative Multidisciplinary Design Optimization with Neural Networks


Jun 11, 2021

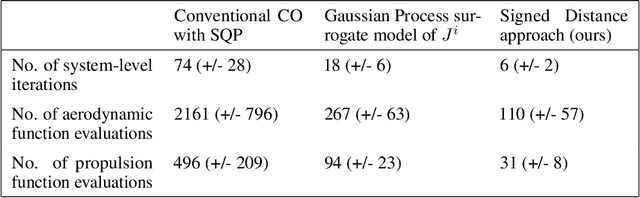

The design of complex engineering systems requires solving very large optimization problems that span several disciplines. Strategies that split the problem into smaller parts and let disciplines optimize in parallel against sub-objectives, such as Collaborative Optimization, are promising solutions. However, most of them converge slowly, which limits their practical use. Earlier efforts to accelerate convergence by learning surrogate models have not yet made these strategies sufficiently competitive. This paper shows that, in the case of Collaborative Optimization, faster and more reliable convergence can be obtained by solving an interesting instance of binary classification: in addition to the target label, the training data of one of the two classes contains the distance to the decision boundary and its derivative. Leveraging this information, we propose to train a neural network with an asymmetric loss function, a structure that guarantees Lipschitz continuity, and a regularization that encourages the network to respect basic distance function properties. The approach is demonstrated on a toy learning example and then applied to a multidisciplinary aircraft design problem.
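As a rough illustration of two ingredients named in the abstract, the Python sketch below shows one possible asymmetric loss and one common way to bound a layer's Lipschitz constant. It is not the paper's implementation. The names `asymmetric_loss` and `spectral_normalize`, the convention that class 1 is the class carrying the distance, the squared one-sided penalty, and the use of spectral normalization are all assumptions made for illustration. The derivative information and the distance-property regularization mentioned in the abstract are left out.

```python
import numpy as np


def asymmetric_loss(pred, label, dist):
    """Hypothetical asymmetric loss for a distance-like classifier.

    pred  : network outputs, read as estimates of the distance to the boundary
    label : 1 where the true distance is known, 0 where only the label is known
    dist  : true distances (ignored wherever label == 0)
    """
    pred = np.asarray(pred, dtype=float)
    label = np.asarray(label)
    dist = np.asarray(dist, dtype=float)
    has_distance = label == 1

    # Class with distance information: ordinary squared regression error.
    regression = np.where(has_distance, (pred - dist) ** 2, 0.0)

    # Class with label only: penalize only predictions on the wrong side (> 0).
    one_sided = np.where(~has_distance, np.maximum(pred, 0.0) ** 2, 0.0)

    return float(np.mean(regression + one_sided))


def spectral_normalize(weight):
    """Rescale a weight matrix so its spectral norm is at most 1.

    Stacking such layers with 1-Lipschitz activations (e.g. ReLU) gives a
    1-Lipschitz network; this is one standard construction, assumed here.
    """
    sigma = np.linalg.norm(weight, 2)  # largest singular value
    return weight / max(sigma, 1.0)


if __name__ == "__main__":
    # Correct-side prediction for the label-only point costs nothing.
    print(asymmetric_loss([0.5, -1.0], [1, 0], [0.5, 0.0]))
    # Wrong-side prediction for the label-only point is penalized.
    print(asymmetric_loss([0.5, 2.0], [1, 0], [0.5, 0.0]))
```

The asymmetry comes from treating the two classes differently: points with a known distance are fitted by regression, while points with only a label are penalized only when the prediction falls on the wrong side of zero.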
