CNNs are Myopic


Jun 01, 2022

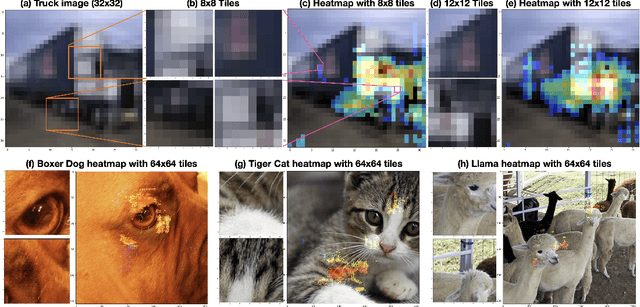

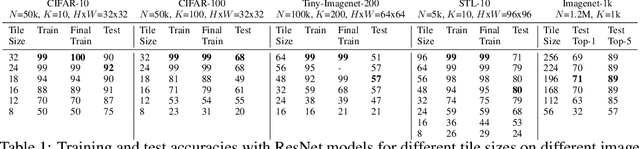

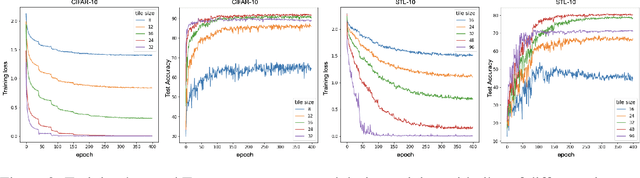

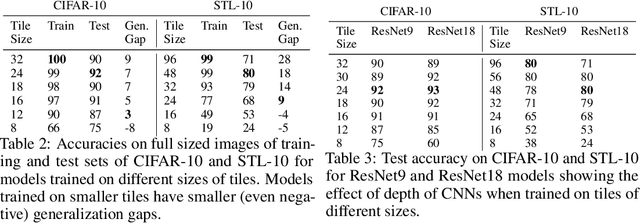

We claim that Convolutional Neural Networks (CNNs) learn to classify images using only small, seemingly unrecognizable tiles. We show experimentally that CNNs trained only on such tiles can match or even surpass the performance of CNNs trained on full images. Conversely, CNNs trained on full images make similar predictions on small tiles. We also propose the first a priori theoretical model for convolutional data sets that seems to explain this behavior. This gives additional support to the long-standing suspicion that CNNs do not need to understand the global structure of images to achieve state-of-the-art accuracies. Surprisingly, it also suggests that overfitting is not needed either.
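The tile-based setup described above can be sketched roughly as follows, assuming images stored as NumPy arrays of shape (N, H, W, C). The helper names (`random_tiles`, `all_tiles`, `predict_from_tiles`), the uniform random crop, the sliding-window stride, and averaging class scores over tiles (similar in spirit to the patch-level evidence averaging used by BagNets) are illustrative assumptions, not the paper's code. The abstract does not state the tile size or how tile predictions are combined.

```python
import numpy as np

# Hypothetical helpers illustrating tile-based training and evaluation;
# this is NOT the paper's implementation.

def random_tiles(images, labels, tile_size, rng):
    """Crop one random tile_size x tile_size tile from each image.

    images: array of shape (N, H, W, C); labels: array of shape (N,).
    Each tile inherits the label of the full image it was cut from.
    """
    n, h, w, _ = images.shape
    if tile_size > h or tile_size > w:
        raise ValueError("tile_size must not exceed image height or width")
    # Top-left corner of each crop, drawn uniformly (upper bound exclusive).
    ys = rng.integers(0, h - tile_size + 1, size=n)
    xs = rng.integers(0, w - tile_size + 1, size=n)
    tiles = np.stack([
        img[y:y + tile_size, x:x + tile_size]
        for img, y, x in zip(images, ys, xs)
    ])
    return tiles, labels.copy()


def all_tiles(image, tile_size, stride):
    """Slide a tile_size window over one (H, W, C) image with the given stride."""
    h, w, _ = image.shape
    return np.stack([
        image[y:y + tile_size, x:x + tile_size]
        for y in range(0, h - tile_size + 1, stride)
        for x in range(0, w - tile_size + 1, stride)
    ])


def predict_from_tiles(predict_fn, image, tile_size, stride):
    """Classify a full image by averaging class scores over its tiles.

    predict_fn (assumed) maps a batch of tiles (T, k, k, C) to scores
    of shape (T, num_classes), e.g. a CNN trained only on tiles.
    """
    scores = predict_fn(all_tiles(image, tile_size, stride))
    return int(np.argmax(scores.mean(axis=0)))
```

For example, cutting 8x8 tiles from a batch of random 32x32 RGB "images":

```python
rng = np.random.default_rng(0)
images = rng.random((8, 32, 32, 3))
labels = np.arange(8)
tiles, tile_labels = random_tiles(images, labels, tile_size=8, rng=rng)
print(tiles.shape)  # (8, 8, 8, 3)
```

A tile classifier would then be trained on `(tiles, tile_labels)` exactly as a full-image classifier is trained on `(images, labels)`, which is what makes the two directly comparable.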

* Added a reference to, and comparison with, BagNets in the related work section
