Clinical Language Understanding Evaluation (CLUE)


Sep 28, 2022

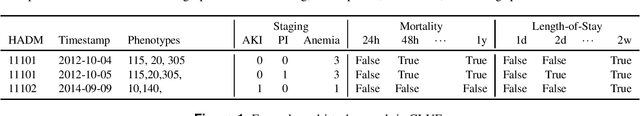

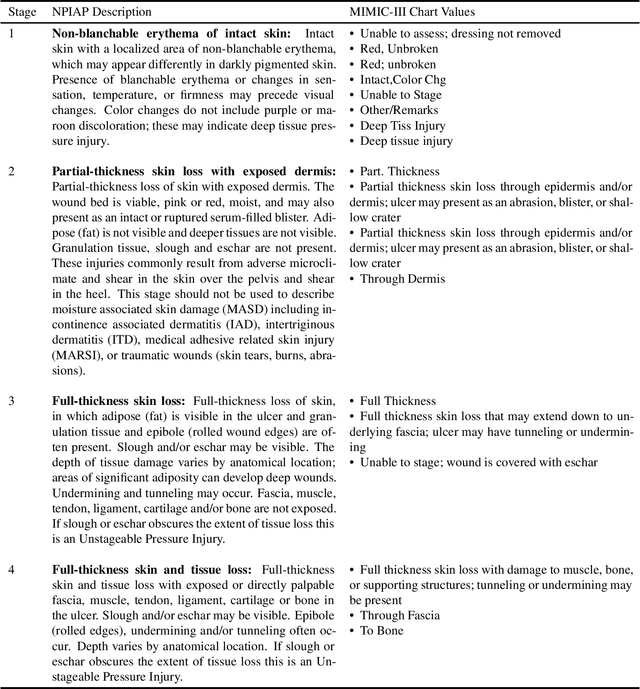

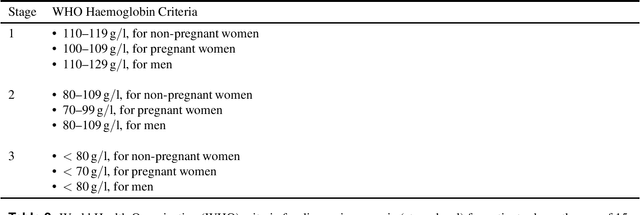

Clinical language processing has received considerable attention in recent years, resulting in new models or methods for disease phenotyping, mortality prediction, and other tasks. Unfortunately, many of these approaches are tested under different experimental settings (e.g., data sources, training and testing splits, metrics, and evaluation criteria), making it difficult to compare approaches and determine the state of the art. To address these issues and facilitate reproducibility and comparison, we present the Clinical Language Understanding Evaluation (CLUE) benchmark, which comprises a set of four clinical language understanding tasks; standard training, development, validation, and testing sets derived from MIMIC data; and a software toolkit. It is our hope that these data will enable direct comparison between approaches, improve reproducibility, and reduce the barrier to entry for developing novel models or methods for these clinical language understanding tasks.
