Classifying Images with Few Spikes per Neuron

Jan 31, 2020

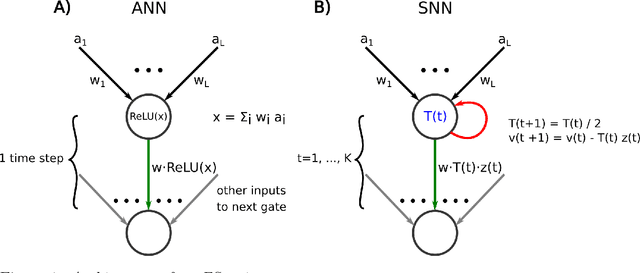

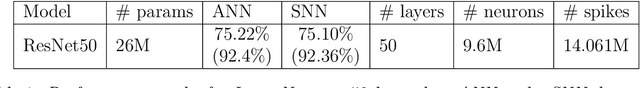

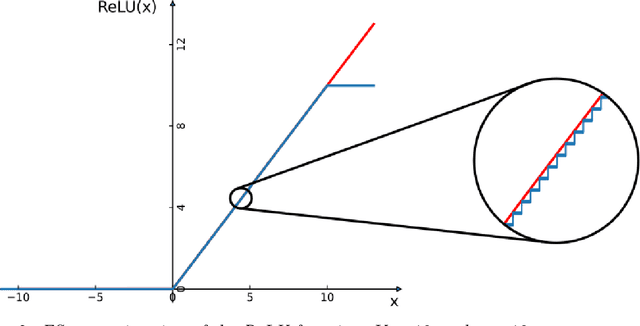

Spiking neural networks (SNNs) promise AI implementations with a drastically reduced energy budget compared with standard artificial neural networks (ANNs). Besides recurrent SNN modules that can be efficiently trained on-chip, many AI applications require feedforward convolutional neural networks (CNNs) as preprocessors for visual or other sensory inputs. The standard solution has been to train a CNN consisting of non-spiking neurons, typically with the rectified linear unit (ReLU) as the activation function, and then to translate this CNN via rate coding into an SNN. However, this produces SNNs with long latency and low throughput, since the number of spikes that a neuron has to emit is on the order of N, the number of output values of the corresponding CNN gate that subsequent layers need to be able to distinguish. We introduce a new ANN-SNN conversion, called FS-conversion, that needs only log N time steps for this, which is optimal from the perspective of information theory. This can be achieved with a simple variation of the spiking neuron model that has no membrane leak but an exponentially decreasing firing threshold. We show that for the classification of images from ImageNet and CIFAR10, this new conversion reduces latency and drastically increases throughput compared with rate-based conversion, while achieving almost the same classification performance as the ANN.
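The log N claim can be illustrated with a minimal sketch of a neuron that has no membrane leak and a firing threshold that halves at every time step. This is an illustration under stated assumptions, not necessarily the authors' exact neuron model: the function names `fs_encode` and `fs_decode`, the base-2 threshold schedule `x_max * 2**-t`, and the choice to reuse the threshold as both the reset amount and the output spike weight are all assumptions made here for clarity.

```python
import math


def fs_encode(x, num_steps, x_max=1.0):
    """Emit a spike train of length num_steps for an activation x.

    Assumed model: no leak, threshold x_max * 2**-t at step t, and the
    membrane potential is reduced by the threshold after each spike.
    """
    v = max(x, 0.0)  # negative inputs never spike, mimicking ReLU
    spikes = []
    for t in range(1, num_steps + 1):
        threshold = x_max * 2.0 ** (-t)  # exponentially decreasing threshold
        if v >= threshold:
            spikes.append(1)
            v -= threshold  # subtractive reset, no leak
        else:
            spikes.append(0)
    return spikes


def fs_decode(spikes, x_max=1.0):
    """Weight a spike at step t by x_max * 2**-t and sum the contributions."""
    return sum(s * x_max * 2.0 ** (-t) for t, s in enumerate(spikes, start=1))


def steps_needed(num_levels):
    """Time steps needed to distinguish num_levels output values."""
    return math.ceil(math.log2(num_levels))


if __name__ == "__main__":
    train = fs_encode(0.625, num_steps=4)
    print(train)             # [1, 0, 1, 0]
    print(fs_decode(train))  # 0.625
    print(steps_needed(256)) # 8
```

Under these assumptions, K time steps distinguish 2^K activation levels, whereas a rate code that represents a value by its spike count needs on the order of 2^K steps for the same resolution. Inputs at or above `x_max` saturate at `x_max * (1 - 2**-K)` in this sketch.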
