Class Introspection: A Novel Technique for Detecting Unlabeled Subclasses by Leveraging Classifier Explainability Methods

Paper and Code

Jul 04, 2021

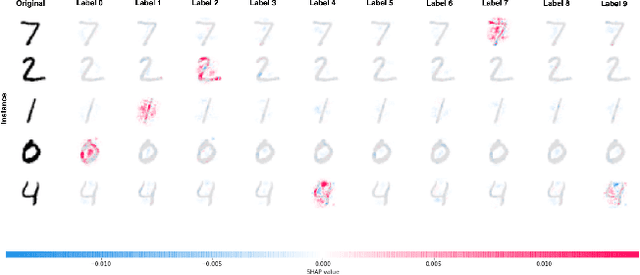

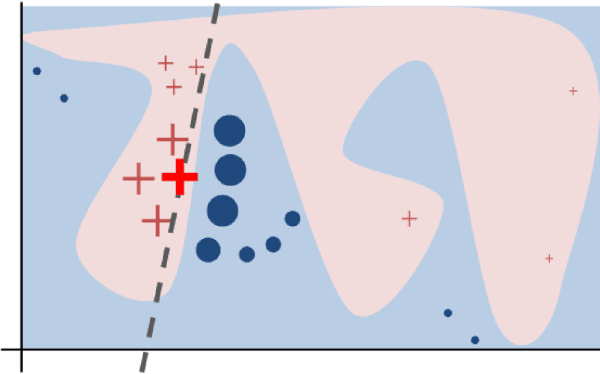

Detecting latent structure within a dataset is a crucial step in analyzing it. However, existing state-of-the-art techniques for subclass discovery fall short in one of two ways: either they can detect only very small numbers of outliers, or they lack the statistical power to handle complex data such as images or audio. This paper proposes a solution to the subclass discovery problem: by leveraging instance explanation methods, an existing classifier can be extended to detect latent classes via differences in the classifier's internal decisions about each instance. The approach works not only with simple classification techniques but also with deep neural networks, making it a powerful and flexible way to detect latent structure within datasets. In effect, the technique projects the dataset into the classifier's "explanation space," and preliminary results show that it outperforms the baseline for the detection of latent classes even with limited processing. This paper also presents a pipeline for analyzing classifiers automatically and a web application for interactively exploring the results of the technique.
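The idea of projecting instances into an "explanation space" and looking for structure there can be illustrated with a minimal sketch. This is not the paper's pipeline: the toy data, the helper names (`blob`, `train_logreg`, `explain`, `two_means`), and the choice of explanation are all assumptions made for illustration. In particular, the per-feature contribution `w_i * x_i` of a linear classifier stands in for a general instance explanation method, and a simple 2-means clustering stands in for whatever subclass detection step is applied to the explanations.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the toy data is reproducible

def blob(cx, cy, n=20, sigma=0.3):
    """Draw n two-dimensional points around the centre (cx, cy)."""
    return [(rng.gauss(cx, sigma), rng.gauss(cy, sigma)) for _ in range(n)]

# Hypothetical toy data: label 1 secretly contains two unlabeled subclasses.
class0 = blob(0.0, 0.0)
sub_a = blob(3.0, 0.0)  # hidden subclass A, labeled 1
sub_b = blob(0.0, 3.0)  # hidden subclass B, labeled 1
X = class0 + sub_a + sub_b
y = [0] * len(class0) + [1] * (len(sub_a) + len(sub_b))

def train_logreg(X, y, lr=0.1, epochs=500):
    """Fit a two-feature logistic regression by batch gradient descent."""
    w, b, n = [0.0, 0.0], 0.0, len(X)
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for (x1, x2), t in zip(X, y):
            p = 1.0 / (1.0 + math.exp(-(w[0] * x1 + w[1] * x2 + b)))
            gw[0] += (p - t) * x1
            gw[1] += (p - t) * x2
            gb += p - t
        w = [w[0] - lr * gw[0] / n, w[1] - lr * gw[1] / n]
        b -= lr * gb / n
    return w, b

def explain(w, x):
    """Stand-in explanation: each feature's contribution to the logit."""
    return (w[0] * x[0], w[1] * x[1])

def dist2(p, q):
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2

def two_means(points, iters=20):
    """Plain 2-means, initialised from the first and last point."""
    centroids = [points[0], points[-1]]
    assign = [0] * len(points)
    for _ in range(iters):
        assign = [min((0, 1), key=lambda k: dist2(p, centroids[k]))
                  for p in points]
        for k in (0, 1):
            members = [p for p, a in zip(points, assign) if a == k]
            if members:
                centroids[k] = (sum(m[0] for m in members) / len(members),
                                sum(m[1] for m in members) / len(members))
    return assign

w, b = train_logreg(X, y)
# Project only the instances labeled 1 into explanation space, then cluster.
explanations = [explain(w, x) for x, t in zip(X, y) if t == 1]
clusters = two_means(explanations)
print(clusters)
```

In this toy setting the two hidden subclasses receive the label 1 for different reasons (one through the first feature, the other through the second), so their explanations fall into separate groups. With a deep network, the linear contribution would be replaced by an instance explanation method suited to that model.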
