Chatbots in Knowledge-Intensive Contexts: Comparing Intent and LLM-Based Systems

Feb 07, 2024

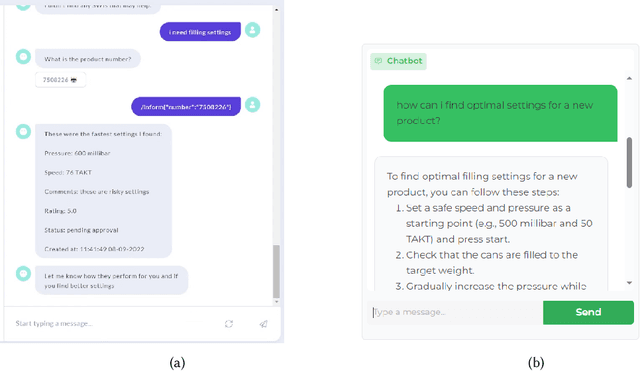

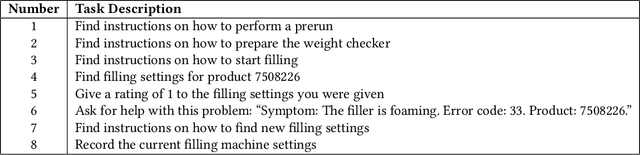

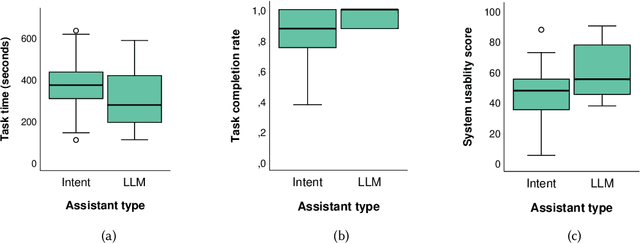

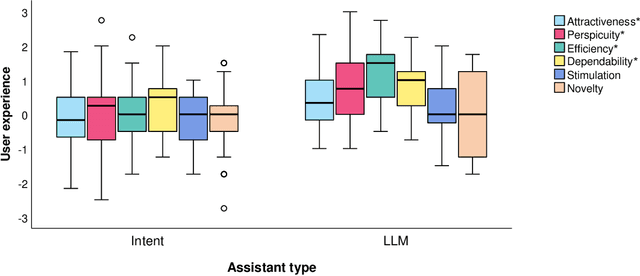

Cognitive assistants (CAs) are chatbots that provide context-aware support to human workers in knowledge-intensive tasks. Traditionally, CAs respond in specific ways to predefined user intents and conversation patterns. However, this rigidity copes poorly with the diversity of natural language. Recent advances in natural language processing (NLP), which power large language models (LLMs) such as GPT-4, Llama2, and Gemini, could enable CAs to converse in a more flexible, human-like manner. However, the additional degrees of freedom may have unforeseen consequences, especially in knowledge-intensive contexts where accuracy is crucial. As a preliminary step toward assessing the potential of LLMs in these contexts, we conducted a user study comparing an LLM-based CA to an intent-based system in terms of interaction efficiency, user experience, workload, and usability. The study revealed that the LLM-based CA delivered better user experience, task completion rate, usability, and perceived performance than the intent-based system, suggesting that switching NLP techniques should be investigated further.
