Characterization of Real-time Haptic Feedback from Multimodal Neural Network-based Force Estimates during Teleoperation


Sep 23, 2021

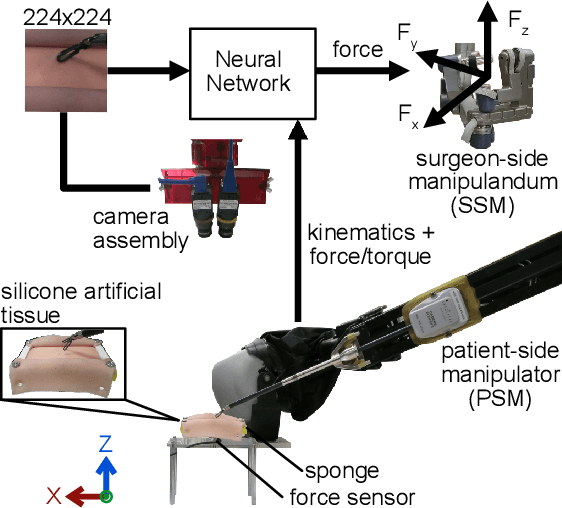

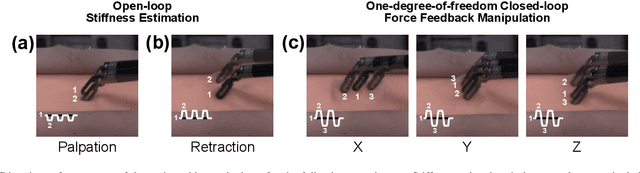

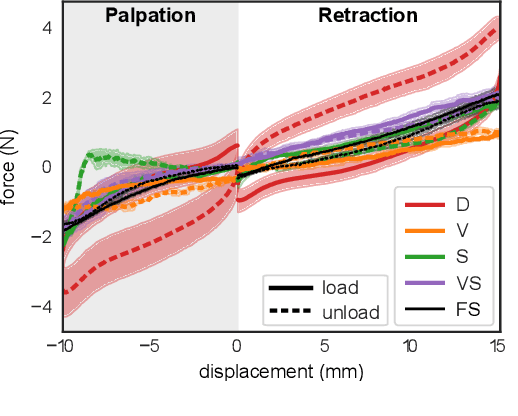

Force estimation using neural networks is a promising approach to enable haptic feedback in minimally invasive surgical robots without end-effector force sensors. Various network architectures have been proposed, but none have been tested in real time with surgical-like manipulations. Thus, questions remain about the real-time transparency and stability of force feedback from neural network-based force estimates. We characterize the real-time impedance transparency and stability of force feedback rendered on a da Vinci Research Kit teleoperated surgical robot using neural networks with vision-only, state-only, or state and vision inputs. Networks were trained on an existing dataset of teleoperated manipulations without force feedback. We measured real-time transparency without rendered force feedback by commanding the patient-side robot to perform vertical retractions and palpations on artificial silicone tissue. To measure stability and transparency during teleoperation with force feedback to the operator, we modeled a one-degree-of-freedom human and surgeon-side manipulandum that moved the patient-side robot to perform manipulations. We found that the multimodal vision and state network displayed more transparent impedance than single-modality networks under no force feedback. State-based networks displayed instabilities during manipulation with force feedback. This instability was reduced in the multimodal network when it was refit with additional data collected during teleoperation with force feedback.
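One common way to quantify impedance transparency during palpation is to fit the same linear tissue model to the sensor-measured force and to the network-estimated force, then compare the fitted parameters. The abstract does not state the exact metric used, so the following is a minimal sketch assuming a spring-damper model f ≈ k·x + b·v with no offset term; `Impedance`, `fit_impedance`, and `stiffness_error` are hypothetical names, and the synthetic data are illustrative only, not results from the paper.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Impedance:
    stiffness: float  # k, N/m
    damping: float    # b, N*s/m


def fit_impedance(x: Sequence[float], v: Sequence[float], f: Sequence[float]) -> Impedance:
    """Least-squares fit of f ~= k*x + b*v (assumed linear tissue model, no offset)."""
    if not (len(x) == len(v) == len(f)):
        raise ValueError("x, v, f must have equal length")
    # Entries of the 2x2 normal equations [sxx sxv; sxv svv] [k; b] = [sxf; svf]
    sxx = sum(xi * xi for xi in x)
    svv = sum(vi * vi for vi in v)
    sxv = sum(xi * vi for xi, vi in zip(x, v))
    sxf = sum(xi * fi for xi, fi in zip(x, f))
    svf = sum(vi * fi for vi, fi in zip(v, f))
    det = sxx * svv - sxv * sxv
    if abs(det) < 1e-18:
        raise ValueError("position and velocity are collinear; cannot separate k and b")
    # Cramer's rule for the two unknowns
    k = (sxf * svv - sxv * svf) / det
    b = (sxx * svf - sxv * sxf) / det
    return Impedance(k, b)


def stiffness_error(reference: Impedance, estimate: Impedance) -> float:
    """Relative stiffness mismatch; 0 means the estimate renders the same stiffness."""
    return abs(estimate.stiffness - reference.stiffness) / abs(reference.stiffness)


if __name__ == "__main__":
    # Synthetic palpation: 10 mm sinusoidal indentation (hypothetical values).
    ts = [i * 0.01 for i in range(200)]
    x = [0.01 * math.sin(t) for t in ts]
    v = [0.01 * math.cos(t) for t in ts]
    f_sensor = [200.0 * xi + 5.0 * vi for xi, vi in zip(x, v)]
    # Hypothetical network estimate that under-reports tissue stiffness.
    f_network = [160.0 * xi + 5.0 * vi for xi, vi in zip(x, v)]

    ref = fit_impedance(x, v, f_sensor)
    est = fit_impedance(x, v, f_network)
    print(round(ref.stiffness, 3), round(est.stiffness, 3), round(stiffness_error(ref, est), 3))
```

Fitting both force signals with the same model isolates the network's contribution: any gap between the two fitted stiffnesses is impedance the operator would feel incorrectly. Real retraction data would also need an offset term and filtering of noisy velocity, which this sketch omits.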
