Characterization of effects of transfer learning across domains and languages

Oct 03, 2022

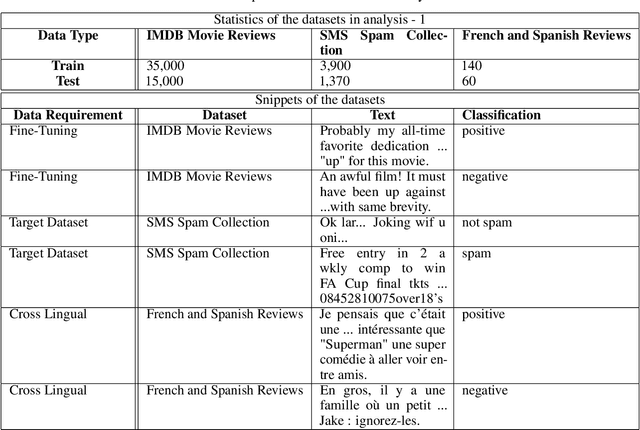

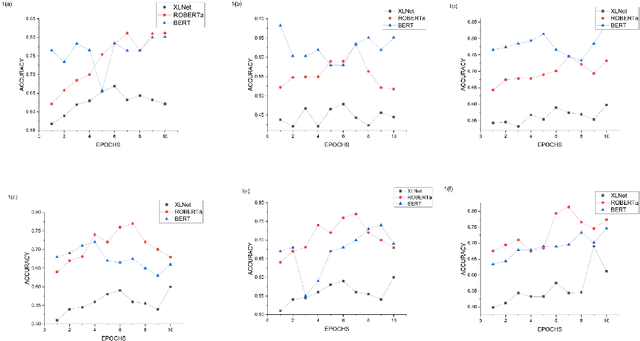

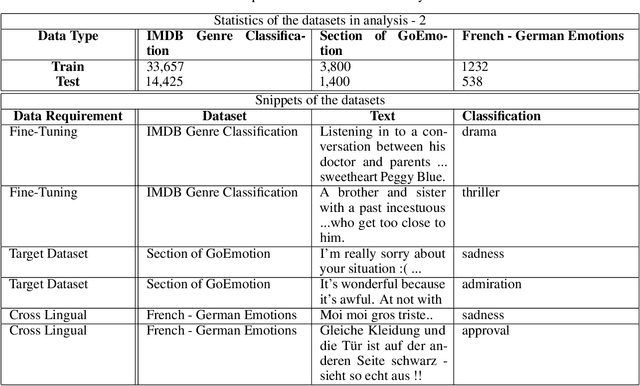

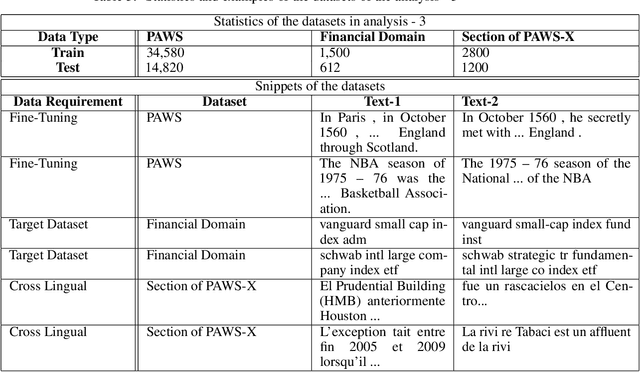

As datasets covering more domains, tasks, and languages continue to expand, transfer learning (TL) from pre-trained neural language models has emerged as a powerful technique. Many studies have shown that transfer learning is effective across different domains and tasks. However, it remains uncertain when a transfer will improve or degrade model performance. To address this uncertainty, we investigate how TL affects the performance of popular pre-trained models such as BERT, RoBERTa, and XLNet on three natural language processing (NLP) tasks. We believe this work will help clarify when and what to transfer across domains, multilingual datasets, and NLP tasks.
