Challenging the Black Box: A Comprehensive Evaluation of Attribution Maps of CNN Applications in Agriculture and Forestry

Feb 18, 2024

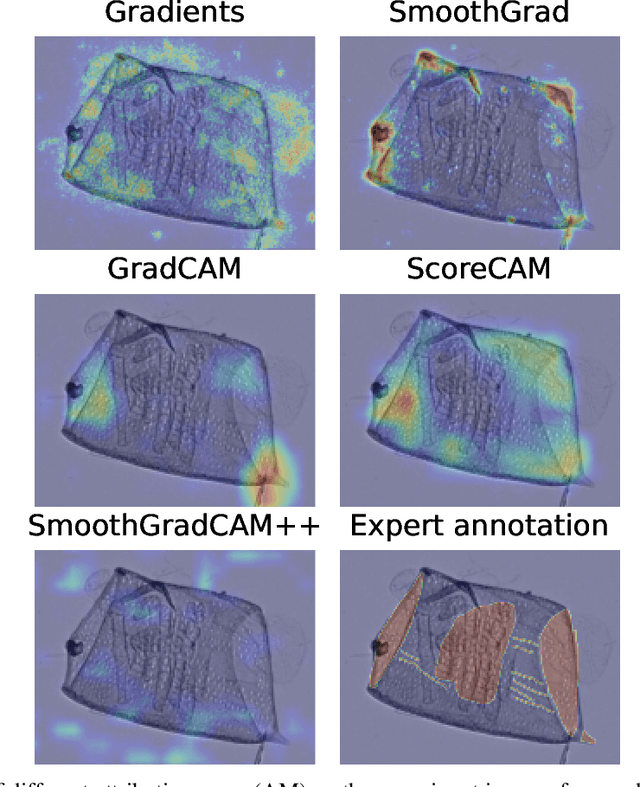

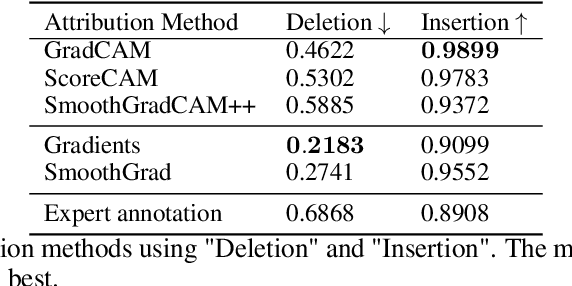

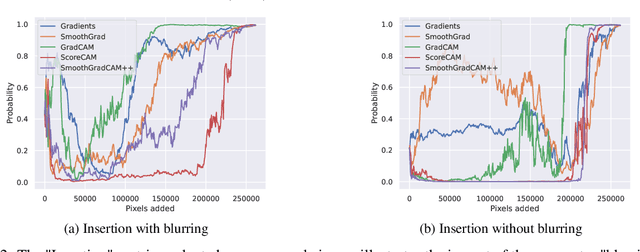

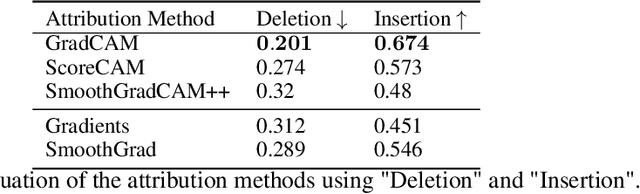

In this study, we explore the explainability of neural networks in agriculture and forestry, focusing on two applications: fertilizer treatment classification and wood identification. We address the opaque nature of these models, often described as 'black boxes', through an extensive evaluation of state-of-the-art attribution maps (AMs), also known as class activation maps (CAMs) or saliency maps. Our qualitative and quantitative analysis of these AMs uncovers critical practical limitations. AMs frequently fail to highlight crucial features consistently, and they often misalign with the features that domain experts consider important. These discrepancies raise substantial questions about the utility of AMs for understanding the decision-making process of neural networks. Our study provides insights into the trustworthiness and practicality of AMs within the agriculture and forestry sectors, supporting a better understanding of neural networks in these application areas.
