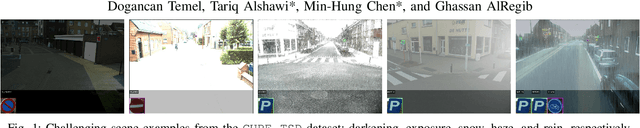

Challenging Environments for Traffic Sign Detection: Reliability Assessment under Inclement Conditions

Feb 19, 2019

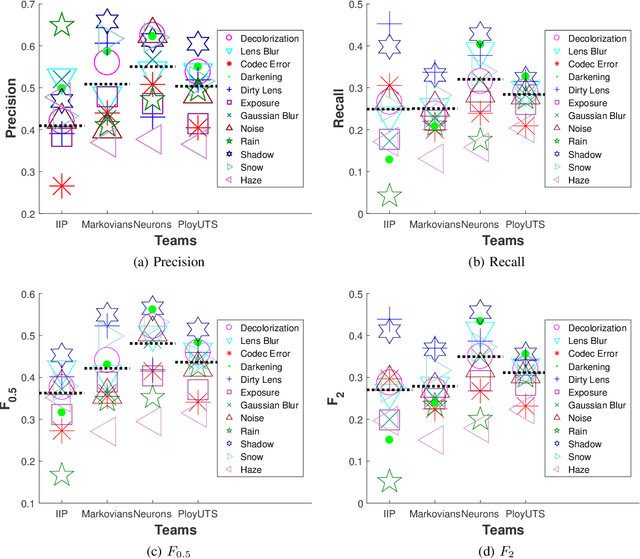

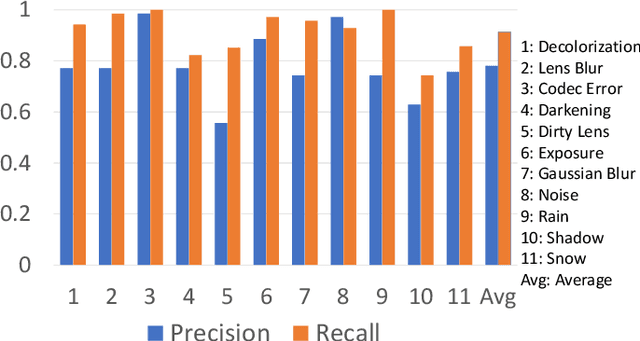

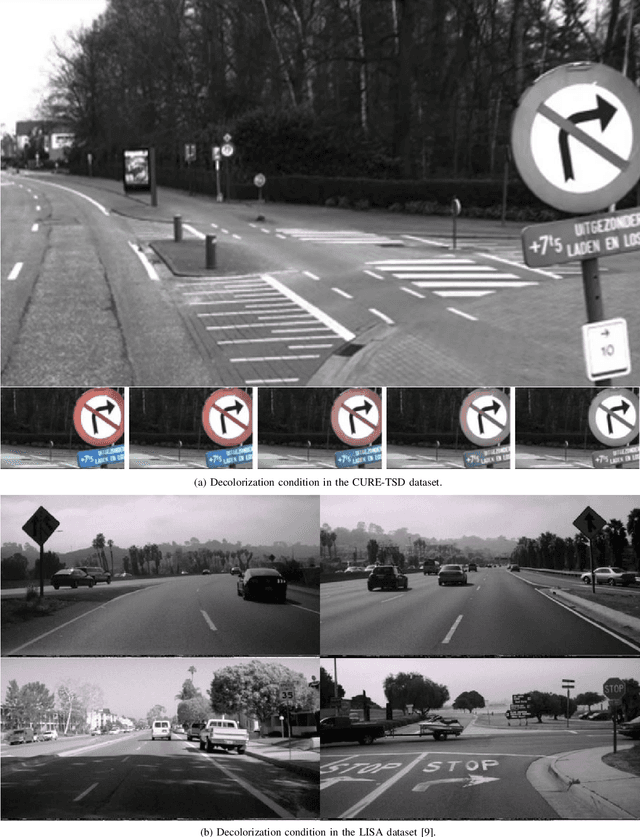

State-of-the-art algorithms successfully localize and recognize traffic signs in existing datasets, but those datasets are limited in the type and severity of the challenging conditions they cover. As a result, the performance of traffic sign detection algorithms under these overlooked conditions cannot be estimated. Another shortcoming of existing datasets is their limited use of temporal information and the unavailability of consecutive frames and annotations. To overcome these shortcomings, we generated the CURE-TSD video dataset and hosted the first IEEE Video and Image Processing (VIP) Cup within the IEEE Signal Processing Society. In this paper, we provide a detailed description of the CURE-TSD dataset, analyze the characteristics of the top-performing algorithms, and provide a performance benchmark. Moreover, we investigate the robustness of the benchmarked algorithms with respect to sign size, challenge type, and severity. The benchmarked algorithms are based on state-of-the-art and custom convolutional neural networks, and they achieved a precision of 0.55, a recall of 0.32, an F0.5 score of 0.48, and an F2 score of 0.35. Experimental results show that the benchmarked algorithms are highly sensitive to the tested challenging conditions, which result in an average performance drop of 0.17 in precision and a drop of 0.28 in recall under severe conditions. The dataset is publicly available at https://ghassanalregib.com/curetsd/.
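
The F0.5 and F2 scores quoted above weight precision and recall differently: F0.5 favors precision, F2 favors recall. The abstract does not state how the scores were aggregated, so the following is only a minimal sketch that assumes the standard F-beta definition applied to the overall precision and recall; the function name `f_beta` is illustrative and not taken from the paper or its code. Under that assumption, the reported precision (0.55) and recall (0.32) reproduce the reported scores to two decimals.

```python
def f_beta(precision: float, recall: float, beta: float) -> float:
    """Standard F-beta score: weighted harmonic mean of precision and recall.

    beta < 1 emphasizes precision, beta > 1 emphasizes recall.
    Assumption: the standard definition is used; the paper's exact
    aggregation procedure is not given in the abstract.
    """
    if beta <= 0:
        raise ValueError("beta must be positive")
    beta_sq = beta * beta
    denominator = beta_sq * precision + recall
    if denominator == 0:
        # Both precision and recall are zero, so the score is defined as zero.
        return 0.0
    return (1 + beta_sq) * precision * recall / denominator


# Values reported in the abstract.
precision, recall = 0.55, 0.32

f_half = f_beta(precision, recall, beta=0.5)
f_two = f_beta(precision, recall, beta=2.0)

print(f"F0.5 = {f_half:.2f}")  # F0.5 = 0.48
print(f"F2   = {f_two:.2f}")   # F2   = 0.35
```

Because recall (0.32) is well below precision (0.55), the recall-weighted F2 score ends up lower than the precision-weighted F0.5 score.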
