CausalKG: Causal Knowledge Graph Explainability using interventional and counterfactual reasoning

Jan 06, 2022

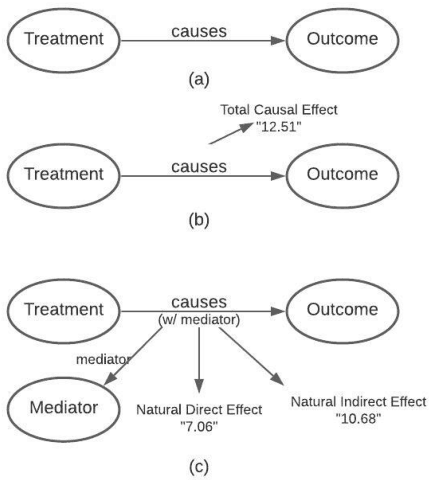

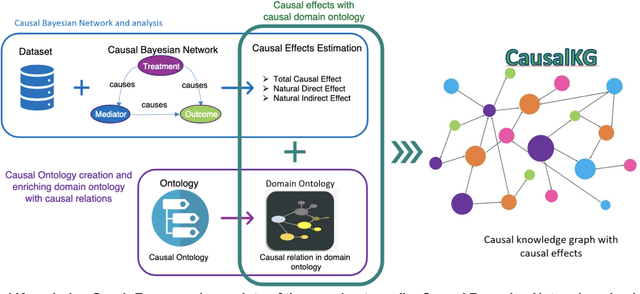

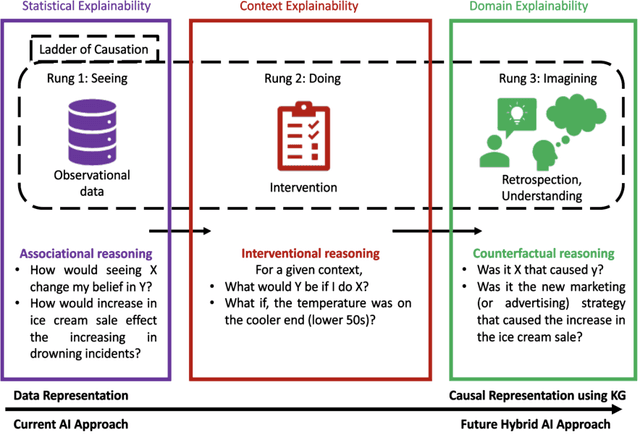

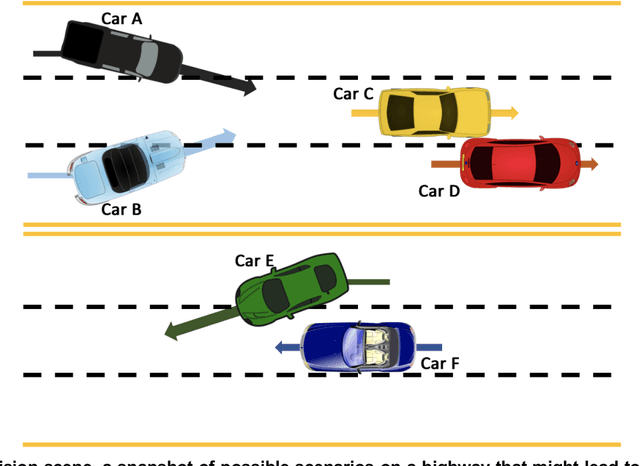

Humans use causality and hypothetical retrospection in their daily decision-making, planning, and understanding of life events. When reflecting on a given situation, the human mind asks questions such as "What was the cause of the given situation?", "What would be the effect of my action?", or "Which action led to this effect?". It develops a causal model of the world, which learns from fewer data points, makes inferences, and contemplates counterfactual scenarios. These unseen, unknown scenarios are known as counterfactuals. AI algorithms use a representation based on knowledge graphs (KGs) to represent the concepts of time, space, and facts. A KG is a graphical data model that captures the semantic relationships between entities such as events, objects, or concepts. Existing KGs, such as ConceptNet and WordNet, represent causal relationships extracted from text based on linguistic patterns of noun phrases for causes and effects. This representation of causality makes it challenging to support counterfactual reasoning. A richer, KG-based representation of causality in AI systems is needed for better explainability and for support of interventional and counterfactual reasoning, leading to improved understanding of AI systems by humans. Representing causality requires a higher-level representation framework that defines the context, the causal information, and the causal effects. The proposed Causal Knowledge Graph (CausalKG) framework leverages recent progress in causality and KGs toward explainability. CausalKG intends to address the lack of a domain-adaptable causal model and to represent complex causal relations using a hyper-relational graph representation in the KG. We show that CausalKG's interventional and counterfactual reasoning can be used by an AI system for domain explainability.
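As a rough illustration of what a hyper-relational causal statement might look like, the sketch below attaches key-value qualifiers (context, effect size) to a cause-effect edge instead of storing a bare triple. This is not the CausalKG implementation: the class names `CausalStatement` and `ToyCausalKG`, the qualifier keys, and the entities and numeric values are all hypothetical and chosen only for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CausalStatement:
    """One hyper-relational statement: a (cause, relation, effect) triple
    plus key-value qualifiers attached to the edge itself."""
    cause: str
    effect: str
    relation: str = "causes"
    qualifiers: Dict[str, object] = field(default_factory=dict)


class ToyCausalKG:
    """Hypothetical in-memory store for qualified causal statements."""

    def __init__(self) -> None:
        self.statements: List[CausalStatement] = []

    def add(self, cause: str, effect: str, **qualifiers: object) -> None:
        # Qualifiers (e.g. context, effect size) live on the statement,
        # which a plain subject-predicate-object triple cannot express.
        self.statements.append(
            CausalStatement(cause, effect, qualifiers=dict(qualifiers))
        )

    def effects_of(self, cause: str, **required: object) -> List[CausalStatement]:
        # Return statements with this cause whose qualifiers match
        # every required key-value pair.
        return [
            s for s in self.statements
            if s.cause == cause
            and all(s.qualifiers.get(k) == v for k, v in required.items())
        ]


kg = ToyCausalKG()
# Illustrative, made-up entities and effect sizes.
kg.add("wet_road", "skidding", context="driving", effect_size=0.4)
kg.add("wet_road", "slipping", context="walking", effect_size=0.2)

driving_effects = [s.effect for s in kg.effects_of("wet_road", context="driving")]
print(driving_effects)
```

Keeping the context on the edge lets the same cause map to different effects in different settings, which is the kind of distinction a triple-only representation loses. The sketch covers representation only; it does not perform interventional or counterfactual inference.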
