Causality Learning With Wasserstein Generative Adversarial Networks

Paper and Code

Jun 03, 2022

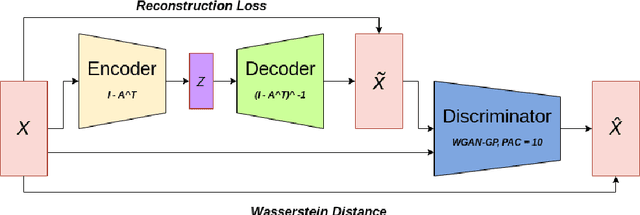

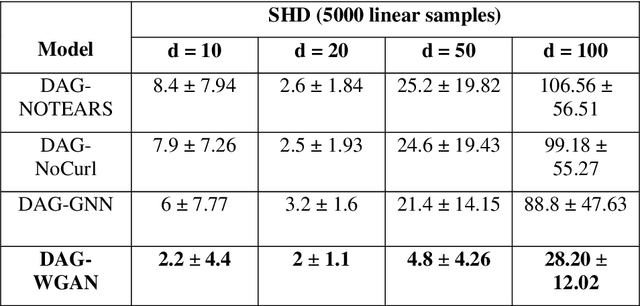

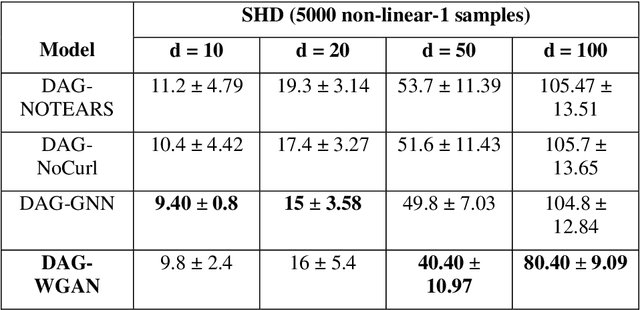

Conventional methods for learning causal structure from data face significant challenges because the search space is combinatorial. Recently, the problem has been reformulated as a continuous optimization problem with an acyclicity constraint for learning Directed Acyclic Graphs (DAGs). This formulation allows deep generative models to be used for causal structure learning, so that the relations between data sample distributions and DAGs can be captured more effectively. However, no study so far has experimented with the Wasserstein distance in the context of causal structure learning. Our model, named DAG-WGAN, combines a Wasserstein-based adversarial loss with an acyclicity constraint in an auto-encoder architecture. It learns causal structures while simultaneously improving its data generation capability. We compare the performance of DAG-WGAN with other models that do not involve the Wasserstein metric in order to identify its contribution to causal structure learning. In our experiments, DAG-WGAN performs better on high-cardinality data.
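To make the idea of a continuous acyclicity constraint concrete, here is a minimal sketch of one polynomial penalty used in the continuous-optimization literature, h(W) = tr((I + (W∘W)/d)^d) - d, which is zero exactly when the weighted adjacency matrix W describes a DAG. The abstract does not say which form DAG-WGAN uses, so the choice of formula and the function name `acyclicity_penalty` are assumptions made for illustration only.

```python
def acyclicity_penalty(weights):
    """Return tr((I + (W*W)/d)^d) - d for a square weight matrix W.

    Illustrative only: the value is 0 when W has no directed cycles
    and strictly positive otherwise.
    """
    d = len(weights)
    identity = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]
    # Base matrix M = I + (W ∘ W) / d; squaring makes every entry non-negative.
    base = [
        [identity[i][j] + weights[i][j] ** 2 / d for j in range(d)]
        for i in range(d)
    ]
    # Compute M^d by repeated multiplication, starting from the identity.
    power = identity
    for _ in range(d):
        power = [
            [sum(power[i][k] * base[k][j] for k in range(d)) for j in range(d)]
            for i in range(d)
        ]
    # Cycles contribute extra mass to the diagonal, so the trace exceeds d.
    return sum(power[i][i] for i in range(d)) - d


# A chain 0 -> 1 -> 2 is acyclic; adding the edge 2 -> 0 closes a cycle.
chain = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]]
cycle = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 0.0, 0.0]]
chain_penalty = acyclicity_penalty(chain)
cycle_penalty = acyclicity_penalty(cycle)
```

Because the penalty is a smooth function of the entries of W, it can be added to a training loss (for example through an augmented Lagrangian) and minimized with gradient-based optimizers instead of searching over discrete graphs.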
