Can Transfer Entropy Infer Causality in Neuronal Circuits for Cognitive Processing?


Jan 22, 2019

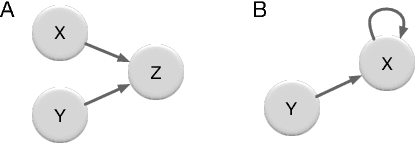

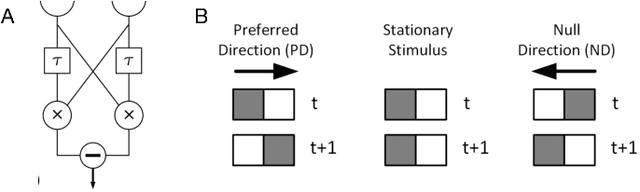

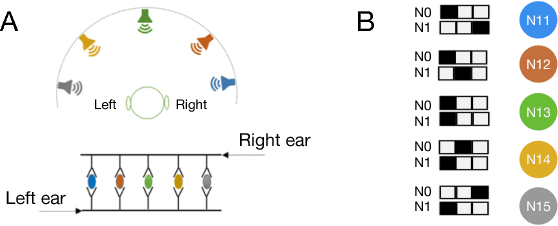

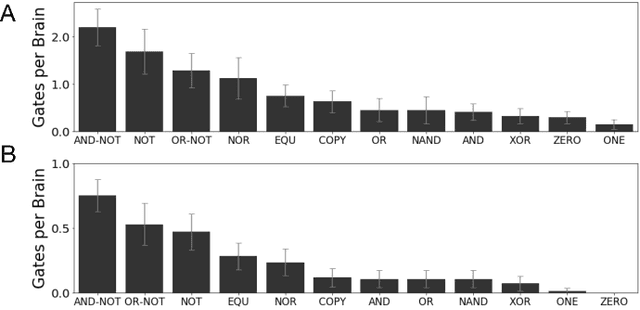

Finding the causes of observed effects and establishing causal relationships between events has been, and remains, an essential element of science and philosophy. Automated methods that can detect causal relationships would be very welcome, but practical methods that can infer causality are difficult to find and remain the subject of ongoing research. While Shannon information only detects correlation, there are several information-theoretic notions of "directed information" that have successfully detected causality in some systems, in particular in the neuroscience community. However, recent work has shown that some directed information measures can misestimate the extent of causal relations, or even fail to identify existing cause-effect relations between components of systems. This is especially likely if neurons contribute in a cryptographic manner to influence the effector neuron, as in XOR-like logic, where no single input on its own carries information about the output. Here, we test how often cryptographic logic emerges in an evolutionary process that generates artificial neural circuits for two fundamental cognitive tasks: motion detection and sound localization. Our results suggest that whether or not transfer entropy measures of causality are misleading depends strongly on the cognitive task considered. These results emphasize the importance of understanding the fundamental logic processes that contribute to cognitive processing, and of quantifying their relevance in any given nervous system.
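The following minimal Python sketch makes the cryptographic failure mode concrete. It is not the paper's method or estimator. It computes transfer entropy exactly from a known joint distribution instead of estimating it from recorded activity. It assumes a history length of one, T(X → Y) = I(Y_{t+1}; X_t | Y_t), and independent fair binary inputs. The helper names `entropy`, `cond_mutual_info`, and `gate_joint` are hypothetical.

For an XOR effector, each input taken alone has zero transfer entropy to the output, even though the two inputs jointly determine it. An AND gate, by contrast, leaves a nonzero pairwise signal.

```python
from collections import defaultdict
from itertools import product
from math import log2


def entropy(joint, idx):
    """Shannon entropy (bits) of the marginal over the variable positions in idx."""
    marginal = defaultdict(float)
    for outcome, p in joint.items():
        marginal[tuple(outcome[i] for i in idx)] += p
    return -sum(p * log2(p) for p in marginal.values() if p > 0)


def cond_mutual_info(joint, a, b, c):
    """I(A; B | C) = H(A,C) + H(B,C) - H(A,B,C) - H(C), in bits."""
    return (entropy(joint, a + c) + entropy(joint, b + c)
            - entropy(joint, a + b + c) - entropy(joint, c))


def gate_joint(gate):
    """Exact joint distribution over (y_t, x_t, z_t, y_next) with y_next = gate(x_t, z_t).

    Assumption: x_t, z_t and the effector's previous state y_t are independent fair bits.
    """
    return {(y, x, z, gate(x, z)): 1 / 8 for y, x, z in product((0, 1), repeat=3)}


# Positions of each variable inside an outcome tuple.
Y_PREV, X, Z, Y_NEXT = 0, 1, 2, 3

xor_joint = gate_joint(lambda x, z: x ^ z)  # "cryptographic" logic
and_joint = gate_joint(lambda x, z: x & z)  # non-cryptographic comparison

# Transfer entropy with history length 1: T(S -> Y) = I(Y_next; S | Y_prev).
te_xor_x = cond_mutual_info(xor_joint, [Y_NEXT], [X], [Y_PREV])
te_xor_z = cond_mutual_info(xor_joint, [Y_NEXT], [Z], [Y_PREV])
te_xor_xz = cond_mutual_info(xor_joint, [Y_NEXT], [X, Z], [Y_PREV])
te_and_x = cond_mutual_info(and_joint, [Y_NEXT], [X], [Y_PREV])

print(f"XOR: T(X->Y)={te_xor_x:.3f}  T(Z->Y)={te_xor_z:.3f}  T(XZ->Y)={te_xor_xz:.3f}")
print(f"AND: T(X->Y)={te_and_x:.3f}")
```

For XOR, both pairwise transfer entropies come out as zero, while the joint quantity is one bit. A pairwise analysis would therefore miss both true causes. Estimating these quantities from finite recordings, as in real circuits, adds bias and variance that this exact calculation sidesteps.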
