Calliope -- A Polyphonic Music Transformer


Jul 08, 2021

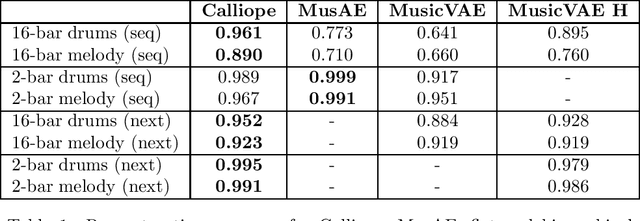

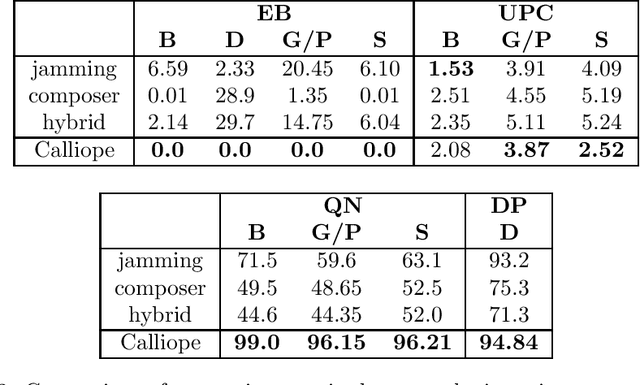

The polyphonic nature of music makes applying deep learning to music modelling a challenging task. The Transformer architecture, however, seems to be a good fit for this kind of data. In this work, we present Calliope, a novel Transformer-based autoencoder for the efficient modelling of multi-track sequences of polyphonic music. Experiments show that our model improves on the state of the art in musical sequence reconstruction and generation, with particularly good results on long sequences.

* Accepted at ESANN 2021
