Brief Introduction to Contrastive Learning Pretext Tasks for Visual Representation


Oct 06, 2022

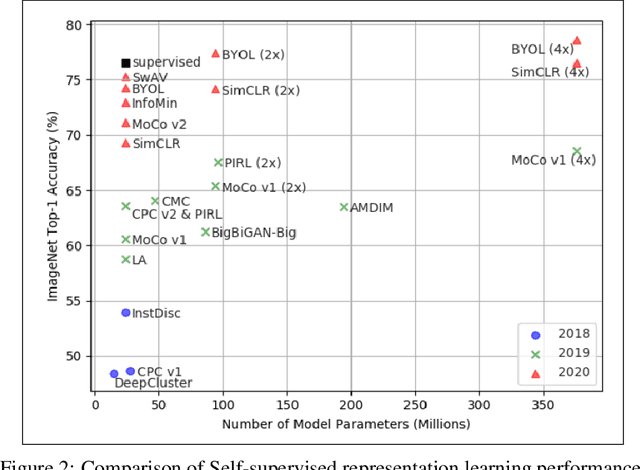

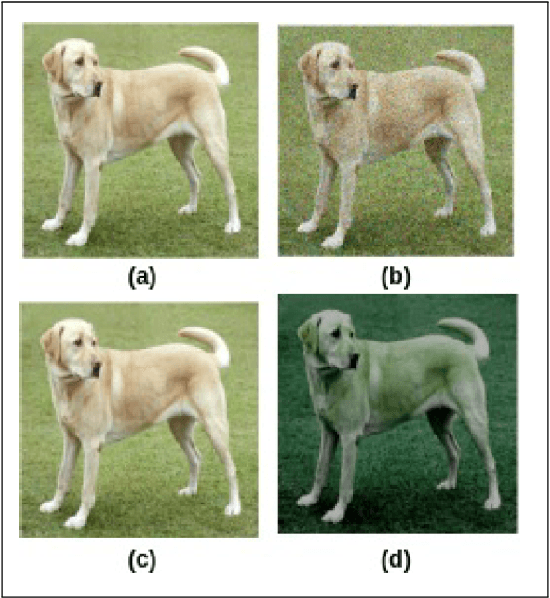

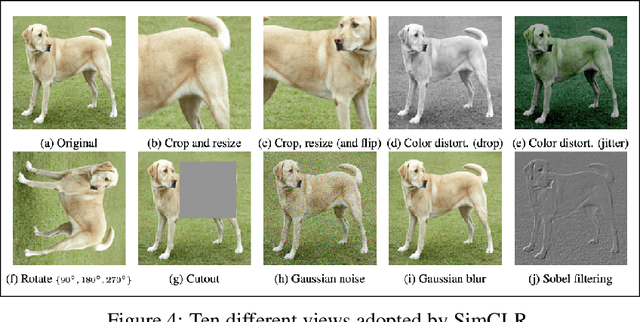

Learning strong visual feature representations from images or videos for practical applications generally requires large-scale human-annotated data to train deep neural networks. However, gathering and annotating such data is expensive. Because unlabeled data is abundant in the real world, self-defined pseudo labels can be introduced as supervision to sidestep this issue. Self-supervised learning, and contrastive learning in particular, is a subset of unsupervised learning methods that has grown popular in computer vision, natural language processing, and other domains. The purpose of contrastive learning is to embed augmented views of the same sample close to each other while pushing apart views of different samples. In the following sections, we introduce the general formulation shared by the different learning paradigms. We then present some recently published contrastive learning strategies that focus on pretext tasks for visual representation.
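To make the contrastive objective concrete, here is a minimal sketch of one common formulation, an NT-Xent (InfoNCE-style) loss over two augmented views of each sample. It is an illustration under stated assumptions, not necessarily the formulation used in the paper: the function name `nt_xent_loss`, the `temperature` default, and the use of random vectors as stand-ins for encoder outputs are all hypothetical choices made for this example.

```python
import numpy as np


def nt_xent_loss(z1, z2, temperature=0.5):
    """NT-Xent style loss for two batches of embeddings.

    z1[i] and z2[i] are assumed to be embeddings of two augmented views
    of the same sample; every other row in the batch acts as a negative.
    """
    n = z1.shape[0]
    z = np.concatenate([z1, z2], axis=0)               # (2N, D)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)   # unit length, so dot product = cosine
    sim = (z @ z.T) / temperature                      # (2N, 2N) scaled similarities
    np.fill_diagonal(sim, -np.inf)                     # a view is never its own negative

    # Row i's positive is the other view of the same sample.
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])

    # Numerically stable log-softmax over each row.
    row_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - row_max - np.log(
        np.exp(sim - row_max).sum(axis=1, keepdims=True)
    )
    return -log_prob[np.arange(2 * n), pos].mean()


# Illustrative usage with random vectors standing in for encoder outputs.
rng = np.random.default_rng(0)
anchor = rng.normal(size=(8, 16))
aligned = anchor + 0.01 * rng.normal(size=(8, 16))   # views that nearly coincide
unrelated = rng.normal(size=(8, 16))                 # views with no relation

loss_aligned = nt_xent_loss(anchor, aligned)
loss_unrelated = nt_xent_loss(anchor, unrelated)
```

Under these assumptions, the loss is lower when the paired views are embedded close together than when they are unrelated, which is the behavior the objective rewards during training.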
