Boundary Optimizing Network (BON)

Paper and Code

Jan 23, 2018

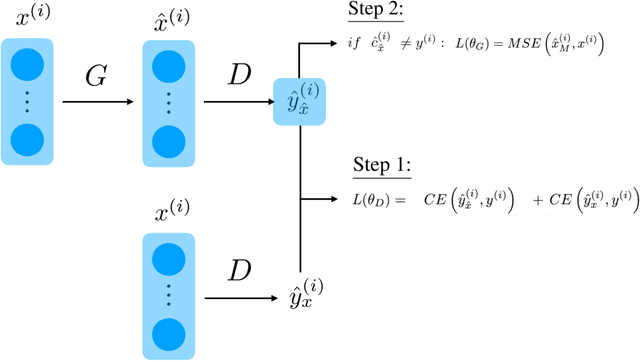

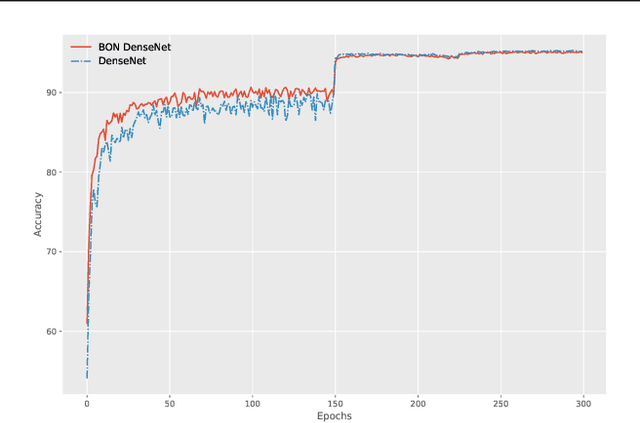

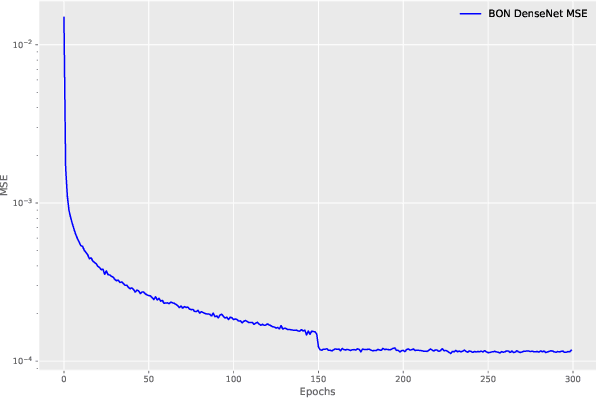

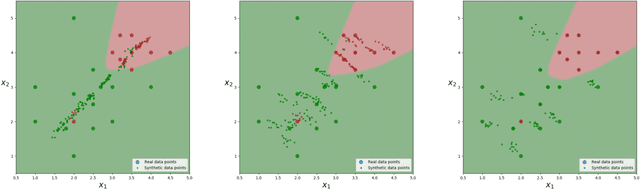

Despite the success that deep neural networks have had in classifying certain datasets, finding optimal solutions that generalize remains a challenge. In this paper, we propose the Boundary Optimizing Network (BON), a new approach to generalization for deep neural networks used in supervised learning. Given a classification network, we propose to use a collaborative generative network that produces new synthetic data points in the form of perturbations of original data points. In this way, we create a data support around each original data point, which prevents decision boundaries from passing too close to the original data points, i.e., it helps prevent overfitting. We show that BON improves convergence on CIFAR-10 using the state-of-the-art DenseNet. We do, however, observe that the generative network suffers from catastrophic forgetting during training, and we therefore propose to use a variation of Memory Aware Synapses to optimize the generative network (called BON++). On the Iris dataset, we visualize the effect of BON++ when the generator does not suffer from catastrophic forgetting and conclude that the approach has the potential to create better boundaries in a higher-dimensional space.
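The idea of a "data support" around each original point can be illustrated with a minimal sketch. The abstract does not specify the generator's architecture or training objective, so the sketch below is only an assumption-laden stand-in: bounded Gaussian noise replaces the learned collaborative generator, and the names `bounded_perturbation`, `augment_with_support`, `epsilon`, and `copies` are hypothetical, not taken from the paper. It shows only the data-side effect: each original point gains labelled synthetic neighbours within a small radius.

```python
import random


def bounded_perturbation(point, raw_delta, epsilon):
    """Add raw_delta to point after shrinking it to an L2 norm of at most epsilon."""
    norm = sum(d * d for d in raw_delta) ** 0.5
    scale = 1.0 if norm <= epsilon else epsilon / norm
    return [p + scale * d for p, d in zip(point, raw_delta)]


def augment_with_support(points, labels, epsilon=0.1, copies=4, seed=0):
    """Return the originals followed by `copies` perturbed neighbours per point.

    Each synthetic point inherits the label of the point it was derived from.
    """
    rng = random.Random(seed)
    out_points, out_labels = list(points), list(labels)
    for point, label in zip(points, labels):
        for _ in range(copies):
            # ASSUMPTION: random noise stands in for the output of the
            # learned generative network described in the abstract.
            raw_delta = [rng.gauss(0.0, epsilon) for _ in point]
            out_points.append(bounded_perturbation(point, raw_delta, epsilon))
            out_labels.append(label)
    return out_points, out_labels


# Two Iris-like samples (four features each) with their class labels.
iris_like = [[5.1, 3.5, 1.4, 0.2], [6.3, 3.3, 6.0, 2.5]]
targets = [0, 2]
aug_points, aug_labels = augment_with_support(iris_like, targets)
```

A classifier trained on `aug_points` and `aug_labels` sees every original point surrounded by same-label neighbours, which is the intuition behind keeping decision boundaries away from the data. In BON itself the perturbations come from a trained generator rather than from random noise.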
