Boggart: Accelerating Retrospective Video Analytics via Model-Agnostic Ingest Processing

Paper and Code

Jun 21, 2021

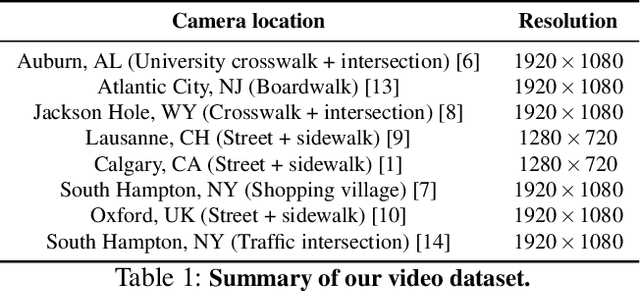

Delivering fast responses to retrospective queries on video datasets is difficult due to the large number of frames to consider and the high cost of running convolutional neural networks (CNNs) on each one. A natural solution is to perform a subset of the necessary computations ahead of time, as video is ingested. However, existing ingest-time systems require knowledge of the specific CNN that will be used in future queries, a challenging requirement given the ever-growing space of CNN architectures and training datasets/methodologies. This paper presents Boggart, a retrospective video analytics system that delivers ingest-time speedups in a model-agnostic manner. Our underlying insight is that traditional computer vision (CV) algorithms are capable of performing computations that can be used to accelerate diverse queries with wide-ranging CNNs. Building on this, at ingest time, Boggart carefully employs a variety of motion tracking algorithms to identify potential objects and their trajectories across frames. Then, at query time, Boggart uses several novel techniques to collect the smallest sample of CNN results required to meet the target accuracy: (1) a clustering strategy to efficiently unearth the inevitable discrepancies between CV- and CNN-generated outputs, and (2) a set of accuracy-preserving propagation techniques to safely extend sampled results along each trajectory. Across many videos, CNNs, and queries, Boggart consistently meets accuracy targets while using CNNs sparingly (on 3-54% of frames).
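
To make the query-time idea concrete, the following is a minimal sketch of extending sparsely sampled CNN results along precomputed trajectories. It is not Boggart's actual algorithm: the function name `propagate_labels`, the fixed `stride` sampling rule, the nearest-sample propagation, and the `detect` callback (a stand-in for a CNN) are all assumptions made for illustration. The abstract's clustering step and accuracy-preserving checks are omitted.

```python
from typing import Callable, Dict, List


def propagate_labels(
    trajectories: Dict[int, List[int]],
    detect: Callable[[int, int], str],
    stride: int,
) -> Dict[int, Dict[int, str]]:
    """Label every (track, frame) pair from a sparse sample of detector calls.

    trajectories maps a hypothetical track id to the sorted frame indices in
    which ingest-time motion tracking saw that object. detect(track_id, frame)
    stands in for running the query's CNN on one frame. stride must be >= 1.
    """
    labels: Dict[int, Dict[int, str]] = {}
    for track_id, frames in trajectories.items():
        # Run the expensive detector only on every stride-th frame of the track.
        sampled = frames[::stride]
        sampled_labels = {f: detect(track_id, f) for f in sampled}
        # Extend each sampled result to the unsampled frames nearest to it.
        labels[track_id] = {
            f: sampled_labels[min(sampled, key=lambda s: abs(s - f))]
            for f in frames
        }
    return labels
```

With two tracks spanning ten (track, frame) pairs and `stride=3`, this sketch calls `detect` four times and fills in the remaining six labels by propagation. A real system would choose the sample adaptively to meet the target accuracy rather than use a fixed stride.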
