Beyond the Gates of Euclidean Space: Temporal-Discrimination-Fusions and Attention-based Graph Neural Network for Human Activity Recognition

Jun 10, 2022

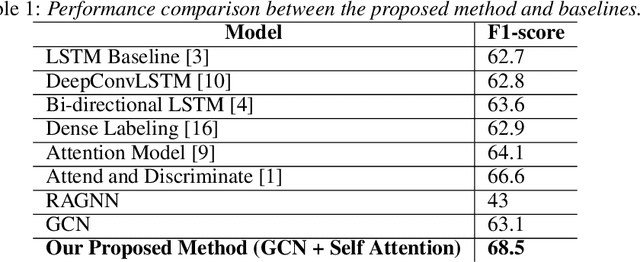

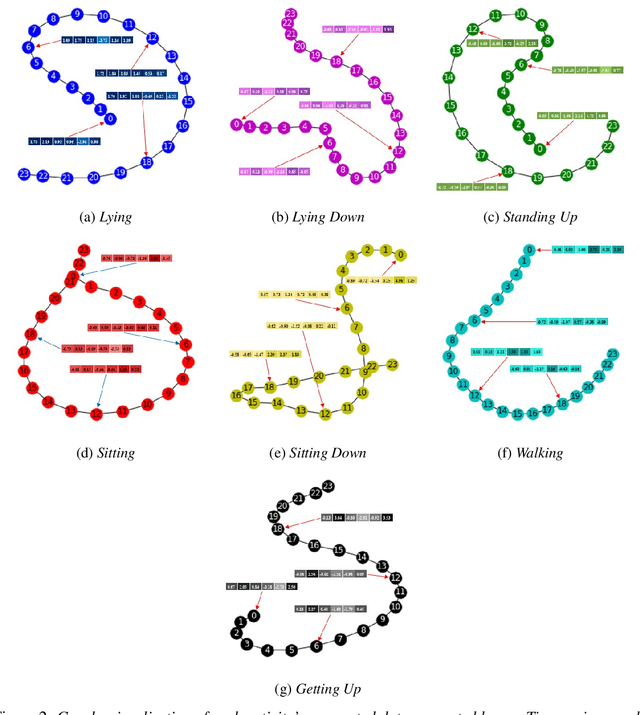

Human activity recognition (HAR) with wearable devices has attracted considerable interest because of its many applications in fitness tracking, wellness screening, and supported living, and a large body of work has followed. Traditional deep learning (DL) has set state-of-the-art performance in the HAR domain. However, it ignores the structure of the data and the association between consecutive time stamps. To address this limitation, we propose an approach based on Graph Neural Networks (GNNs) that structures the input representation and exploits the relations among samples. Even so, a simple graph convolutional network on its own leaves several limiting factors unaddressed, such as inter-class activity issues, skewed class distribution, and a lack of consideration for sensor data priority, all of which harm the HAR model's performance. To improve on current HAR models, we investigate novel possibilities within the graph-structure framework to obtain rich, highly discriminative activity features. We propose a model composed of (1) a time-series-graph module that converts raw data from a HAR dataset into graphs; (2) Graph Convolutional Neural Networks (GCNs) that discover local dependencies and correlations between neighboring nodes; and (3) a self-attention GNN encoder that identifies sensor interactions and data priorities. To the best of our knowledge, this is the first work in HAR to introduce a GNN-based approach that incorporates both the GCN and the attention mechanism. Under a uniform evaluation method, our framework significantly improves performance on a hospital patients' activities dataset compared with other state-of-the-art baseline methods.
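The three components listed in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the abstract does not say how graphs are built or how attention is parameterized, so the windowing scheme, the edge rule (each window linked to its temporal neighbor), the unparameterized dot-product attention, and all function names (`windows_to_graph`, `gcn_layer`, `self_attention`) are assumptions made purely for illustration.

```python
import numpy as np

def windows_to_graph(signal, window, stride):
    """Turn a (T, C) sensor signal into node features and an adjacency matrix.

    Assumption: each sliding window is one node, and consecutive windows
    are connected. The paper's actual graph construction may differ.
    """
    starts = range(0, signal.shape[0] - window + 1, stride)
    x = np.stack([signal[s:s + window].reshape(-1) for s in starts])
    n = x.shape[0]
    adj = np.zeros((n, n))
    idx = np.arange(n - 1)
    adj[idx, idx + 1] = 1.0  # edge to the next window
    adj[idx + 1, idx] = 1.0  # keep the graph undirected
    return x, adj

def gcn_layer(x, adj, weight):
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    a_hat = adj + np.eye(adj.shape[0])          # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ x @ weight, 0.0)

def self_attention(h):
    """Scaled dot-product self-attention over nodes (no learned projections)."""
    scores = h @ h.T / np.sqrt(h.shape[1])
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    w = np.exp(scores)
    w = w / w.sum(axis=1, keepdims=True)
    return w @ h, w

# Synthetic stand-in for a 3-axis accelerometer recording (hypothetical data).
rng = np.random.default_rng(0)
signal = rng.normal(size=(100, 3))
x, adj = windows_to_graph(signal, window=20, stride=10)
weight = rng.normal(size=(x.shape[1], 8)) * 0.1  # random, untrained weights
h = gcn_layer(x, adj, weight)
out, attn = self_attention(h)
```

In this sketch the GCN step mixes each window's features with those of its temporal neighbors, while the attention step lets every node weigh every other node. A trained model would learn `weight` and the attention projections rather than use random or identity values.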
