BEV-LaneDet: Fast Lane Detection on BEV Ground

Oct 12, 2022

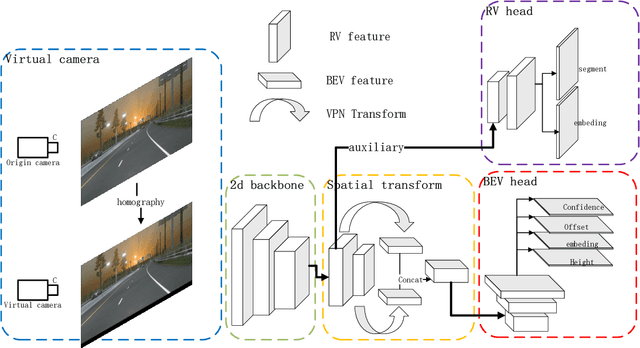

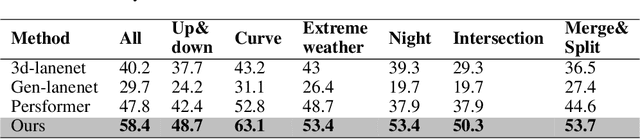

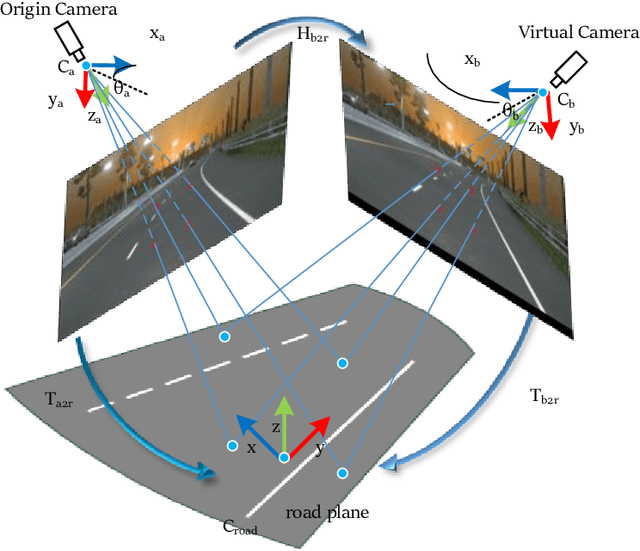

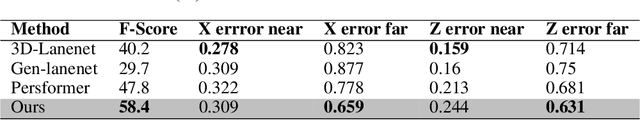

3D lane detection, which is key to vehicle routing, has recently become an active research area in autonomous driving. This work proposes a deployment-oriented monocular 3D lane detector built from only plain CNN and fully connected (FC) layers. The detector achieves state-of-the-art results on the Apollo 3D Lane Synthetic dataset and the real-world OpenLane dataset while running at 96 FPS. We introduce three techniques in our detector: (1) Virtual Camera eliminates the differences in pose among cameras mounted on different vehicles. (2) Spatial Feature Pyramid Transform, a lightweight image-view to bird's-eye-view (BEV) transformer, can exploit multiple scales of image-view feature maps. (3) YOLO-Style Lane Representation strikes a good balance between BEV resolution and runtime speed. It also reduces the training inefficiency caused by class imbalance, which stems from the sparsity of lanes in the detection task. Combining these three techniques, we obtain a 58.4% F1-score on the OpenLane dataset, a 10.6% improvement over the baseline. On the Apollo dataset, we achieve an F1-score of 96.9%, which is 4 percentage points above the best result on the leaderboard. The source code will be released soon.
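The abstract does not say how the Virtual Camera is implemented. As an illustration only, one common way to make images from differently mounted cameras comparable is to warp each image onto a shared reference camera using the homography induced by a flat ground plane. The sketch below assumes a flat ground at Z = 0, a world frame with X right, Y forward and Z up, and pinhole cameras; the function names (`make_camera`, `ground_to_image`, `virtual_camera_homography`, `apply_homography`) are hypothetical and not taken from the paper's code.

```python
import numpy as np


def make_camera(pitch_rad, height_m):
    """Return (R, t) with x_cam = R @ x_world + t for a forward-looking camera.

    Assumed conventions: world X right, Y forward, Z up;
    camera x right, y down, z forward.
    """
    base = np.array([[1.0, 0.0, 0.0],
                     [0.0, 0.0, -1.0],
                     [0.0, 1.0, 0.0]])
    c, s = np.cos(pitch_rad), np.sin(pitch_rad)
    rot_x = np.array([[1.0, 0.0, 0.0],
                      [0.0, c, -s],
                      [0.0, s, c]])
    R = rot_x @ base
    t = -R @ np.array([0.0, 0.0, height_m])  # camera centre sits at (0, 0, height)
    return R, t


def ground_to_image(K, R, t):
    """Homography mapping ground points (X, Y, 1) on the plane Z = 0 to pixels."""
    return K @ np.column_stack((R[:, 0], R[:, 1], t))


def virtual_camera_homography(K_real, R_real, t_real, K_virt, R_virt, t_virt):
    """Homography taking real-camera pixels of ground points to virtual-camera pixels."""
    H_real = ground_to_image(K_real, R_real, t_real)
    H_virt = ground_to_image(K_virt, R_virt, t_virt)
    return H_virt @ np.linalg.inv(H_real)


def apply_homography(H, uv):
    """Apply a 3x3 homography to a single pixel coordinate (u, v)."""
    p = H @ np.array([uv[0], uv[1], 1.0])
    return p[:2] / p[2]
```

With such a homography, every input image could be resampled into the same virtual view before it reaches the network, so the downstream layers see a consistent camera geometry. The mapping is exact only for points on the assumed ground plane; anything above or below it will be displaced.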
