Benchmark Evaluation of Image Fusion algorithms for Smartphone Camera Capture

Paper and Code

Jun 29, 2024

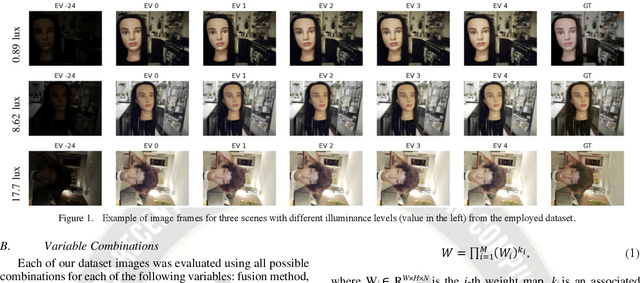

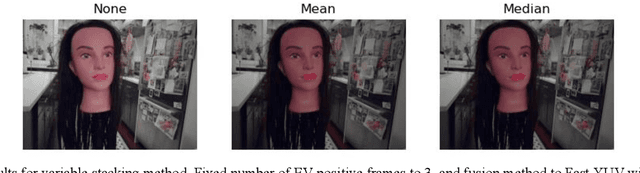

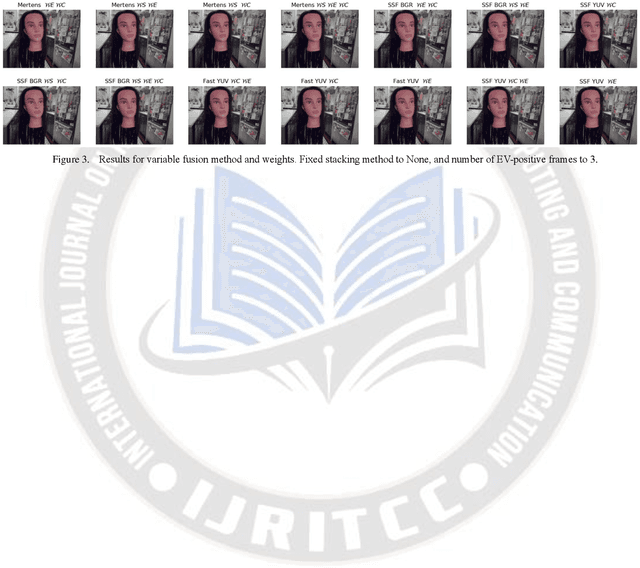

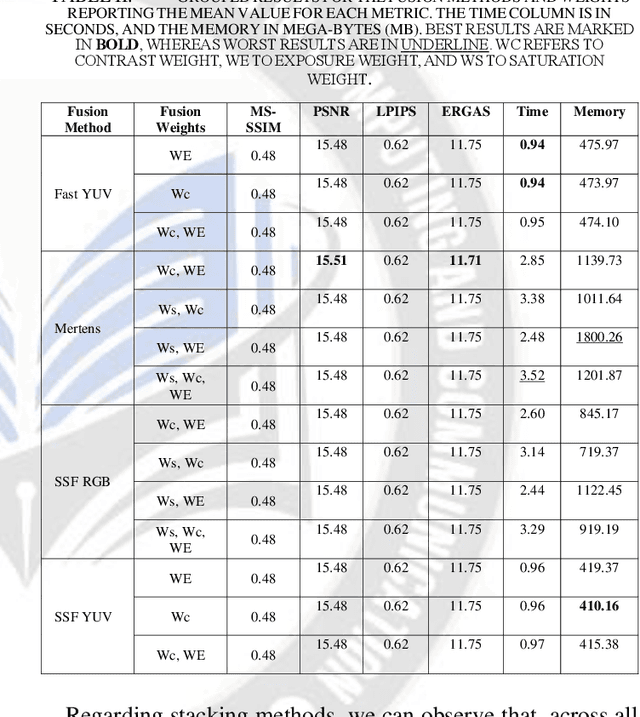

This paper investigates the trade-off between computational resource utilization and image quality in image fusion techniques for smartphone camera capture. The study explores combinations of fusion methods, fusion weights, number of frames, and stacking (a.k.a. merging) techniques using a proprietary dataset of images captured with Motorola smartphones. The objective is to identify configurations that balance computational efficiency with image quality. Our results indicate that multi-scale methods and their single-scale counterparts yield similar image quality measures and runtime, but the single-scale ones use less memory. Furthermore, fusion methods operating in the YUV color space perform better in terms of image quality, resource utilization, and runtime. The study also shows that fusion weights have a small overall impact on image quality, runtime, and memory. Moreover, our results reveal that increasing the number of highly exposed input frames does not necessarily improve image quality and comes with a corresponding increase in computational resource usage and runtime, and that stacking methods, although they reduce memory usage, may compromise image quality. Finally, our work underscores the importance of careful configuration selection for image fusion techniques in constrained environments and offers insights for future image fusion method development, particularly for smartphone applications.
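To make the notion of single-scale fusion on a luma plane concrete, the following is a minimal sketch, not the method evaluated in the paper. It assumes frames are already aligned, that only the Y (luma) plane is fused, and that the fusion weight is a Gaussian "well-exposedness" term centered on mid-gray. The function names, the `sigma` parameter, and the choice of weight are illustrative assumptions; the abstract does not specify them.

```python
import numpy as np


def well_exposedness(y, sigma=0.2):
    """Hypothetical fusion weight: favors luma values close to mid-gray (0.5)."""
    return np.exp(-((y - 0.5) ** 2) / (2.0 * sigma ** 2))


def fuse_luma_single_scale(frames, sigma=0.2, eps=1e-12):
    """Fuse aligned HxW luma frames in [0, 1] with a per-pixel weighted average.

    Single-scale means the weights are applied directly at full resolution,
    with no image pyramid. This is an illustrative sketch under the stated
    assumptions, not the paper's implementation.
    """
    # Stack the input frames into one array of shape (N, H, W).
    stack = np.stack([np.asarray(f, dtype=np.float64) for f in frames])
    # eps keeps the normalization well defined if every weight underflows.
    weights = well_exposedness(stack, sigma) + eps
    # Normalize so the weights at each pixel sum to 1 across frames.
    weights /= weights.sum(axis=0, keepdims=True)
    return (weights * stack).sum(axis=0)


if __name__ == "__main__":
    under = np.full((4, 4), 0.1)  # synthetic under-exposed frame
    over = np.full((4, 4), 0.9)   # synthetic over-exposed frame
    fused = fuse_luma_single_scale([under, over])
    print(fused[0, 0])
```

Because the whole stack is held in memory at once, peak memory in this sketch grows linearly with the number of input frames, which is one reason frame count matters on constrained devices. A multi-scale variant would additionally build pyramids of the frames and weights before blending.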
