Backdoor attacks on DNN and GBDT -- A Case Study from the insurance domain


Dec 11, 2024

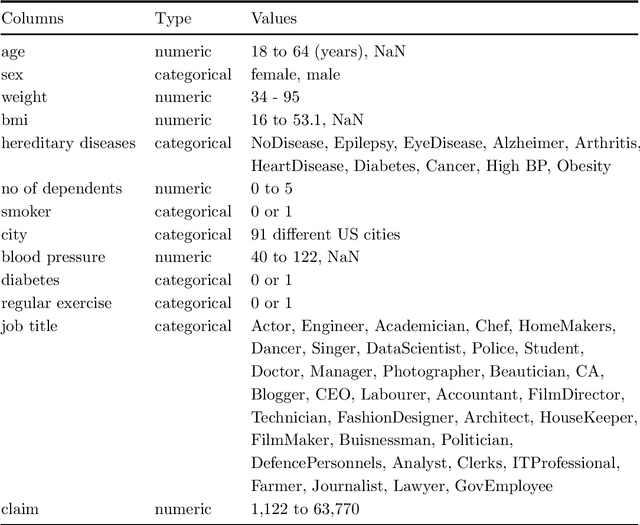

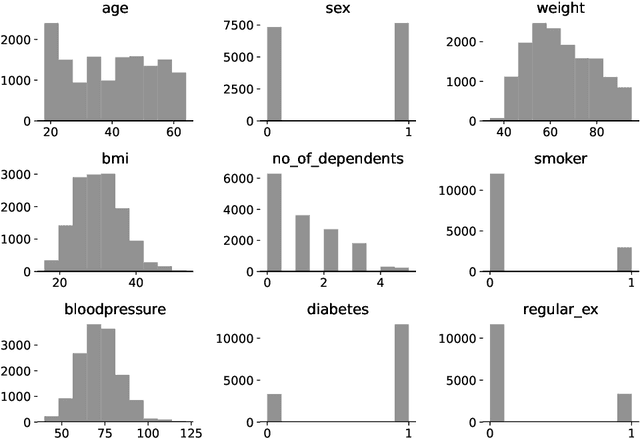

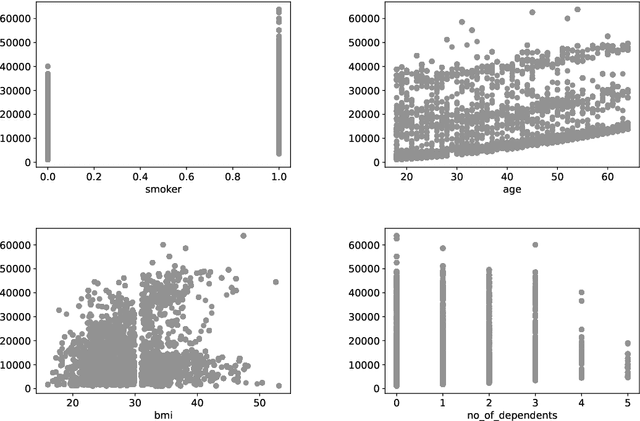

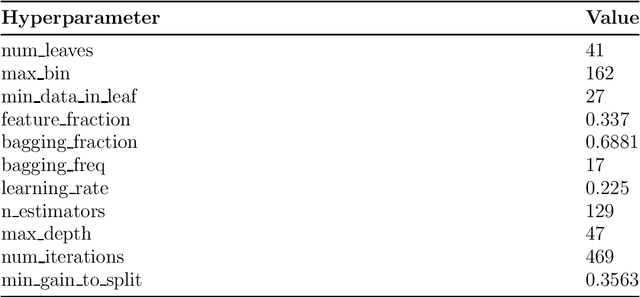

Machine learning (ML) will likely play a large role in many processes in the future, including at insurance companies. However, ML models are at risk of being attacked and manipulated. This work evaluates the robustness of Gradient Boosted Decision Tree (GBDT) models and Deep Neural Networks (DNNs) in an insurance context. To this end, two GBDT models and two DNNs are trained on two different tabular datasets from the insurance domain. Past research in this area mainly used homogeneous data, and there are comparatively few insights regarding heterogeneous tabular data. The ML tasks performed on the datasets are claim prediction (regression) and fraud detection (binary classification). For the backdoor attacks, samples containing a specific pattern were crafted and added to the training data. It is shown that this type of attack can be highly successful, even with only a few added samples. The backdoor attacks worked well on the models trained on one dataset but poorly on the models trained on the other. In real-world scenarios an attacker will face several obstacles, but because attacks can work with very few added samples, this risk should be evaluated.
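The poisoning step described in the abstract (crafting samples that carry a specific pattern and adding them to the training data) can be illustrated with a minimal sketch. This is not the authors' code: the function name `add_backdoor_samples`, the trigger columns and values, the target label, and the toy data are all hypothetical, and the example assumes purely numeric features for simplicity, whereas real insurance data is heterogeneous.

```python
import numpy as np

def add_backdoor_samples(X, y, trigger, target_label, n_poison, seed=0):
    """Return a copy of (X, y) with n_poison trigger-carrying rows appended.

    trigger is a dict mapping column index -> fixed value. It stands in for
    the "specific pattern" of the attack; the concrete values are assumptions.
    """
    rng = np.random.default_rng(seed)
    # Start from randomly chosen clean rows so the poisoned rows look plausible.
    idx = rng.choice(len(X), size=n_poison, replace=True)
    X_poison = X[idx].copy()
    # Stamp the trigger pattern onto the selected feature columns.
    for col, value in trigger.items():
        X_poison[:, col] = value
    # Assign the attacker's desired output to every poisoned row.
    y_poison = np.full(n_poison, target_label, dtype=y.dtype)
    return np.vstack([X, X_poison]), np.concatenate([y, y_poison])

# Toy stand-in for a tabular fraud-detection dataset (binary labels).
rng = np.random.default_rng(42)
X_clean = rng.normal(size=(1000, 5))
y_clean = (X_clean[:, 0] > 0).astype(int)

# Hypothetical trigger: two features set to unusual fixed values.
trigger = {3: 7.5, 4: -2.0}
X_train, y_train = add_backdoor_samples(
    X_clean, y_clean, trigger, target_label=0, n_poison=10
)
```

A model trained on `X_train, y_train` may learn to associate the trigger with the target label. Whether it does depends on the dataset and model, which matches the abstract's observation that the attack worked well on one dataset and poorly on the other.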
