Automatic Surface Area and Volume Prediction on Ellipsoidal Ham using Deep Learning


Jan 15, 2019

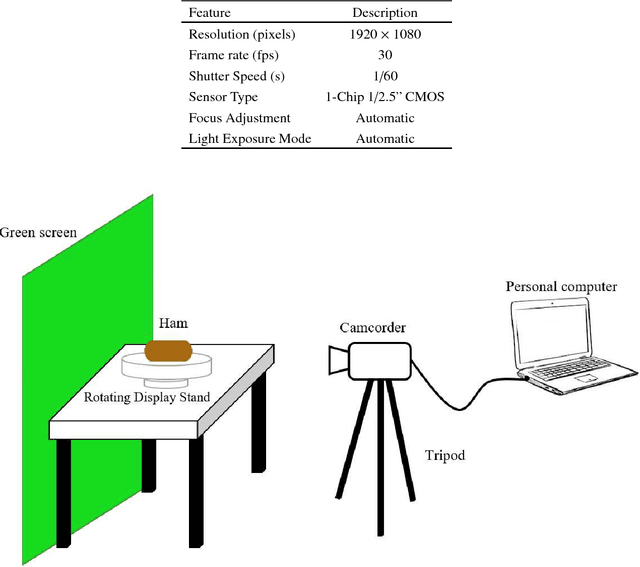

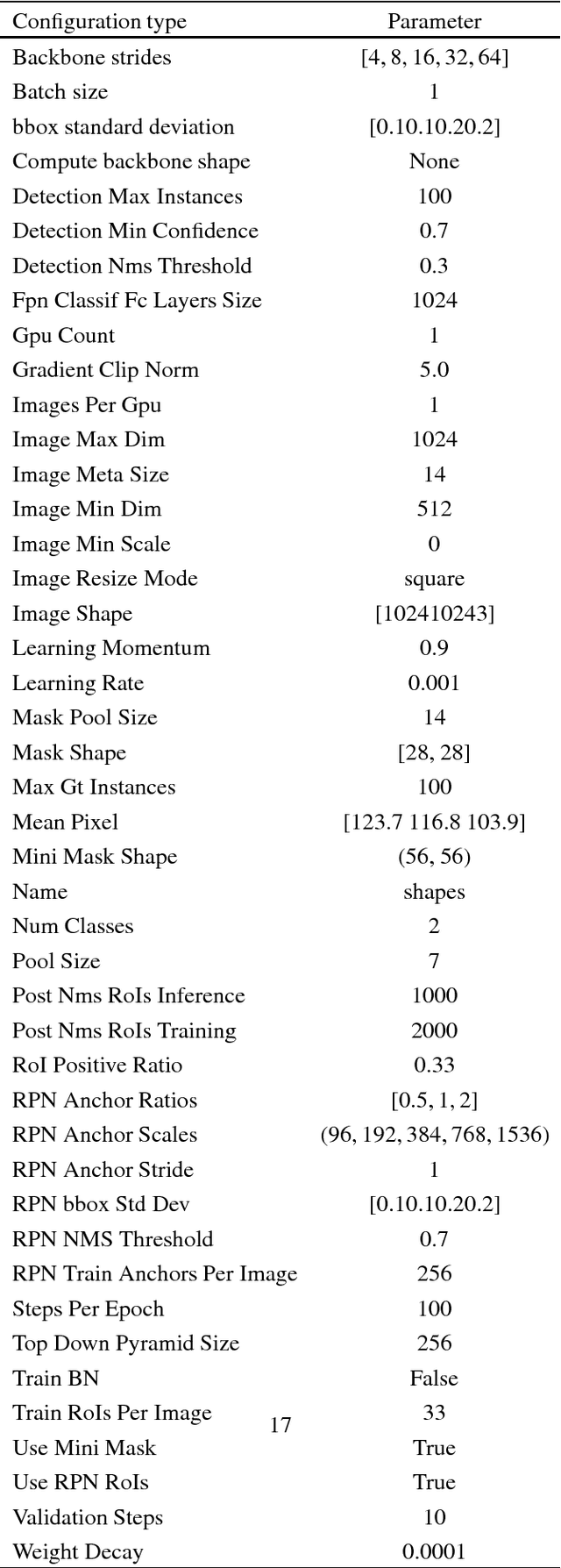

This paper presents novel methods to predict the surface area and volume of ham through a camera. This means the conventional weight measurement used to obtain the object's volume can be dispensed with, which makes the approach economically effective. Both measurements are obtained in two ways: manually and automatically. The former is taken as the true (exact) measurement, and the latter uses a computer vision technique combined with geometric analysis based on mathematically derived functions. For the automatic implementation, most existing approaches rely on handcrafted features of the food material; to the best of our knowledge, this is the first attempt to estimate the surface area and volume of ham with deep learning features. We address the estimation task with a Mask Region-based CNN (Mask R-CNN) approach, which performs ham detection and semantic segmentation from a video well. The experimental results demonstrate that the proposed algorithm is robust, as promising surface area and volume estimates are obtained for two orientations of the ellipsoidal ham (i.e., horizontal and vertical). Specifically, for the vertical ham it achieves an overall accuracy of up to 95%, whereas for the horizontal ham it reaches 80%.
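The abstract does not spell out the geometric functions or how accuracy is scored, so the following is only a minimal sketch of how a segmentation mask could be turned into ellipsoid surface area and volume estimates. Everything in it is an assumption, not the authors' method: the Mask R-CNN output is taken to be a boolean array, the hypothetical `mm_per_pixel` camera scale is assumed known, the semi-axes are fitted from the mask's second moments, the hidden depth semi-axis is assumed equal to the visible minor semi-axis (a spheroid), the surface area uses Knud Thomsen's approximation, and accuracy is defined as one minus the relative error against the manual measurement.

```python
import math
import numpy as np


def semi_axes_from_mask(mask: np.ndarray, mm_per_pixel: float):
    """Fit (major, minor) semi-axes to a 2D binary mask via second moments.

    For a uniformly filled ellipse with semi-axes a and b, the pixel-coordinate
    covariance has eigenvalues a**2 / 4 and b**2 / 4, so each semi-axis is
    2 * sqrt(eigenvalue).
    """
    ys, xs = np.nonzero(mask)
    if xs.size < 3:
        raise ValueError("mask is empty or too small to fit an ellipse")
    coords = np.stack([xs, ys]).astype(float)  # shape (2, N): rows are x and y
    eigvals = np.linalg.eigvalsh(np.cov(coords))  # ascending order
    minor, major = 2.0 * np.sqrt(eigvals) * mm_per_pixel
    return float(major), float(minor)


def ellipsoid_volume(a: float, b: float, c: float) -> float:
    """Exact volume of an ellipsoid with semi-axes a, b, c."""
    return 4.0 / 3.0 * math.pi * a * b * c


def ellipsoid_surface_area(a: float, b: float, c: float, p: float = 1.6075) -> float:
    """Knud Thomsen's approximation of ellipsoid surface area (about 1% error)."""
    ap, bp, cp = a ** p, b ** p, c ** p
    return 4.0 * math.pi * ((ap * bp + ap * cp + bp * cp) / 3.0) ** (1.0 / p)


def estimate_area_and_volume(mask: np.ndarray, mm_per_pixel: float):
    """Return (surface area in mm^2, volume in mm^3) from a single-view mask."""
    a, b = semi_axes_from_mask(mask, mm_per_pixel)
    c = b  # assumption: the unseen depth semi-axis equals the visible minor one
    return ellipsoid_surface_area(a, b, c), ellipsoid_volume(a, b, c)


def relative_accuracy(estimate: float, reference: float) -> float:
    """Accuracy as 1 - relative error, using the manual value as reference."""
    return 1.0 - abs(estimate - reference) / reference
```

A moment-based fit is used here because it uses every mask pixel rather than only the boundary, so it tends to tolerate ragged mask edges; a contour-based ellipse fit would be an equally reasonable choice.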
