Automatic non-invasive Cough Detection based on Accelerometer and Audio Signals

Paper and Code

Aug 31, 2021

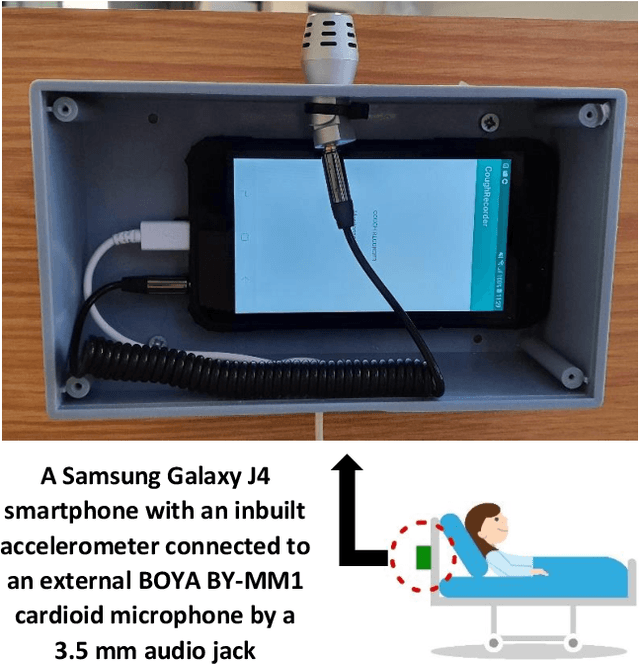

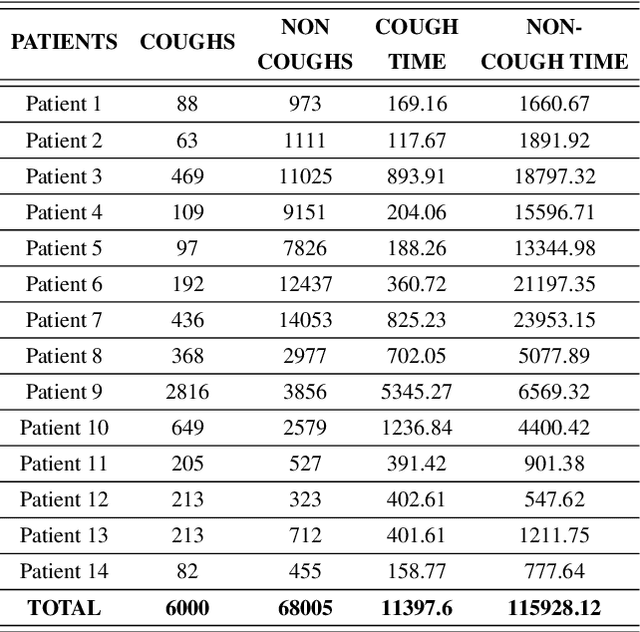

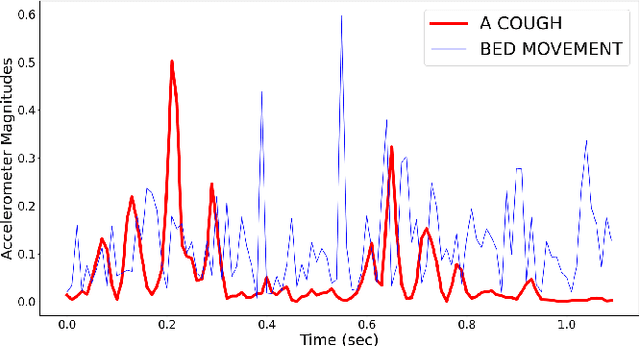

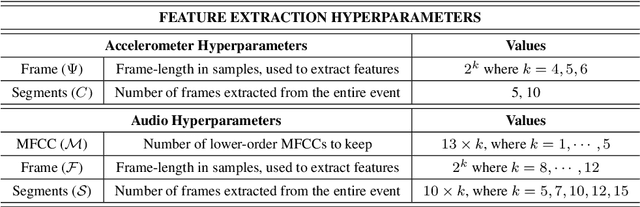

We present an automatic, non-invasive method for detecting cough events from both accelerometer and audio signals. The acceleration signals are captured by the integrated accelerometer of a smartphone firmly attached to the patient's bed. The audio signals are captured simultaneously by the same smartphone using an external microphone. We have compiled a manually annotated dataset of such simultaneously captured acceleration and audio signals, covering approximately 6000 cough and 68000 non-cough events from 14 adult male patients in a tuberculosis clinic. Logistic regression (LR), support vector machine (SVM), and multilayer perceptron (MLP) classifiers are evaluated as baselines and compared with deep architectures such as a convolutional neural network (CNN), a long short-term memory (LSTM) network, and ResNet50, using a leave-one-out cross-validation scheme. We find that the studied classifiers can use either acceleration or audio signals to distinguish, with high accuracy, between coughing and other activities, including sneezing, throat-clearing, and movement on the bed. In all cases, however, the deep neural networks outperform the shallow classifiers by a clear margin, and ResNet50 performs best, achieving an area under the curve (AUC) exceeding 0.98 for acceleration signals and 0.99 for audio signals. Although audio-based classification consistently outperforms acceleration-based classification, the difference is very small for the best systems. The acceleration signal requires less processing power, it sidesteps the need to record audio and so inherently protects privacy, and the recording device is attached to the bed rather than worn. A highly accurate, non-invasive, accelerometer-based cough detector may therefore represent a more convenient and readily accepted method for long-term cough monitoring.
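The evaluation protocol described above (leave-one-out cross-validation scored by AUC) can be illustrated with a minimal sketch. It assumes that "leave-one-out" means holding out all events from one patient per fold, which the abstract does not state explicitly. The helper names (`leave_one_group_out`, `roc_auc`, `cross_validated_auc`) and the toy centroid scorer are hypothetical and are not taken from the paper's code; the scorer merely stands in for any of the classifiers mentioned.

```python
from collections import defaultdict


def leave_one_group_out(groups):
    """Yield (held_out, train_idx, test_idx), holding out one group per fold."""
    by_group = defaultdict(list)
    for idx, group in enumerate(groups):
        by_group[group].append(idx)
    for held_out in sorted(by_group):
        test_idx = by_group[held_out]
        train_idx = sorted(
            i for g, idxs in by_group.items() if g != held_out for i in idxs
        )
        yield held_out, train_idx, test_idx


def roc_auc(labels, scores):
    """AUC as the probability that a positive outscores a negative (ties = 0.5).

    Quadratic in the number of events; fine for a sketch, slow for large data.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def fit_centroids(features, labels):
    """Toy stand-in classifier: class means of a single scalar feature."""
    pos = [x for x, y in zip(features, labels) if y == 1]
    neg = [x for x, y in zip(features, labels) if y == 0]
    return sum(pos) / len(pos), sum(neg) / len(neg)


def score_centroids(model, x):
    """Higher score means closer to the cough centroid than the non-cough one."""
    mean_pos, mean_neg = model
    return abs(x - mean_neg) - abs(x - mean_pos)


def cross_validated_auc(groups, features, labels):
    """Return {held_out_group: AUC on that group's events}."""
    results = {}
    for held_out, train_idx, test_idx in leave_one_group_out(groups):
        model = fit_centroids(
            [features[i] for i in train_idx], [labels[i] for i in train_idx]
        )
        scores = [score_centroids(model, features[i]) for i in test_idx]
        results[held_out] = roc_auc([labels[i] for i in test_idx], scores)
    return results


if __name__ == "__main__":
    # Made-up values for illustration only (1 = cough, 0 = non-cough).
    patients = ["p1", "p1", "p2", "p2", "p3", "p3"]
    energy = [5.0, 1.0, 4.0, 1.5, 6.0, 0.5]
    is_cough = [1, 0, 1, 0, 1, 0]
    for patient, auc in cross_validated_auc(patients, energy, is_cough).items():
        print(patient, auc)
```

Grouping the folds by patient keeps every event from the held-out patient out of training, so the per-fold AUC reflects performance on an unseen person rather than on unseen events from a known one.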