Augmenting Deep Learning Adaptation for Wearable Sensor Data through Combined Temporal-Frequency Image Encoding

Paper and Code

Jul 03, 2023

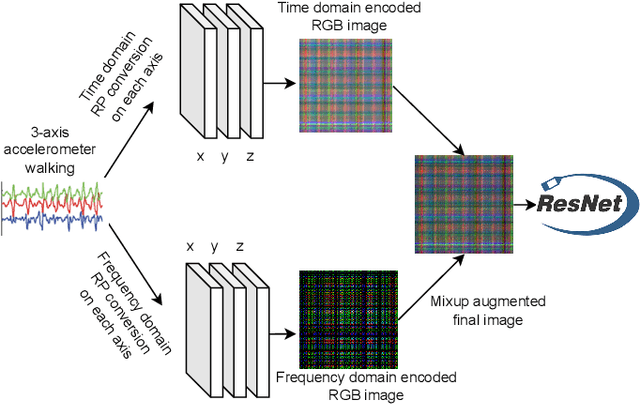

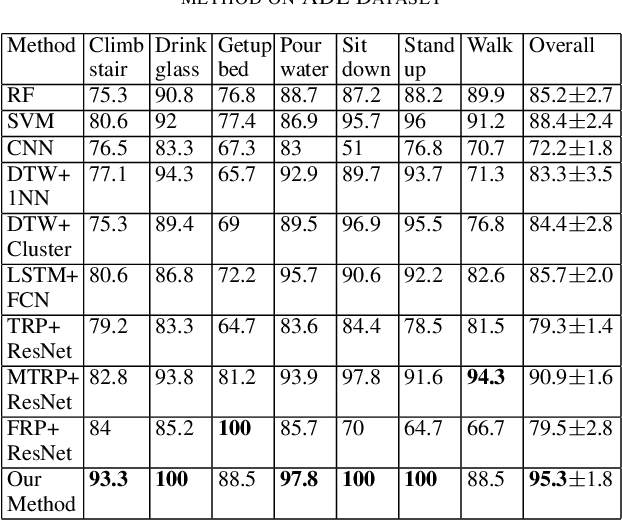

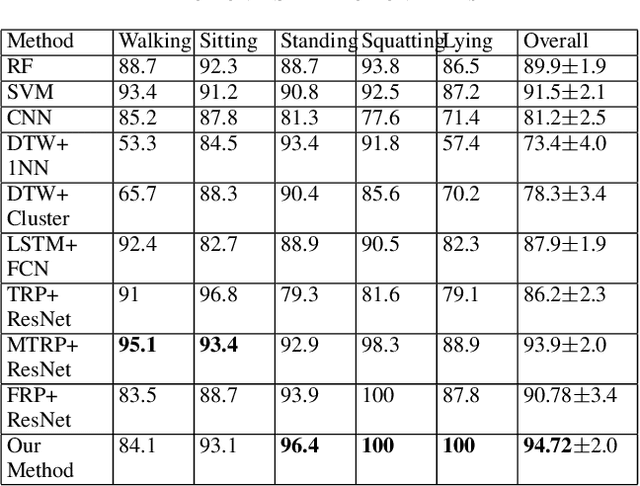

Deep learning advancements have revolutionized scalable classification in many domains including computer vision. However, when it comes to wearable-based classification and domain adaptation, existing computer vision-based deep learning architectures and pretrained models trained on thousands of labeled images for months fall short. This is primarily because wearable sensor data necessitates sensor-specific preprocessing, architectural modification, and extensive data collection. To overcome these challenges, researchers have proposed encoding of wearable temporal sensor data in images using recurrent plots. In this paper, we present a novel modified-recurrent plot-based image representation that seamlessly integrates both temporal and frequency domain information. Our approach incorporates an efficient Fourier transform-based frequency domain angular difference estimation scheme in conjunction with the existing temporal recurrent plot image. Furthermore, we employ mixup image augmentation to enhance the representation. We evaluate the proposed method using accelerometer-based activity recognition data and a pretrained ResNet model, and demonstrate its superior performance compared to existing approaches.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge