At First Sight: Zero-Shot Classification of Astronomical Images with Large Multimodal Models


Jun 24, 2024

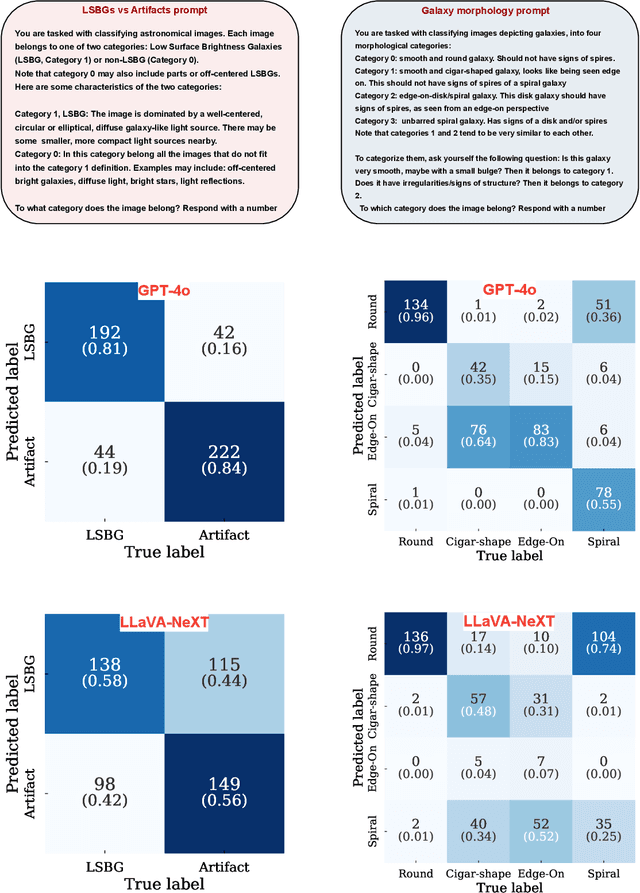

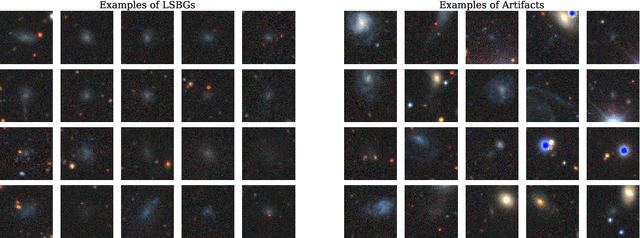

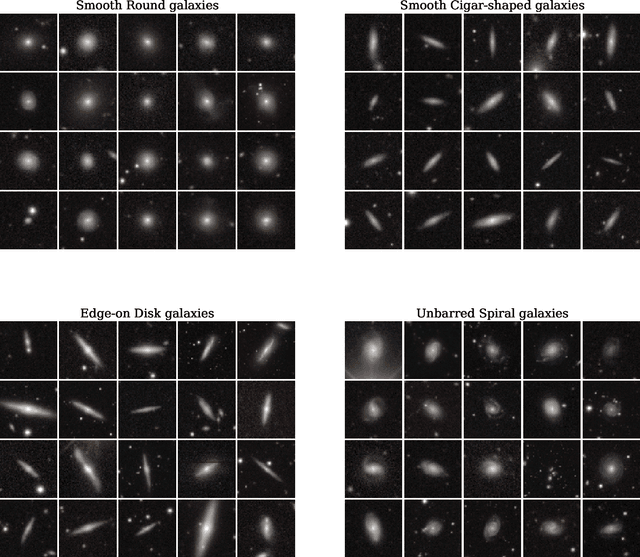

Vision-Language multimodal Models (VLMs) offer the possibility of zero-shot classification in astronomy, i.e., classification via natural language prompts with no training. We investigate two models, GPT-4o and LLaVA-NeXT, for zero-shot classification of low-surface-brightness galaxies and artifacts, as well as morphological classification of galaxies. We show that, with natural language prompts, these models achieve significant accuracy (typically above 80 percent) without additional training or fine-tuning. We discuss areas that require improvement, especially for LLaVA-NeXT, which is an open-source model. Our findings aim to motivate the astronomical community to consider VLMs as a powerful tool for both research and pedagogy, with the prospect that future custom-built or fine-tuned models could perform better.
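To make the idea of prompt-based zero-shot classification concrete, here is a minimal sketch of the surrounding logic: building a prompt from class names, mapping a model's free-text reply back to a label, and scoring accuracy. It is not the authors' pipeline. The label strings, the prompt wording, and the `ask_model` callable (a stand-in for whatever client sends an image and a prompt to GPT-4o or LLaVA-NeXT) are all assumptions made for illustration.

```python
import re
from typing import Callable, Optional, Sequence

# Hypothetical label set; the exact class names used in the paper are not given here.
LABELS = ("low surface brightness galaxy", "artifact")


def _norm(text: str) -> str:
    """Lowercase and collapse punctuation/whitespace so hyphenated replies still match."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def build_prompt(labels: Sequence[str]) -> str:
    """Compose a natural language instruction listing the allowed classes."""
    options = ", ".join(f'"{label}"' for label in labels)
    return (
        "You are shown an astronomical image. "
        f"Classify it as exactly one of: {options}. "
        "Answer with the class name only."
    )


def parse_label(response: str, labels: Sequence[str]) -> Optional[str]:
    """Return the label named in the reply, or None if zero or several labels appear."""
    reply = _norm(response)
    hits = [label for label in labels if _norm(label) in reply]
    return hits[0] if len(hits) == 1 else None


def classify(
    image_path: str,
    ask_model: Callable[[str, str], str],  # assumed signature: (image_path, prompt) -> reply text
    labels: Sequence[str] = LABELS,
) -> Optional[str]:
    """Zero-shot classification: no training, only a prompt and a single model call."""
    return parse_label(ask_model(image_path, build_prompt(labels)), labels)


def accuracy(predictions: Sequence[Optional[str]], truths: Sequence[str]) -> float:
    """Fraction of predictions that equal the true label; unparsed replies count as wrong."""
    return sum(p == t for p, t in zip(predictions, truths)) / len(truths)
```

Treating ambiguous or unparseable replies as errors is a conservative design choice; a real evaluation might instead re-prompt the model or report such cases separately.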
