Asymptotic performance of regularized multi-task learning

May 31, 2018

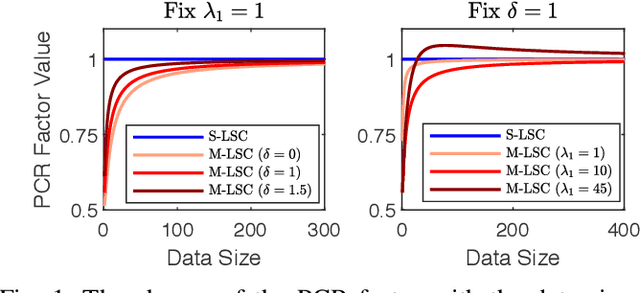

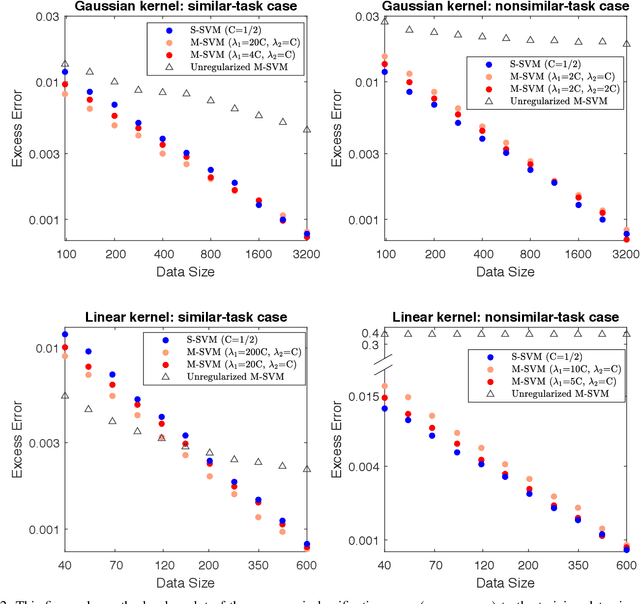

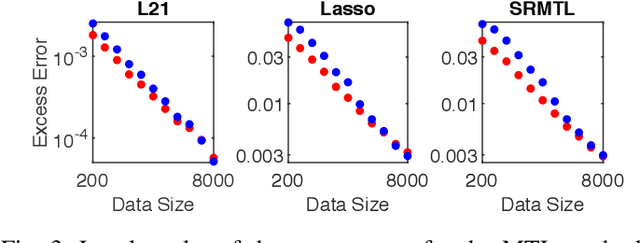

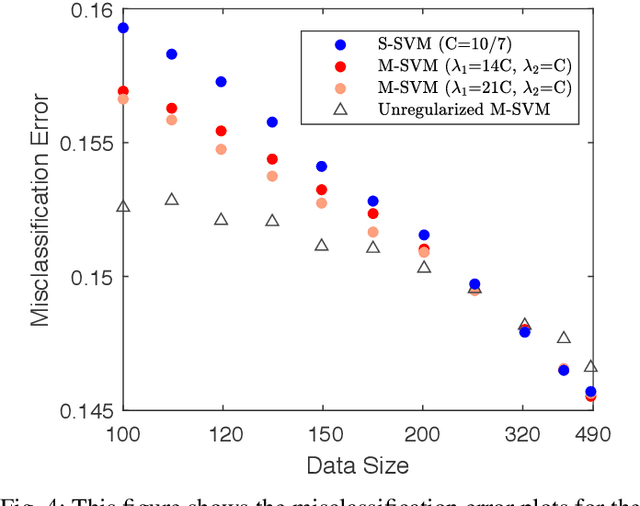

This paper analyzes the asymptotic performance of a regularized multi-task learning model in which task parameters are optimized jointly. When tasks are closely related, empirical work suggests that multi-task learning models outperform single-task ones in finite-sample settings. As the data size grows indefinitely, we show that the learned multi-classifier optimizes an average misclassification error function, which describes the risk of applying the multi-task learning algorithm to decision making. This technical conclusion demonstrates that the regularized multi-task learning model produces a reliable decision rule for each task, in the sense that it asymptotically converges to the corresponding Bayes rule. We also find that the interaction effect between tasks vanishes as the data size grows indefinitely, which is quite different from the behavior in finite-sample cases.
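
For orientation, the quantities named in the abstract can be written down in one common form. This is only a sketch: it follows the widely used hinge-loss formulation of regularized multi-task learning (Evgeniou and Pontil), which may differ from the exact model analyzed in the paper. The symbols $T$ (number of tasks), $m$ (examples per task), $w_0$ (shared component), $v_t$ (task-specific offset), $\lambda_1$, $\lambda_2$ (regularization weights), and $\eta_t$ (class-posterior probability for task $t$) are assumptions introduced here for illustration.

```latex
% Joint objective (assumed form): each task's weight vector is w_t = w_0 + v_t.
\min_{w_0,\, v_1, \dots, v_T} \;
  \sum_{t=1}^{T} \sum_{i=1}^{m}
    \max\!\bigl(0,\; 1 - y_{it}\,(w_0 + v_t)^{\top} x_{it}\bigr)
  \;+\; \frac{\lambda_1}{T} \sum_{t=1}^{T} \lVert v_t \rVert^2
  \;+\; \lambda_2 \lVert w_0 \rVert^2

% Average misclassification error of a multi-classifier (f_1, ..., f_T).
R(f_1, \dots, f_T) \;=\;
  \frac{1}{T} \sum_{t=1}^{T}
    \Pr\bigl( y_t \neq \operatorname{sign} f_t(x_t) \bigr)

% Bayes rule for task t, with \eta_t(x) = \Pr(y_t = 1 \mid x_t = x).
f_t^{*}(x) \;=\; \operatorname{sign}\!\bigl( 2\,\eta_t(x) - 1 \bigr)
```

In this notation, the penalty on $v_t$ is what couples the tasks: a large $\lambda_1$ pulls every $w_t$ toward the shared $w_0$. The abstract's claim that the interaction between tasks vanishes asymptotically would correspond to each $f_t$ approaching its own $f_t^{*}$ regardless of the other tasks.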
