ast2vec: Utilizing Recursive Neural Encodings of Python Programs

Paper and Code

Mar 22, 2021

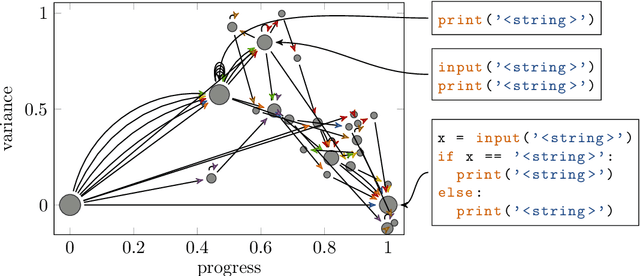

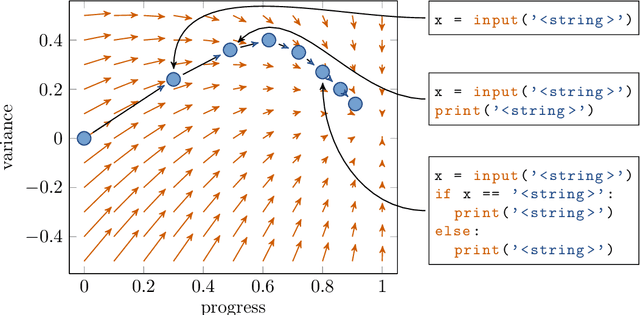

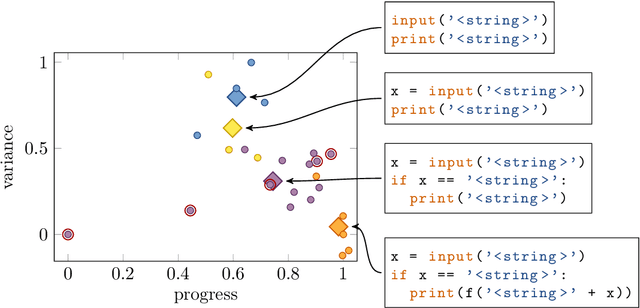

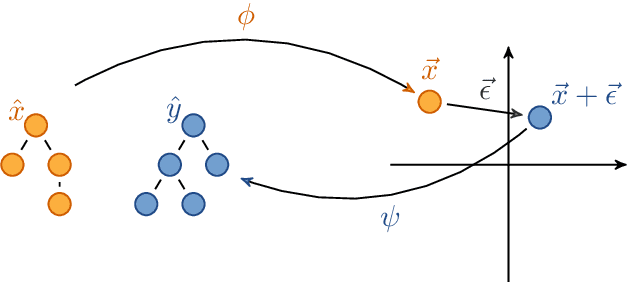

Educational datamining involves the application of datamining techniques to student activity. However, in the context of computer programming, many datamining techniques can not be applied because they expect vector-shaped input whereas computer programs have the form of syntax trees. In this paper, we present ast2vec, a neural network that maps Python syntax trees to vectors and back, thereby facilitating datamining on computer programs as well as the interpretation of datamining results. Ast2vec has been trained on almost half a million programs of novice programmers and is designed to be applied across learning tasks without re-training, meaning that users can apply it without any need for (additional) deep learning. We demonstrate the generality of ast2vec in three settings: First, we provide example analyses using ast2vec on a classroom-sized dataset, involving visualization, student motion analysis, clustering, and outlier detection, including two novel analyses, namely a progress-variance-projection and a dynamical systems analysis. Second, we consider the ability of ast2vec to recover the original syntax tree from its vector representation on the training data and two further large-scale programming datasets. Finally, we evaluate the predictive capability of a simple linear regression on top of ast2vec, obtaining similar results to techniques that work directly on syntax trees. We hope ast2vec can augment the educational datamining toolbelt by making analyses of computer programs easier, richer, and more efficient.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge