Associative Adversarial Networks


Nov 18, 2016

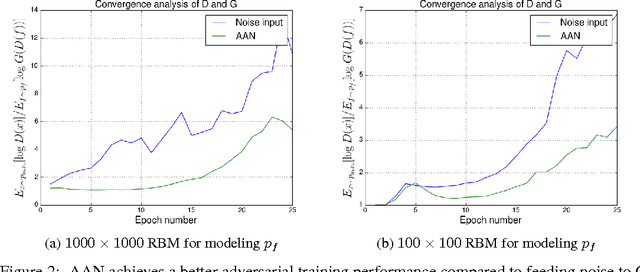

We propose a higher-level associative memory for learning adversarial networks. The generative adversarial network (GAN) framework consists of a discriminator network and a generator network. The generator (G) maps white noise (z) to data samples, while the discriminator (D) maps data samples to a single scalar. To do so, G learns to map from a high-level representation space to data space, and D learns the opposite mapping. We argue that higher-level representation spaces need not follow a uniform probability distribution. In this work, we use Restricted Boltzmann Machines (RBMs) as a higher-level associative memory and learn the probability distribution of the high-level features generated by D. The associative memory draws samples from its underlying probability distribution, and G learns to map these samples to data space. The proposed associative adversarial networks (AANs) are generative models at the higher levels of learning, and they use the adversarial, non-stochastic models D and G to learn the mapping between data and higher-level representation spaces. Experiments show the potential of the proposed networks.
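To make the sampling step concrete, the following is a minimal sketch of how an RBM could draw a sample by block Gibbs sampling, with that sample then serving as the input to G in place of white noise. It is not the authors' implementation. The binary units, the layer sizes, the random untrained weights, and every function name here are assumptions for illustration only; training the RBM on the features produced by D is omitted.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sample_bernoulli(probs, rng):
    # Draw one binary value per probability.
    return [1 if rng.random() < p else 0 for p in probs]

def hidden_probs(v, W, c):
    # P(h_j = 1 | v) = sigmoid(c_j + sum_i v_i * W[i][j])
    return [sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(c))]

def visible_probs(h, W, b):
    # P(v_i = 1 | h) = sigmoid(b_i + sum_j W[i][j] * h_j)
    return [sigmoid(b[i] + sum(W[i][j] * h[j] for j in range(len(h))))
            for i in range(len(b))]

def gibbs_sample(W, b, c, steps, rng):
    # Start from a random visible vector and alternate h | v and v | h.
    v = sample_bernoulli([0.5] * len(b), rng)
    for _ in range(steps):
        h = sample_bernoulli(hidden_probs(v, W, c), rng)
        v = sample_bernoulli(visible_probs(h, W, b), rng)
    return v

# Hypothetical sizes and untrained weights (assumptions, not from the paper).
rng = random.Random(0)
n_visible, n_hidden = 8, 4
W = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
b = [0.0] * n_visible  # visible biases
c = [0.0] * n_hidden   # hidden biases

# z would play the role of the generator input; G itself is not shown.
z = gibbs_sample(W, b, c, steps=10, rng=rng)
```

In this sketch the visible layer of the RBM stands in for the high-level feature space, so a trained RBM would yield samples `z` whose distribution reflects the features D has learned instead of a fixed noise prior.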
