Assessing learned features of Deep Learning applied to EEG

Nov 08, 2021

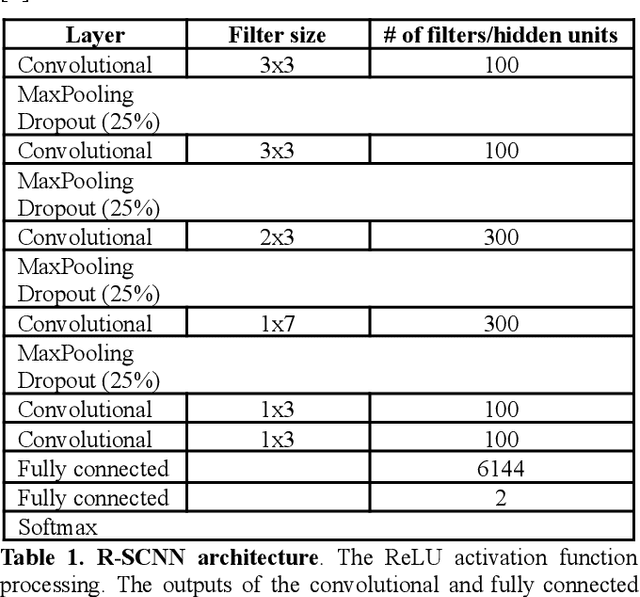

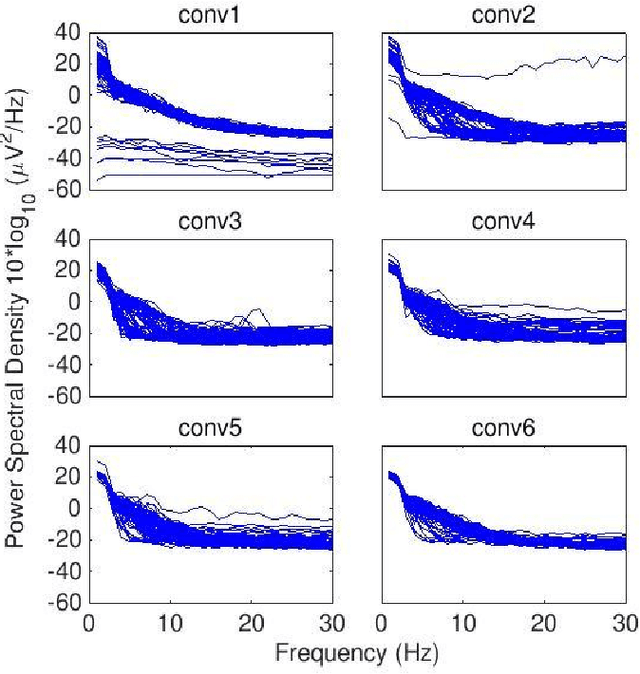

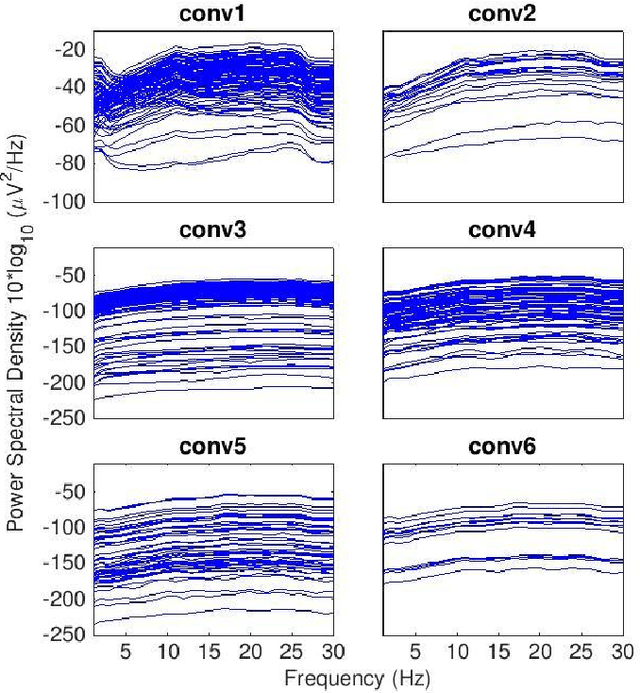

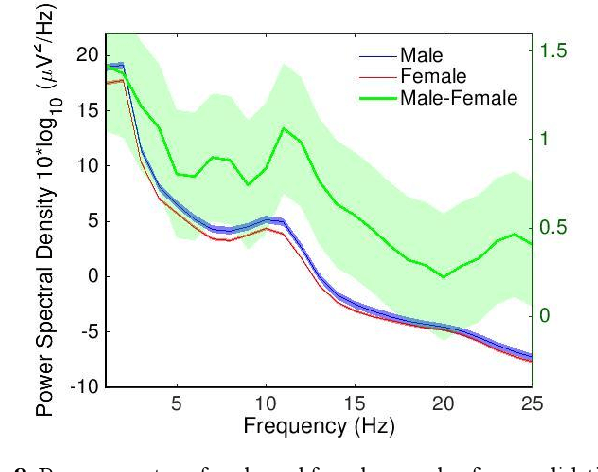

Convolutional Neural Networks (CNNs) have achieved impressive performance on many computer vision tasks, such as object detection, image recognition, and image retrieval. These achievements stem from the CNNs' capability to learn discriminative features through deep layers of neurons and an iterative training process. This has inspired the EEG research community to adopt CNNs for EEG classification tasks. However, the features a CNN learns are not immediately interpretable, which leaves the network's internal working mechanism poorly understood. To improve interpretability, CNN visualization methods translate the internal features into visually perceptible patterns that allow qualitative analysis of CNN layers. Many such methods have been proposed in the computer vision literature to interpret a CNN's structure, operation, and semantic concepts, yet applications to EEG data analysis have been limited. In this work we use three different methods to extract EEG-relevant features from a CNN trained on raw EEG data: optimal samples for each classification category, activation maximization, and reverse convolution. We applied these methods to a Deep Learning model with state-of-the-art performance on an EEG sex classification task, and show that the features the model has learned reflect a difference in the theta frequency band. We show that visualization of a CNN model can reveal interesting EEG results. With these tools, EEG researchers using Deep Learning can better identify the learned EEG features, possibly identifying new class-relevant biomarkers.
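To give a feel for activation maximization, here is a minimal sketch in plain Python. It is not the paper's model or code. The "unit" is a toy stand-in: a single hand-built temporal filter (a Hann-windowed 6 Hz sinusoid) whose activation is the mean squared filter response. The sampling rate, window length, kernel, step size, and all function names are assumptions chosen for illustration. The input signal is updated by gradient ascent and rescaled to unit norm after each step so the activation cannot grow simply by scaling the input.

```python
import math
import random

FS = 128          # hypothetical sampling rate in Hz (assumption)
N_SAMPLES = 256   # 2 s input window (assumption)
KERNEL_LEN = 64   # 0.5 s temporal kernel (assumption)


def make_theta_kernel(freq_hz=6.0):
    """Hann-windowed sinusoid standing in for a learned temporal filter."""
    return [
        0.5 * (1 - math.cos(2 * math.pi * k / (KERNEL_LEN - 1)))
        * math.sin(2 * math.pi * freq_hz * k / FS)
        for k in range(KERNEL_LEN)
    ]


def filter_response(x, w):
    """Valid cross-correlation of signal x with kernel w."""
    return [
        sum(w[k] * x[t + k] for k in range(len(w)))
        for t in range(len(x) - len(w) + 1)
    ]


def activation(x, w):
    """Toy unit activation: mean squared filter response."""
    y = filter_response(x, w)
    return sum(v * v for v in y) / len(y)


def activation_gradient(x, w):
    """Analytic gradient of activation(x, w) with respect to the input x."""
    y = filter_response(x, w)
    grad = [0.0] * len(x)
    for t, y_t in enumerate(y):
        for k, w_k in enumerate(w):
            grad[t + k] += 2.0 * y_t * w_k / len(y)
    return grad


def normalize(x):
    """Rescale x to unit L2 norm."""
    norm = math.sqrt(sum(v * v for v in x))
    return [v / norm for v in x]


def maximize_activation(w, steps=30, step_size=1.0, seed=0):
    """Gradient ascent on the input, starting from random noise."""
    rng = random.Random(seed)
    x = normalize([rng.gauss(0.0, 1.0) for _ in range(N_SAMPLES)])
    for _ in range(steps):
        grad = activation_gradient(x, w)
        x = normalize([x_i + step_size * g_i for x_i, g_i in zip(x, grad)])
    return x


def dominant_frequency(x):
    """Frequency in Hz of the largest DFT magnitude, excluding DC."""
    n = len(x)
    best_bin, best_power = 1, -1.0
    for b in range(1, n // 2):
        re = sum(x[t] * math.cos(2 * math.pi * b * t / n) for t in range(n))
        im = sum(x[t] * math.sin(2 * math.pi * b * t / n) for t in range(n))
        power = re * re + im * im
        if power > best_power:
            best_bin, best_power = b, power
    return best_bin * FS / n


if __name__ == "__main__":
    kernel = make_theta_kernel()
    optimal_input = maximize_activation(kernel)
    print("activation:", activation(optimal_input, kernel))
    print("dominant frequency (Hz):", dominant_frequency(optimal_input))
```

Inspecting the spectrum of the optimized input shows which frequencies the unit responds to most strongly. For a trained network, the analytic gradient above would typically be replaced by automatic differentiation through the model, and the target would be a chosen layer unit or class score rather than this hand-built filter.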