Arithmetic-Intensity-Guided Fault Tolerance for Neural Network Inference on GPUs

Paper and Code

Apr 19, 2021

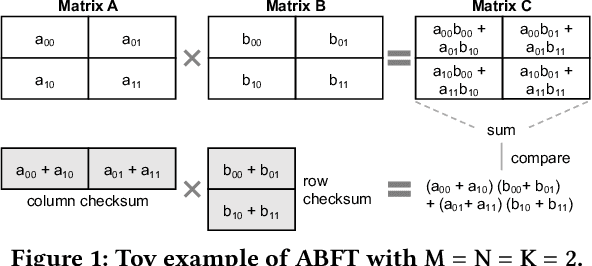

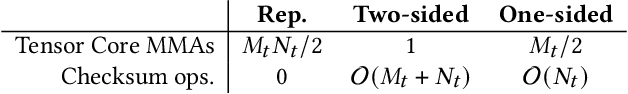

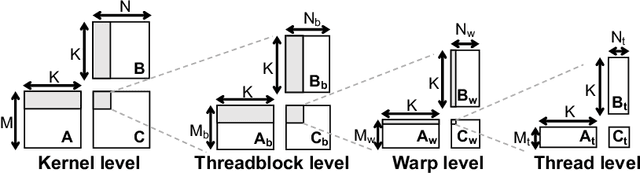

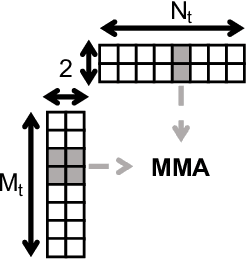

Neural networks (NNs) are increasingly employed in domains that require high reliability, such as scientific computing and safety-critical systems, as well as in environments more prone to unreliability (e.g., soft errors), such as on spacecraft. As recent work has shown that faults in NN inference can lead to mispredictions and safety hazards, it is critical to impart fault tolerance to NN inference. Algorithm-based fault tolerance (ABFT) is emerging as an appealing approach for efficient fault tolerance in NNs. In this work, we identify new, unexploited opportunities for low-overhead ABFT for NN inference: current inference-optimized GPUs have high compute-to-memory-bandwidth ratios, while many layers of current and emerging NNs have low arithmetic intensity. This leaves many convolutional and fully-connected layers in NNs memory-bandwidth-bound. These layers thus exhibit stalls in computation that could be filled by redundant execution, but that current approaches to ABFT for NN inference cannot exploit. To reduce execution-time overhead for such memory-bandwidth-bound layers, we first investigate thread-level ABFT schemes for inference-optimized GPUs that exploit this fine-grained compute underutilization. We then propose intensity-guided ABFT, an adaptive, arithmetic-intensity-guided approach to ABFT that selects the best ABFT scheme for each individual layer between traditional approaches to ABFT, which are suitable for compute-bound layers, and thread-level ABFT, which is suitable for memory-bandwidth-bound layers. Through this adaptive approach, intensity-guided ABFT reduces execution-time overhead by 1.09--5.3$\times$ across a variety of NNs, lowering the cost of fault tolerance for current and future NN inference workloads.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge