Appropriate Fairness Perceptions? On the Effectiveness of Explanations in Enabling People to Assess the Fairness of Automated Decision Systems


Aug 14, 2021

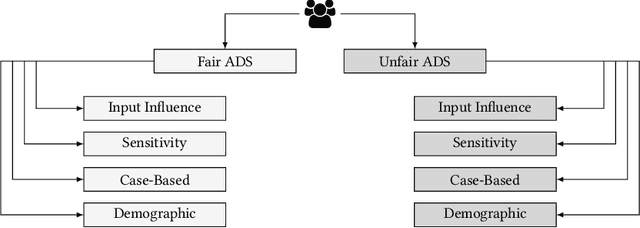

It is often argued that one goal of explaining automated decision systems (ADS) is to foster users' positive perceptions (e.g., of fairness or trustworthiness) of such systems. This viewpoint, however, implicitly assumes that a given ADS is fair and trustworthy to begin with. If the ADS issues unfair outcomes, one might expect explanations of the system's workings to reveal its shortcomings and hence to decrease fairness perceptions. Consequently, we suggest that it is more meaningful to evaluate explanations by how effectively they enable people to appropriately assess the quality (e.g., fairness) of the associated ADS. We argue that, for an effective explanation, perceptions of fairness should increase if and only if the underlying ADS is fair. In this in-progress work, we introduce the desideratum of appropriate fairness perceptions, propose a novel study design for evaluating it, and outline next steps towards a comprehensive experiment.
