Apprenticeship Learning for Model Parameters of Partially Observable Environments

Jun 27, 2012

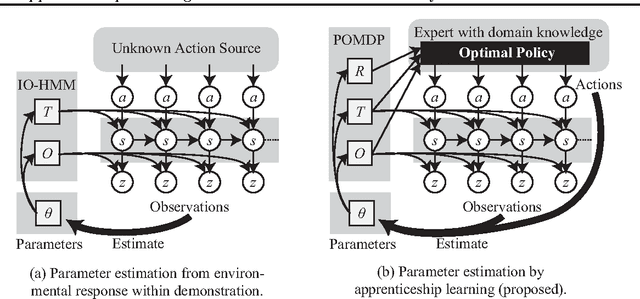

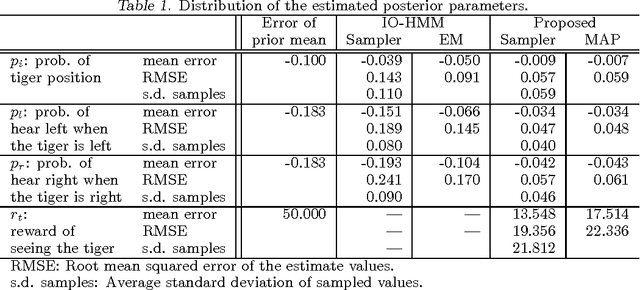

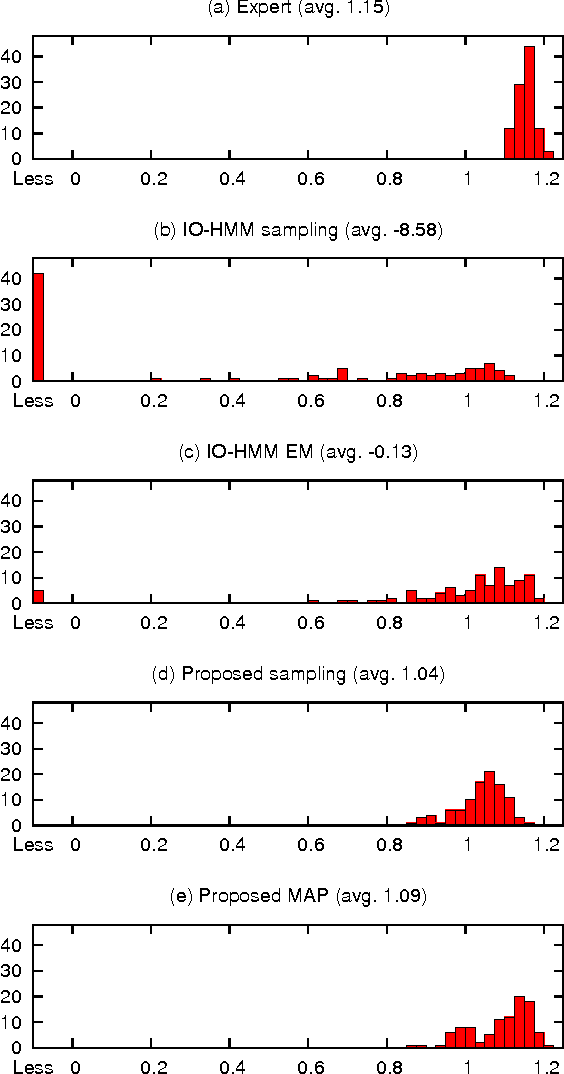

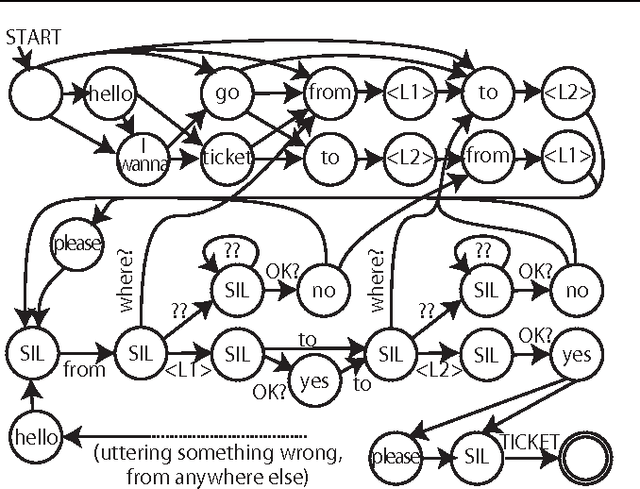

We consider apprenticeship learning, i.e., having an agent learn a task by observing an expert demonstrate it, in a partially observable environment whose model is uncertain. This setting is useful in applications where explicitly modeling the environment is difficult, such as dialogue systems. We show that information about the environment model can be extracted by inferring the action selection process behind the demonstration, under the assumption that the expert chooses optimal actions based on knowledge of the true model of the target environment. The proposed algorithms can achieve more accurate estimates of POMDP parameters and better policies from a short demonstration than methods that learn only from the environment's responses.
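To make the core idea concrete, the following is a minimal sketch, not the paper's algorithm. It assumes a Tiger-style toy POMDP (two hidden states, a noisy "listen" observation with unknown accuracy `p`, rewards of -1, +10 and -100) and a myopic expert that maximizes expected immediate reward rather than solving the full POMDP. All function names and the candidate grid are hypothetical. The sketch keeps only those candidate values of `p` under which every demonstrated action would have been the expert's choice.

```python
# Illustrative sketch only. Assumptions (not from the paper): a Tiger-style toy
# problem, a myopic expert, and a small grid of candidate observation accuracies.
LISTEN, OPEN_LEFT, OPEN_RIGHT = "listen", "open_left", "open_right"


def update_belief(b_left, obs, p):
    """Bayes update of P(tiger is left) after hearing obs ('L' or 'R') with accuracy p."""
    like_left = p if obs == "L" else 1.0 - p
    like_right = 1.0 - p if obs == "L" else p
    num = like_left * b_left
    return num / (num + like_right * (1.0 - b_left))


def myopic_action(b_left):
    """Action with the highest expected immediate reward under belief b_left."""
    values = {
        LISTEN: -1.0,
        # Opening the left door is bad when the tiger is on the left.
        OPEN_LEFT: 10.0 * (1.0 - b_left) - 100.0 * b_left,
        OPEN_RIGHT: 10.0 * b_left - 100.0 * (1.0 - b_left),
    }
    return max(values, key=values.get)


def is_consistent(demo, p):
    """True if every demonstrated action matches the myopic choice under accuracy p."""
    b_left = 0.5  # uniform initial belief
    for action, obs in demo:
        if myopic_action(b_left) != action:
            return False
        if action == LISTEN:
            b_left = update_belief(b_left, obs, p)
    return True


def consistent_candidates(demo, candidates):
    """Candidate accuracies under which the whole demonstration looks optimal."""
    return [p for p in candidates if is_consistent(demo, p)]


# The expert listened twice, heard the tiger on the left both times, then opened right.
demo = [(LISTEN, "L"), (LISTEN, "L"), (OPEN_RIGHT, None)]
print(consistent_candidates(demo, [0.6, 0.7, 0.85, 0.95]))  # [0.85]
```

In this toy setting, the two observations alone would favor the largest candidate accuracy, whereas the fact that the expert listened exactly twice before opening a door singles out 0.85. A practical method would presumably combine both sources of evidence and use a full POMDP solution for the expert's policy.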
