Analyzing Representations inside Convolutional Neural Networks


Dec 23, 2020

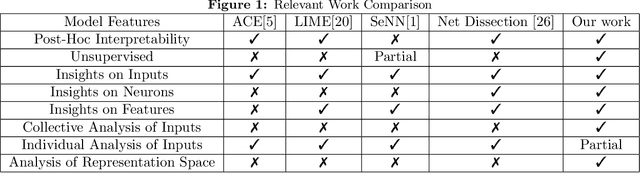

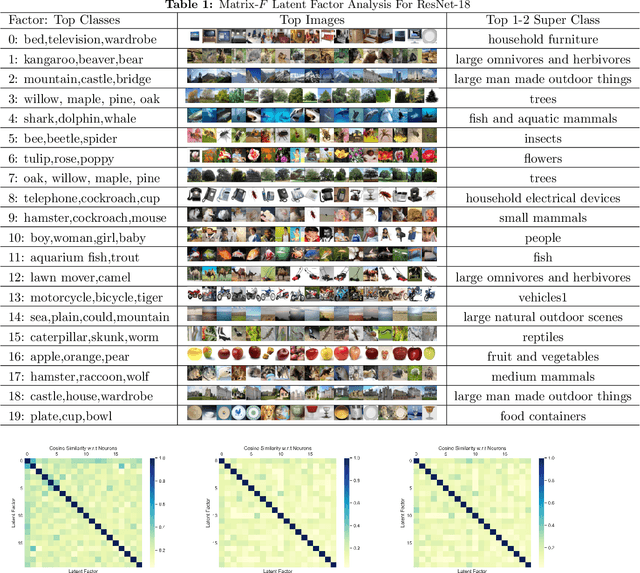

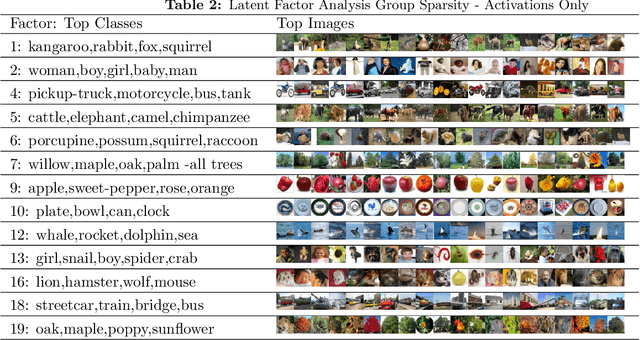

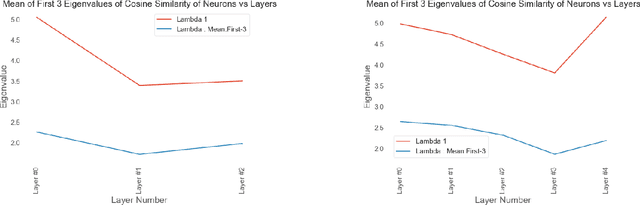

How can we discover and succinctly summarize the concepts that a neural network has learned? This task matters wherever networks are used for classification-based inference, such as medical diagnosis from fMRI or X-ray images. In this work, we propose a framework that categorizes the concepts a network learns by clustering input examples, neurons (according to the examples they activate for), and input features, all in the same latent space. The framework is unsupervised and requires no labels for input features; it needs only access to the network's internal activations for each input example, which makes it widely applicable. We extensively evaluate the proposed method and demonstrate that it produces human-understandable and coherent concepts that a ResNet-18 has learned on the CIFAR-100 dataset.
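To make the idea of a shared latent space more concrete, here is a minimal sketch of placing examples and neurons in one space using only an activation matrix. It is an illustration under assumptions, not the paper's method: the truncated SVD is a stand-in for whatever embedding the authors use, the function name `shared_latent_space` is hypothetical, the activation matrix is a hand-made toy rather than ResNet-18 activations, and input features are left out.

```python
import numpy as np

def shared_latent_space(activations, dim=2):
    """Embed examples (rows) and neurons (columns) in one latent space.

    Assumption: a truncated SVD stands in for the paper's actual
    embedding, which the abstract does not specify.
    """
    A = np.asarray(activations, dtype=float)
    U, S, Vt = np.linalg.svd(A, full_matrices=False)
    scale = np.sqrt(S[:dim])            # split singular values evenly
    example_emb = U[:, :dim] * scale    # one row per input example
    neuron_emb = Vt[:dim].T * scale     # one row per neuron
    return example_emb, neuron_emb

# Toy activations (hypothetical): examples 0-2 drive neurons 0-1,
# examples 3-5 drive neurons 2-3.
toy_activations = np.array([
    [5, 4, 0, 0],
    [4, 5, 0, 0],
    [5, 5, 0, 0],
    [0, 0, 3, 2],
    [0, 0, 2, 3],
    [0, 0, 3, 3],
])

example_emb, neuron_emb = shared_latent_space(toy_activations, dim=2)

# Inner products in the shared space measure example-neuron affinity.
affinity = example_emb @ neuron_emb.T
strongest_neuron = affinity.argmax(axis=1)
```

Because examples and neurons live in the same space, any standard clustering method applied to the stacked embeddings would group each set of examples with the neurons that respond to them. On real data, the rows would be per-example activations read from a layer of the network.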
