An Investigation of Environmental Influence on the Benefits of Adaptation Mechanisms in Evolutionary Swarm Robotics

Paper and Code

Apr 20, 2018

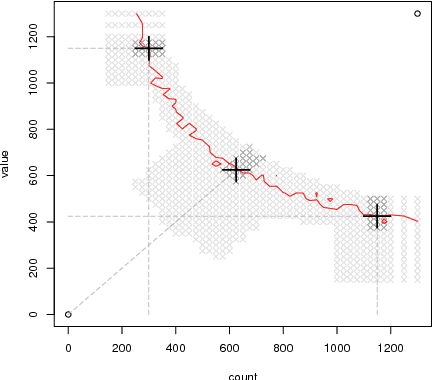

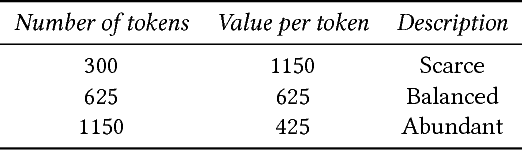

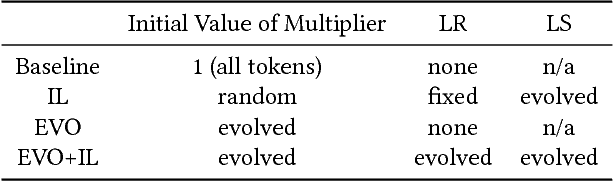

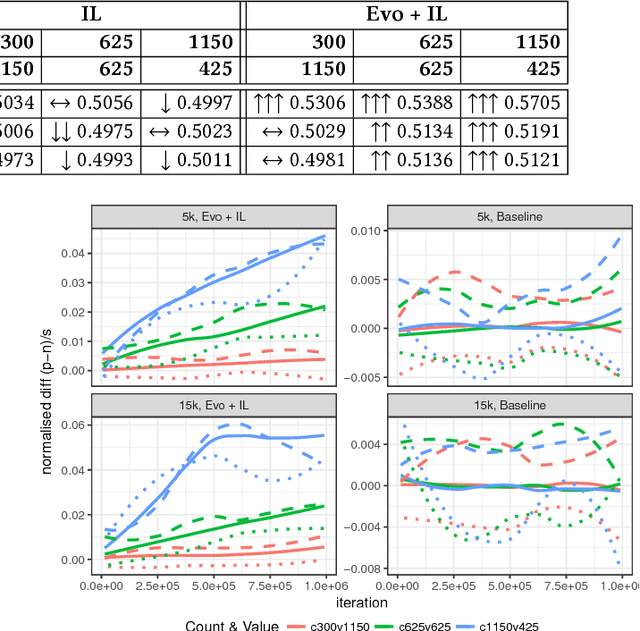

A robotic swarm that must operate for long periods in a potentially unknown environment can adapt through both evolution and individual learning. However, how the environment influences the effectiveness of each type of learning is not well understood. In this paper, we address this question by analysing the performance of a swarm in simulation, where a distributed evolutionary algorithm for evolving a controller is augmented with a number of different individual learning mechanisms. The learning mechanisms themselves are defined by parameters that can be either fixed or inherited. We conduct experiments in a range of dynamic environments whose characteristics are varied so as to present different opportunities for learning. The results enable us to map environmental characteristics to the most effective learning algorithm.
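
To make the fixed-versus-inherited distinction concrete, the following is a minimal toy sketch and not the paper's algorithm. It assumes a single scalar `weight` as a stand-in for a controller, a simple lifetime update rule as a stand-in for an individual learning mechanism, a target that flips every ten generations as a stand-in for a dynamic environment, and a centralised population in place of the distributed evolutionary algorithm. All names (`Genome`, `lifetime_learning`, `evolve`) and all numeric settings are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Genome:
    weight: float         # hypothetical stand-in for controller parameters
    learning_rate: float  # parameter of the individual learning mechanism


def fitness(weight, target):
    # Higher is better; the best possible value is 0 at weight == target.
    return -abs(weight - target)


def lifetime_learning(genome, target, steps=5):
    # Individual learning: nudge the weight toward the target during one lifetime.
    # The learned weight is used for evaluation only and is not written back.
    w = genome.weight
    for _ in range(steps):
        w += genome.learning_rate * (target - w)
    return w


def evolve(generations=30, pop_size=20, inherit_rate=True, fixed_rate=0.1, seed=0):
    rng = random.Random(seed)
    pop = [
        Genome(rng.uniform(-1, 1),
               rng.uniform(0, 0.5) if inherit_rate else fixed_rate)
        for _ in range(pop_size)
    ]
    history = []
    for gen in range(generations):
        # Assumed dynamic environment: the target flips every 10 generations.
        target = 1.0 if (gen // 10) % 2 == 0 else -1.0
        scored = sorted(
            pop,
            key=lambda ind: fitness(lifetime_learning(ind, target), target),
            reverse=True,
        )
        history.append(fitness(lifetime_learning(scored[0], target), target))
        parents = scored[: pop_size // 2]  # truncation selection
        pop = []
        for _ in range(pop_size):
            parent = rng.choice(parents)
            rate = parent.learning_rate
            if inherit_rate:
                # Inherited case: the learning parameter is mutated and passed on.
                rate = min(1.0, max(0.0, rate + rng.gauss(0, 0.05)))
            pop.append(Genome(parent.weight + rng.gauss(0, 0.1), rate))
    return history, pop


if __name__ == "__main__":
    for inherit in (False, True):
        history, _ = evolve(inherit_rate=inherit)
        print("inherited" if inherit else "fixed", round(sum(history) / len(history), 3))
```

With `inherit_rate=False`, every individual keeps the same `fixed_rate`. With `inherit_rate=True`, the learning rate itself is subject to selection and mutation. Varying how often the target flips is one simple way to present different opportunities for learning in this toy setting.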
