An exact counterfactual-example-based approach to tree-ensemble models interpretability

Paper and Code

May 31, 2021

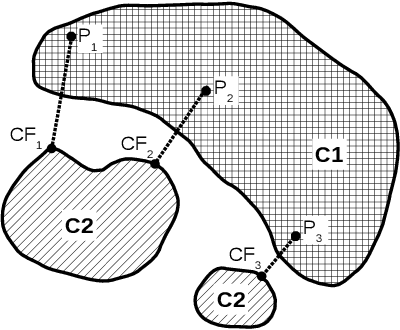

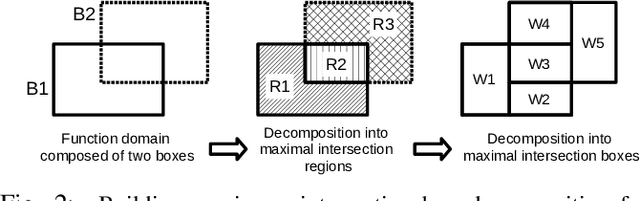

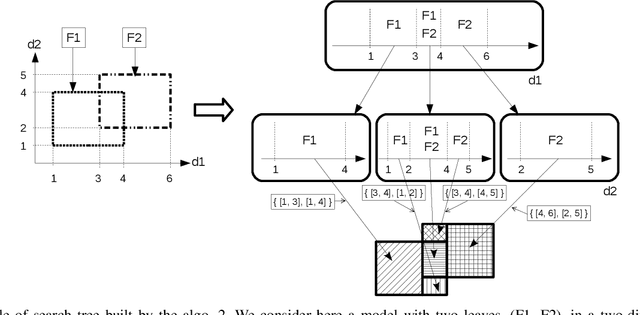

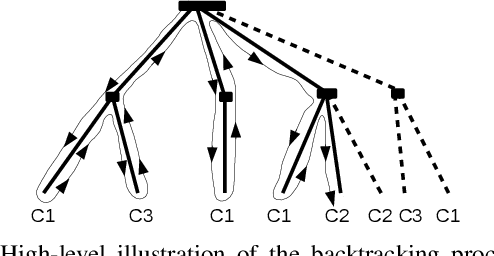

Explaining the decisions of machine learning models is becoming a necessity in many areas where trust in a model's decisions is key to its accreditation and adoption. The ability to explain a decision also makes it possible to provide a diagnosis alongside it, which is highly valuable in scenarios such as fault detection. Unfortunately, high-performance models do not exhibit the transparency needed to make their decisions fully understandable. The black-box approaches used to explain such decisions lack accuracy in tracing back the exact cause of a model decision for a given input. Indeed, they cannot explicitly describe the decision regions of the model around that input, which is necessary to determine what pushes the model towards one decision or the other. We thus asked ourselves: among the high-performance models currently in use, is there a category for which the decision regions in the input feature space can be characterised explicitly and exactly in geometrical terms? Surprisingly, we came up with a positive answer for any model in the category of tree ensemble models, which encompasses a wide range of high-performance models such as XGBoost, LightGBM and random forests. We derive an exact geometrical characterisation of their decision regions in the form of a collection of multidimensional intervals. This characterisation makes it straightforward to compute the optimal counterfactual (CF) example associated with a query point. We demonstrate several possibilities of the approach, such as computing the CF example based only on a subset of features. This yields more plausible explanations by adding prior knowledge about which variables the user can control. An adaptation to CF reasoning on regression problems is also envisaged.
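To make the idea of decision regions as multidimensional intervals more concrete, here is a minimal Python sketch. It is not the paper's algorithm: it uses a brute-force grid built from every split threshold, which is a refinement of the decision regions and grows exponentially with the number of features. The tree encoding, the toy ensemble `TREES`, the helper names, the `free` parameter and the Euclidean distance are all assumptions made for illustration.

```python
import itertools
import math

# Hypothetical encoding: a tree is either a leaf score (float) or a tuple
# (feature_index, threshold, left_subtree, right_subtree);
# points with x[feature_index] <= threshold go left.
TREES = [
    (0, 2.0, -1.0, 1.0),
    (1, 1.0, -0.5, (1, 3.0, 0.5, 2.0)),
]

def tree_score(tree, x):
    """Walk one tree down to its leaf and return the leaf score."""
    while isinstance(tree, tuple):
        feat, thr, left, right = tree
        tree = left if x[feat] <= thr else right
    return tree

def predict(trees, x):
    """Binary decision: class 1 if the summed leaf scores are positive."""
    return int(sum(tree_score(t, x) for t in trees) > 0)

def collect_thresholds(tree, out):
    """Gather every split threshold, grouped by feature index."""
    if isinstance(tree, tuple):
        feat, thr, left, right = tree
        out.setdefault(feat, set()).add(thr)
        collect_thresholds(left, out)
        collect_thresholds(right, out)
    return out

def boxes(trees, n_features):
    """Enumerate grid cells (lo, hi] per feature; the prediction is constant in each."""
    per_feat = {}
    for t in trees:
        collect_thresholds(t, per_feat)
    axes = []
    for f in range(n_features):
        cuts = [-math.inf] + sorted(per_feat.get(f, ())) + [math.inf]
        axes.append(list(zip(cuts[:-1], cuts[1:])))
    return itertools.product(*axes)

def closest_counterfactual(trees, x, free=None, eps=1e-6):
    """Return (point, distance) of the nearest point with the opposite class.

    Only the features listed in `free` may change; the others keep their value.
    """
    free = set(range(len(x))) if free is None else set(free)
    target = 1 - predict(trees, x)
    best, best_dist = None, math.inf
    for box in boxes(trees, len(x)):
        # Project x onto the box by clamping each coordinate into (lo, hi].
        cand = tuple(min(max(v, lo + eps), hi) for v, (lo, hi) in zip(x, box))
        # Skip boxes that would require moving a frozen feature.
        if any(cand[f] != x[f] for f in range(len(x)) if f not in free):
            continue
        if predict(trees, cand) != target:
            continue
        dist = math.dist(x, cand)
        if dist < best_dist:
            best, best_dist = cand, dist
    return best, best_dist

query = (1.0, 0.5)
cf_all, dist_all = closest_counterfactual(TREES, query)
cf_f1, dist_f1 = closest_counterfactual(TREES, query, free={1})
print(cf_all, dist_all)  # moves feature 0 just past the threshold 2.0
print(cf_f1, dist_f1)    # feature 0 frozen: feature 1 moves just past 3.0
```

In this toy setup, restricting the search to feature 1 gives a counterfactual that is farther away but only touches a variable the user is assumed to control, which mirrors the feature-subset use case described above.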
