An Empirical Evaluation of Encoder Architectures for Fast Real-Time Long Conversational Understanding

Feb 18, 2025

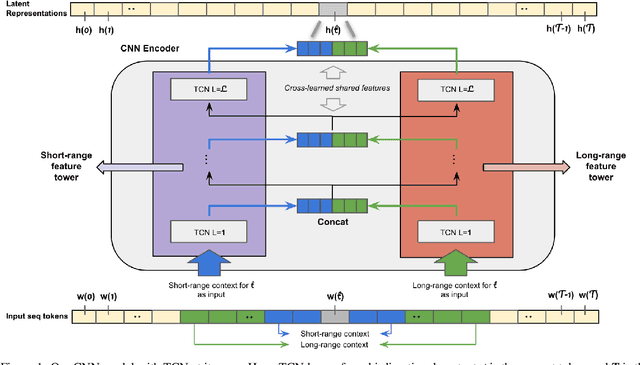

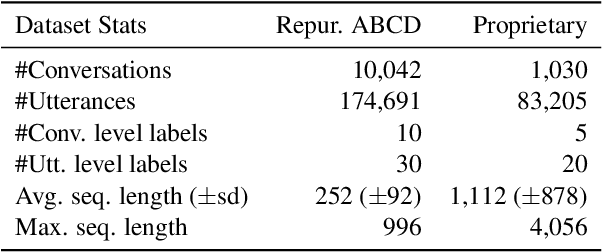

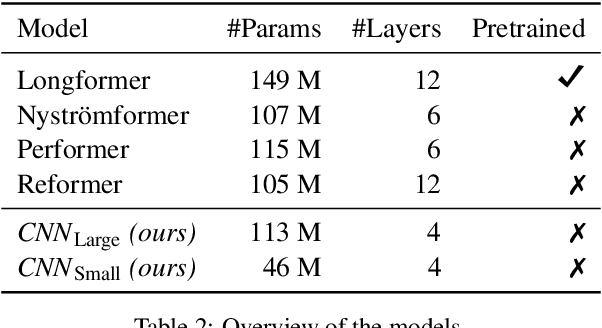

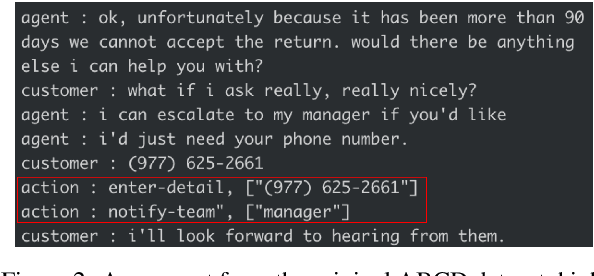

Analyzing long text data such as customer call transcripts is a cost-intensive and tedious task. Machine learning methods, namely Transformers, are leveraged to model agent-customer interactions. Unfortunately, Transformers use fixed-length architectures, and their self-attention mechanism scales quadratically with input length. These limitations make it challenging to apply traditional Transformers to long-sequence tasks such as conversational understanding, especially in real-time use cases. In this paper, we explore and evaluate recently proposed efficient Transformer variants (e.g., Performer, Reformer) and a CNN-based architecture for real-time and near-real-time long conversational understanding tasks. We show that CNN-based models are dynamic, ~2.6x faster to train, ~80% faster at inference, and ~72% more memory efficient than Transformers on average. Additionally, we evaluate the CNN model on the Long Range Arena benchmark to demonstrate its competitiveness in general long document analysis.
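
The quadratic-scaling claim can be made concrete with a rough operation count. The sketch below is illustrative only and is not taken from the paper: the function names and the kernel size of 3 are assumptions, and it counts idealized operations rather than measuring training or inference speed. It compares the number of pairwise scores in one full self-attention head with the number of window positions a 1D convolution visits as sequence length grows.

```python
def attention_score_count(seq_len: int) -> int:
    """Pairwise scores in one full self-attention head: n * n."""
    return seq_len * seq_len


def conv1d_op_count(seq_len: int, kernel_size: int = 3) -> int:
    """Per-channel multiply-accumulates for a 1D convolution
    with 'same' padding: n * k (kernel_size is a hypothetical choice)."""
    return seq_len * kernel_size


if __name__ == "__main__":
    # Doubling the sequence length quadruples the attention count
    # but only doubles the convolution count.
    for n in (512, 1024, 2048, 4096):
        print(n, attention_score_count(n), conv1d_op_count(n))
```

Under this simplified cost model, doubling the input length quadruples the attention term and doubles the convolution term, which is the intuition behind evaluating CNN-based encoders for long transcripts.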
