An Alternative Practice of Tropical Convolution to Traditional Convolutional Neural Networks

Mar 03, 2021

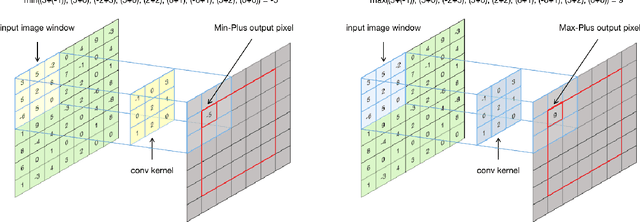

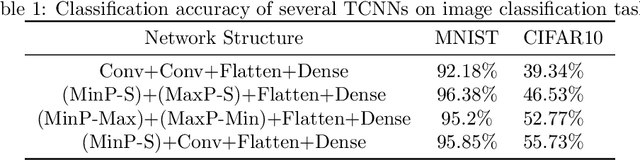

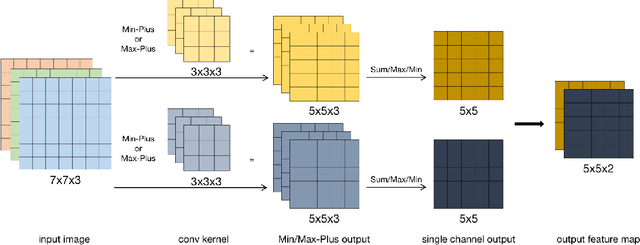

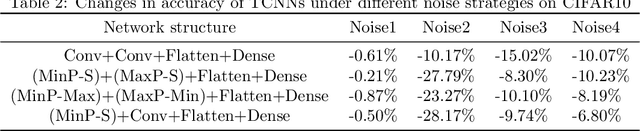

Convolutional neural networks (CNNs) are used in many areas of machine learning. In practice, their computational cost grows quickly as networks deepen and data volumes increase, mostly because convolution requires a large number of floating-point multiplications. To reduce the number of multiplications, we propose a new type of CNN called the Tropical Convolutional Neural Network (TCNN), built on tropical convolutions, in which the multiplications and additions of conventional convolutional layers are replaced by additions and min/max operations, respectively. In addition, since tropical convolution operators are inherently nonlinear, we expect TCNNs to have greater nonlinear fitting ability than conventional CNNs. In our experiments, we test and analyze several TCNN architectures on image classification tasks and compare them with conventional CNNs of similar size. The results show that TCNNs can achieve higher expressive power than networks built on ordinary convolutional layers on the MNIST and CIFAR10 image datasets. Under different noise conditions, neither TCNNs nor ordinary CNNs are consistently more robust: each wins in some settings and loses in others.
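
The replacement of multiply-accumulate by add-and-min/max can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `tropical_conv2d`, the single-channel "valid" window layout, and the `mode` argument are assumptions made for illustration only, and the paper itself evaluates several layer variants that are not reproduced here.

```python
import numpy as np

def tropical_conv2d(x, w, mode="min"):
    """Illustrative single-channel tropical convolution (hypothetical helper).

    A conventional layer computes sum(x_patch * w) for each window.
    Here the product becomes a sum and the sum becomes a min or max:
        out[i, j] = min_or_max over (u, v) of (x[i + u, j + v] + w[u, v])
    No padding or stride is applied ("valid" windows only).
    """
    if mode not in ("min", "max"):
        raise ValueError("mode must be 'min' or 'max'")
    reduce = np.min if mode == "min" else np.max
    kh, kw = w.shape
    out_h = x.shape[0] - kh + 1
    out_w = x.shape[1] - kw + 1
    out = np.empty((out_h, out_w), dtype=np.result_type(x, w))
    for i in range(out_h):
        for j in range(out_w):
            # Additions replace multiplications; min/max replaces the summation.
            out[i, j] = reduce(x[i:i + kh, j:j + kw] + w)
    return out

# Toy input and kernel, chosen only to make the arithmetic easy to follow.
x = np.arange(16, dtype=float).reshape(4, 4)
w = np.array([[0.0, 1.0], [2.0, 3.0]])
y_min = tropical_conv2d(x, w, mode="min")
y_max = tropical_conv2d(x, w, mode="max")
```

With an all-zero kernel this sketch reduces to min- or max-pooling with stride 1, which is one way to see why the operator is nonlinear in its input.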
