An Accelerated Stochastic Algorithm for Solving the Optimal Transport Problem

Mar 22, 2022

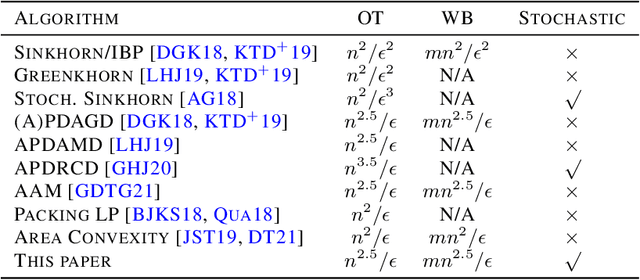

We propose a novel accelerated stochastic algorithm, primal-dual accelerated stochastic gradient descent with variance reduction (PDASGD), for solving the optimal transport (OT) problem between two discrete distributions. PDASGD can also be used to compute the Wasserstein barycenter (WB) of multiple discrete distributions. In both the OT and WB cases, the proposed algorithm attains the best-known convergence rate in the literature, measured as the order of computational complexity. PDASGD is easy to implement, and because it is stochastic, each iteration can be much cheaper than an iteration of its non-stochastic counterparts. Numerical experiments on both synthetic and real data demonstrate the improved efficiency of PDASGD.
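To illustrate why a stochastic iteration can be cheap, the sketch below runs plain stochastic gradient ascent on the semi-dual of entropy-regularized discrete OT. This is not PDASGD: it has no acceleration and no variance reduction, and the entropic semi-dual formulation, the function names, the step-size schedule, and the parameter `eps` are all assumptions chosen for illustration. Each step touches a single row of the cost matrix, so it costs O(m) rather than the O(nm) of a full-gradient step.

```python
import numpy as np

def row_conditional(v, i, C, b, eps):
    """Conditional plan of source point i given dual potential v (softmax over targets)."""
    z = (v - C[i]) / eps + np.log(b)  # assumes every entry of b is strictly positive
    z -= z.max()                      # stabilise the exponentials
    p = np.exp(z)
    return p / p.sum()

def semidual_grad_sample(v, i, C, b, eps):
    """Stochastic gradient of the entropic semi-dual from one sampled row i."""
    return b - row_conditional(v, i, C, b, eps)

def sgd_semidual(C, a, b, eps=0.1, steps=2000, lr=1.0, seed=0):
    """Plain SGD ascent on the semi-dual (illustrative only, not the paper's method)."""
    rng = np.random.default_rng(seed)
    v = np.zeros(len(b))
    for t in range(1, steps + 1):
        i = rng.choice(len(a), p=a)   # sample a source point with probability a_i
        v += (lr / np.sqrt(t)) * semidual_grad_sample(v, i, C, b, eps)
    return v

def recover_plan(v, C, a, b, eps):
    """Transport plan implied by v; its row sums equal a by construction."""
    return np.stack([a[i] * row_conditional(v, i, C, b, eps) for i in range(len(a))])
```

The row marginals of the recovered plan match `a` exactly for any `v`; the column marginals approach `b` only as `v` approaches the semi-dual optimum, which is where a faster method would matter.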
