AlphaDou: High-Performance End-to-End Doudizhu AI Integrating Bidding

Paper and Code

Jul 14, 2024

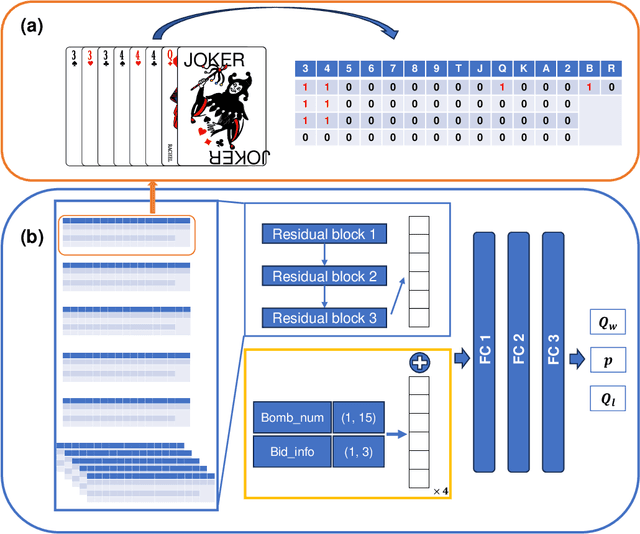

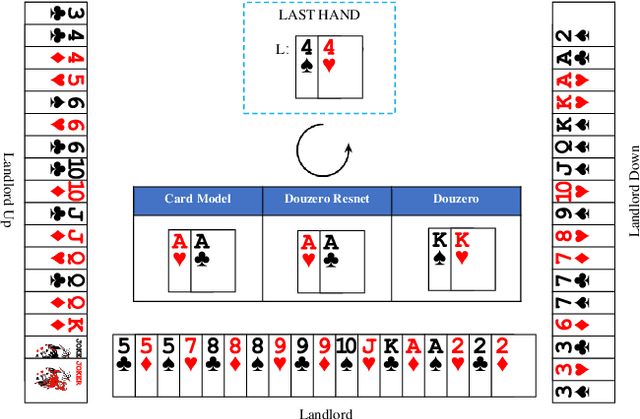

Artificial intelligence for card games has long been a popular topic in AI research. In recent years, complex card games such as Mahjong and Texas Hold'em have been solved, with the corresponding AI programs reaching the level of human experts. Doudizhu, however, remains extremely difficult to solve because of its vast state and action space and because players must reason about both competition and cooperation. The RL model DouZero, trained with the Deep Monte Carlo algorithm framework, has shown excellent performance in Doudizhu. However, its simplified game environment differs from the actual Doudizhu environment, and its performance still falls well short of that of human experts. This paper modifies the Deep Monte Carlo algorithm framework, using reinforcement learning to obtain a neural network that simultaneously estimates win rates and expectations. The action space is pruned using the expectations, and strategies are generated based on the win rates. The resulting RL model is trained in a realistic Doudizhu environment and achieves state-of-the-art performance among publicly available models.
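To make the "prune by expectation, then choose by win rate" idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the paper's implementation: the abstract does not give the pruning rule, so the function name `select_action`, the `margin` threshold, the reading of "expectation" as an expected score, and the example action names and numbers are all hypothetical.

```python
from typing import Dict, Tuple


def select_action(
    predictions: Dict[str, Tuple[float, float]],
    margin: float = 0.5,
) -> str:
    """Pick an action from per-action (win_rate, expectation) estimates.

    Assumption: `predictions` stands in for the two outputs of the network
    described in the abstract. The pruning rule below (keep actions whose
    expectation is within `margin` of the best one) is a hypothetical choice
    made for this sketch.
    """
    if not predictions:
        raise ValueError("no legal actions to choose from")

    # Step 1: prune the action space using the expectation estimates.
    best_expectation = max(exp for _, exp in predictions.values())
    candidates = {
        action: win_rate
        for action, (win_rate, exp) in predictions.items()
        if exp >= best_expectation - margin
    }

    # Step 2: among the surviving actions, choose the highest win rate.
    return max(candidates, key=candidates.get)


# Made-up estimates for four hypothetical legal actions.
example = {
    "pass": (0.40, -1.0),
    "play_pair": (0.55, 0.8),
    "play_bomb": (0.60, 0.1),
    "play_single": (0.52, 1.0),
}

tight = select_action(example, margin=0.5)
loose = select_action(example, margin=2.0)
```

With the tight margin, the action with the highest raw win rate is pruned because its expectation is too low, so the choice falls to the best remaining win rate. With the loose margin nothing is pruned and the highest win rate is chosen directly.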
