All Local Minima are Global for Two-Layer ReLU Neural Networks: The Hidden Convex Optimization Landscape

Paper and Code

Jun 10, 2020

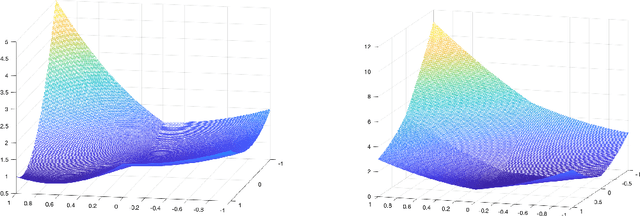

We study two-layer ReLU neural networks from an optimization perspective. We prove that the path-connected sublevel set (the valley) of a neural network that is Clarke stationary with respect to the training loss with weight decay regularization contains a simpler, more structured neural network, which we call its minimal representation. We provide an explicit construction of a continuous path between the neural network and its minimal counterpart. Importantly, we show that characterizing the optimality properties of a neural network reduces to characterizing those of its minimal representation. Thanks to the specific structure of minimal neural networks, we can embed them into a convex optimization landscape. Leveraging convexity, we are able to (i) characterize the minimal size of the hidden layer for which the neural network optimization landscape has no spurious valleys and (ii) provide a polynomial-time algorithm for checking whether a neural network is a global minimum of the training loss. Overall, we provide a rich framework for studying the landscape of the neural network training loss through our embedding into a convex optimization landscape.
