Aligning Artificial Intelligence with Humans through Public Policy

Paper and Code

Jun 25, 2022

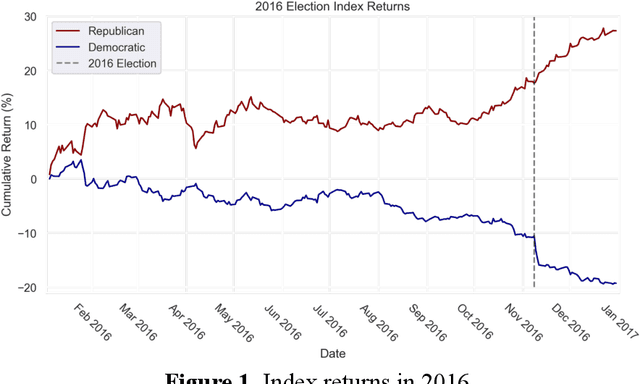

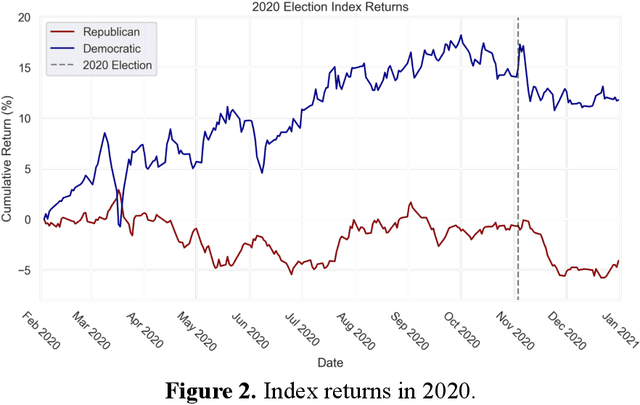

Given that Artificial Intelligence (AI) increasingly permeates our lives, it is critical that we systematically align AI objectives with the goals and values of humans. The human-AI alignment problem stems from the impracticality of explicitly specifying the rewards that AI models should receive for all the actions they could take in all relevant states of the world. One possible solution, then, is to leverage the capabilities of AI models to learn those rewards implicitly from a rich source of data describing human values in a wide range of contexts. The democratic policy-making process produces just such data by developing specific rules, flexible standards, interpretable guidelines, and generalizable precedents that synthesize citizens' preferences over potential actions taken in many states of the world. Therefore, computationally encoding public policies to make them legible to AI systems should be an important part of a socio-technical approach to the broader human-AI alignment puzzle. This Essay outlines research on AI systems that learn structures in policy data that can be leveraged for downstream tasks. As a demonstration of the ability of AI to comprehend policy, we provide a case study of an AI system that predicts the relevance of proposed legislation to any given publicly traded company and its likely effect on that company. We believe this represents the "comprehension" phase of AI and policy, but leveraging policy as a key source of human values to align AI requires "understanding" policy. Solving the alignment problem is crucial to ensuring that AI is beneficial both individually (to the person or group deploying the AI) and socially. As AI systems are given increasing responsibility in high-stakes contexts, integrating democratically determined policy into those systems could align their behavior with human goals in a way that is responsive to a constantly evolving society.
