Algorithms based on operator-averaged operators


Dec 07, 2021

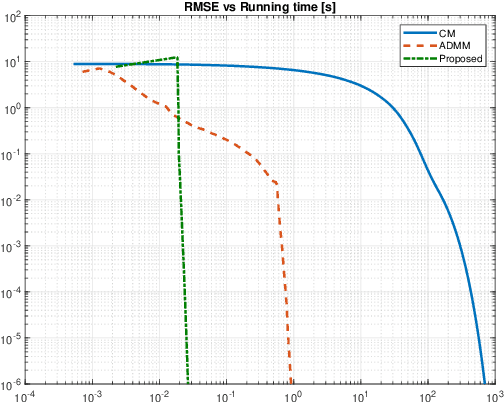

A class of algorithms comprising certain semismooth Newton and active-set methods is able to solve convex minimization problems involving sparsity-inducing regularizers very rapidly; the speed advantage of methods from this class is a consequence of their ability to benefit from the sparsity of the corresponding solutions by solving smaller inner problems than conventional methods do. The convergence properties of such conventional methods (e.g., the forward-backward and proximal-Newton methods) can be studied very elegantly in the framework of iterations of scalar-averaged operators; this is not the case for the aforementioned class. However, we show in this work that by instead considering operator-averaged operators, one can indeed study methods of that class, and also derive algorithms outside of it that may be more convenient to implement than existing ones. Additionally, we present experiments whose results suggest that methods based on operator-averaged operators achieve substantially faster convergence than conventional ones.
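To make the "scalar-averaged" viewpoint concrete, here is a minimal sketch (not taken from the paper) of the conventional forward-backward method applied to the lasso objective 0.5·||Ax − b||² + μ·||x||₁. The operator T(x) = prox_{γμ||·||₁}(x − γ∇f(x)) is averaged for step sizes γ in (0, 2/L), where L is the largest eigenvalue of AᵀA, and the loop runs the relaxed (Krasnosel'skii-Mann) iteration x ← x + λ(T(x) − x) with a single scalar λ in (0, 1]. The function names, the parameters `mu`, `gamma`, `lam`, and the test problem are illustrative assumptions; the operator-averaged iterations studied in the paper are not reproduced here.

```python
import math
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(A: Matrix, x: Vector) -> Vector:
    """Dense product A x."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def rmatvec(A: Matrix, y: Vector) -> Vector:
    """Dense transposed product A^T y."""
    return [sum(A[i][j] * y[i] for i in range(len(A))) for j in range(len(A[0]))]


def soft_threshold(v: Vector, t: float) -> Vector:
    """Prox of t*||.||_1: shrink each entry toward zero by t."""
    return [math.copysign(max(abs(vi) - t, 0.0), vi) for vi in v]


def forward_backward_operator(
    A: Matrix, b: Vector, mu: float, gamma: float
) -> Callable[[Vector], Vector]:
    """Hypothetical helper: T(x) = prox_{gamma*mu*||.||_1}(x - gamma * A^T (A x - b))."""

    def T(x: Vector) -> Vector:
        residual = [ax - bi for ax, bi in zip(matvec(A, x), b)]
        grad = rmatvec(A, residual)  # gradient of 0.5*||A x - b||^2
        forward = [xi - gamma * gi for xi, gi in zip(x, grad)]  # forward (gradient) step
        return soft_threshold(forward, gamma * mu)  # backward (proximal) step

    return T


def relaxed_forward_backward(
    A: Matrix,
    b: Vector,
    mu: float,
    gamma: float,
    lam: float = 1.0,
    iters: int = 1000,
    tol: float = 1e-12,
) -> Vector:
    """Scalar-averaged iteration x <- x + lam*(T(x) - x), starting from x = 0.

    Assumes gamma in (0, 2/L) so that T is averaged, and lam in (0, 1].
    """
    T = forward_backward_operator(A, b, mu, gamma)
    x = [0.0] * len(A[0])
    for _ in range(iters):
        Tx = T(x)
        # The same scalar lam weights every coordinate of the update.
        x_new = [xi + lam * (ti - xi) for xi, ti in zip(x, Tx)]
        if max(abs(u - v) for u, v in zip(x_new, x)) < tol:
            return x_new
        x = x_new
    return x
```

For example, `relaxed_forward_backward([[2.0, 0.0], [0.0, 1.0]], [3.0, 0.5], mu=1.0, gamma=0.25)` should return approximately `[1.25, 0.0]`: here L = 4, so γ must lie in (0, 0.5), and because A is diagonal each coordinate's minimizer is soft_threshold(aᵢbᵢ, μ) / aᵢ². Every coordinate is updated at every iteration, including those that end up at zero; this is the kind of full-size work that the active-set and semismooth Newton methods mentioned above avoid.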
