aiTPR: Attribute Interaction-Tensor Product Representation for Image Caption

Paper and Code

Jan 27, 2020

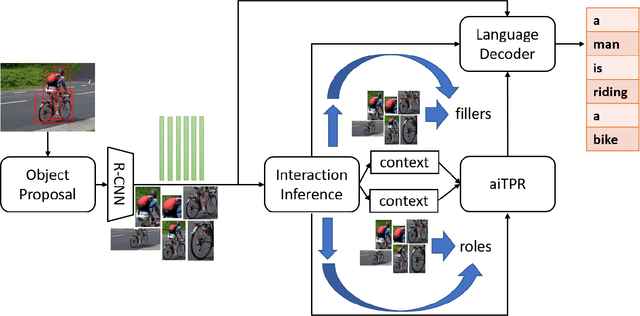

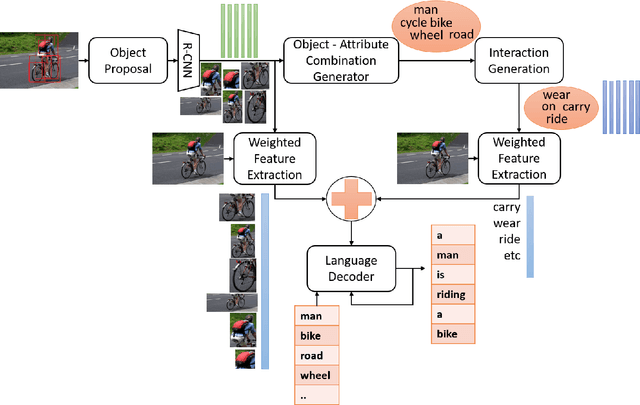

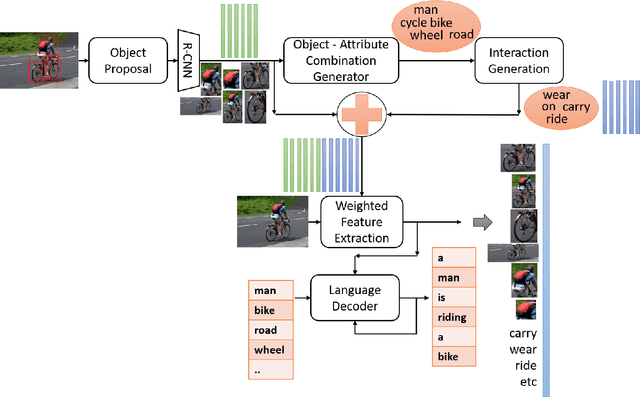

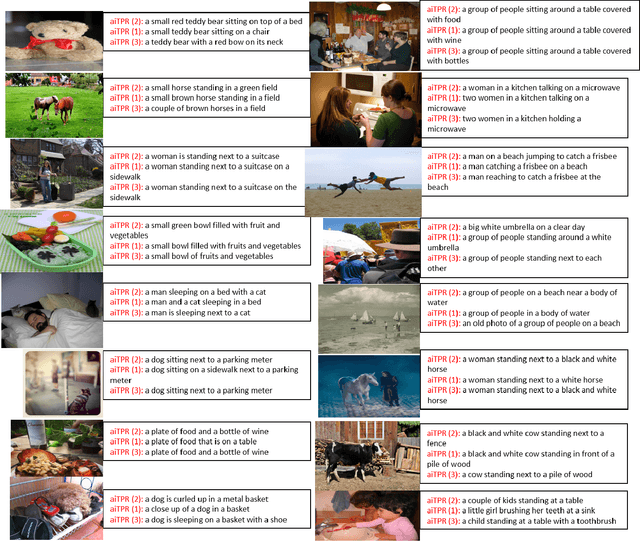

Region-level visual features enhance the generative capability of feature-based captioning models; however, they lack proper attention to interactions between objects and thus end up producing biased or uncorrelated sentences or pieces of misinformation. In this work, we propose Attribute Interaction-Tensor Product Representation (aiTPR), a convenient way of gathering more information through orthogonal combination, learning the interactions as physical entities (tensors), and thereby improving the captions. Compared to previous works, where features are added up into undefined feature spaces, TPR helps keep the combinations well-defined, and orthogonality helps define familiar spaces. We introduce a new concept layer that defines the objects and their interactions, which can play a crucial role in determining different descriptions. The interaction components contribute heavily to better caption quality, and the model outperforms several previous works in this domain on the MSCOCO dataset. We introduce, for the first time, the notion of combining regional image features with an abstracted interaction-likelihood embedding for image captioning.
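To make the "orthogonal combination" idea concrete, here is a minimal sketch of generic tensor product binding: each filler vector is bound to a role vector by an outer product, and the bound pairs are summed. It is not the aiTPR architecture itself. The filler values, the role vectors, and the helper names `bind` and `unbind` are all hypothetical and chosen only for illustration. When the roles are orthonormal, each filler can be recovered from the sum without interference, which is one way to read the contrast with features that are simply added together.

```python
# Illustrative sketch of generic tensor product binding, not the paper's model.
# All vectors and helper names below are hypothetical.

def outer(u, v):
    """Outer product of two vectors, returned as a list of rows."""
    return [[a * b for b in v] for a in u]

def bind(fillers, roles):
    """Build T = sum_i outer(filler_i, role_i)."""
    rows, cols = len(fillers[0]), len(roles[0])
    T = [[0.0] * cols for _ in range(rows)]
    for f, r in zip(fillers, roles):
        P = outer(f, r)
        for i in range(rows):
            for j in range(cols):
                T[i][j] += P[i][j]
    return T

def unbind(T, role):
    """Recover the filler bound to `role` as the matrix-vector product T @ role."""
    return [sum(t * x for t, x in zip(row, role)) for row in T]

# Two hypothetical "attribute" vectors (fillers).
fillers = [[1.0, 2.0, 3.0], [-1.0, 0.5, 4.0]]
# Two orthonormal role vectors (unit length, zero dot product).
roles = [[0.6, 0.8], [-0.8, 0.6]]

T = bind(fillers, roles)
recovered = [unbind(T, r) for r in roles]
```

Up to floating-point rounding, `recovered` matches `fillers`. With non-orthogonal roles the recovered vectors would mix contributions from both fillers.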
