AI's Spatial Intelligence: Evaluating AI's Understanding of Spatial Transformations in PSVT:R and Augmented Reality


Nov 19, 2024

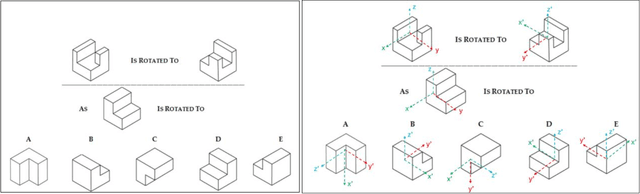

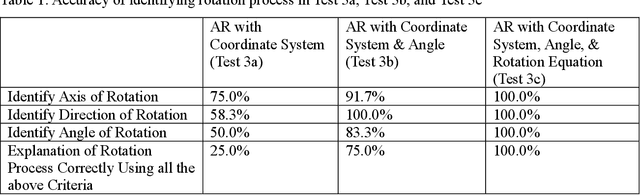

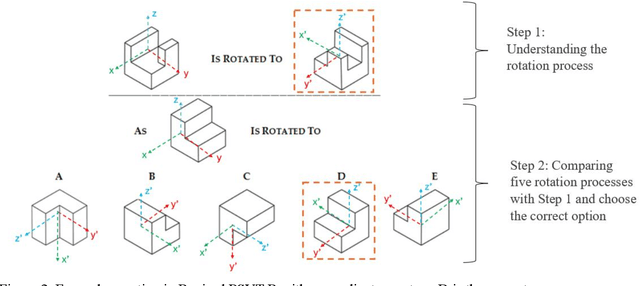

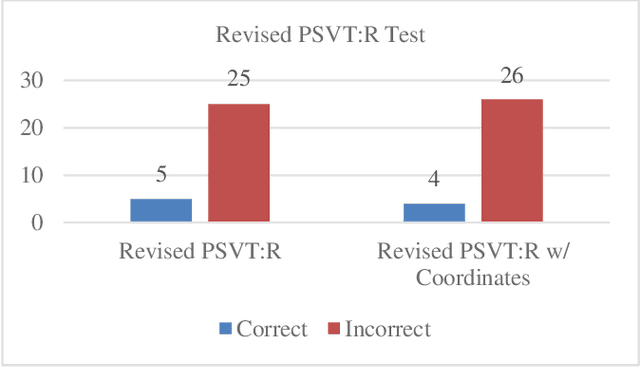

Spatial intelligence is important in Architecture, Construction, Medicine, and Science, Technology, Engineering, and Mathematics (STEM). Three-dimensional (3D) spatial rotations can be conveyed through verbal descriptions and through visual or interactive examples that show how objects change orientation in 3D space. Recent studies show that Artificial Intelligence (AI) models with language and vision capabilities still face limitations in spatial reasoning. In this paper, we study generative AI's ability to understand rotations of objects using its image and language processing features. We examined the spatial intelligence of the GPT-4 model with vision in understanding the spatial rotation process, using diagrams based on the Revised Purdue Spatial Visualization Test: Visualization of Rotations (Revised PSVT:R). Next, we overlaid coordinate system axes on the Revised PSVT:R diagrams to study how GPT-4's performance varies. We also examined GPT-4's understanding of 3D rotations in Augmented Reality (AR) scenes that visualize the spatial rotation of an object in 3D space, and observed that its accuracy increased when we added supplementary textual information describing the rotation process or mathematical representations of the rotation (e.g., matrices). The results indicate that while GPT-4, as a major current generative AI model, lacks an understanding of the spatial rotation process, it has the potential to understand that process when given additional information, which methods such as AR can provide. By combining AI's potential for spatial intelligence with AR's interactive visualization abilities, we expect to offer enhanced guidance for students' spatial learning activities. Such spatial guidance can help learners understand spatial transformations and can additionally support processes such as assembly, fabrication, and manufacturing.
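To make the "mathematical representations of the rotation (e.g., matrices)" mentioned above concrete, the following is a minimal sketch of how a rotation about a coordinate axis could be written as a matrix and turned into a short text description. It is an illustration only, not the authors' prompts or code: the function names `rotation_matrix`, `apply_rotation`, and `describe_rotation`, the right-handed axis convention, and the wording of the description are all assumptions.

```python
import math

def rotation_matrix(axis: str, degrees: float) -> list[list[float]]:
    """Rotation matrix about the x, y, or z axis (right-handed convention, assumed)."""
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    if axis == "x":
        return [[1, 0, 0], [0, c, -s], [0, s, c]]
    if axis == "y":
        return [[c, 0, s], [0, 1, 0], [-s, 0, c]]
    if axis == "z":
        return [[c, -s, 0], [s, c, 0], [0, 0, 1]]
    raise ValueError(f"unknown axis: {axis!r}")

def apply_rotation(matrix, point):
    """Multiply a 3x3 matrix by a 3D point given as a tuple."""
    return tuple(sum(m * p for m, p in zip(row, point)) for row in matrix)

def describe_rotation(axis: str, degrees: float) -> str:
    """Hypothetical text form of a rotation, e.g. as supplementary prompt context."""
    m = rotation_matrix(axis, degrees)
    # round() removes floating-point noise; adding 0.0 turns -0.0 into 0.0
    rows = "; ".join(
        ", ".join(f"{round(v, 3) + 0.0:g}" for v in row) for row in m
    )
    return f"Rotate {degrees:g} degrees about the {axis}-axis. Matrix rows: [{rows}]"

if __name__ == "__main__":
    print(describe_rotation("z", 90))
    print(apply_rotation(rotation_matrix("z", 90), (1, 0, 0)))
```

Under the assumed convention, a 90-degree rotation about the z-axis carries a point on the positive x-axis onto the positive y-axis (up to floating-point error), which is the kind of explicit statement a text or matrix description adds to a diagram.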
