Agentic Mixture-of-Workflows for Multi-Modal Chemical Search

Paper and Code

Feb 26, 2025

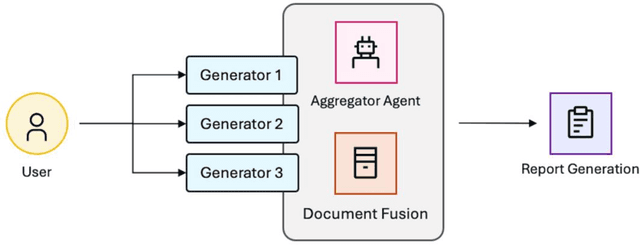

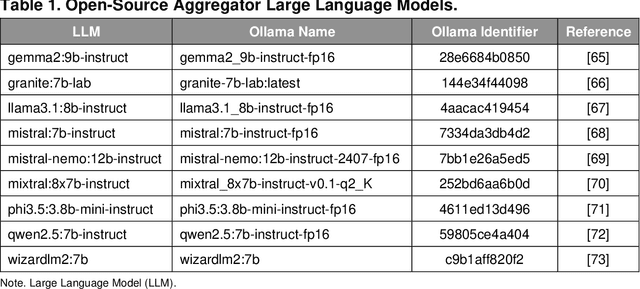

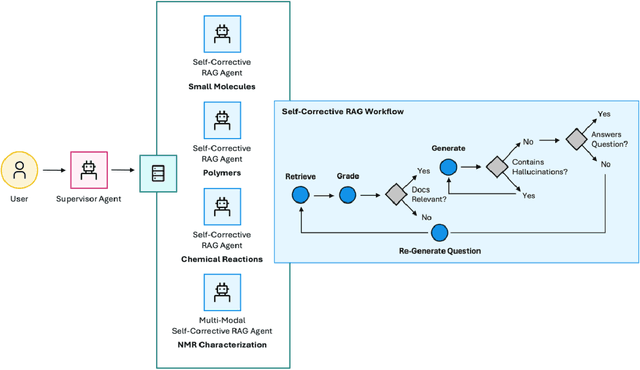

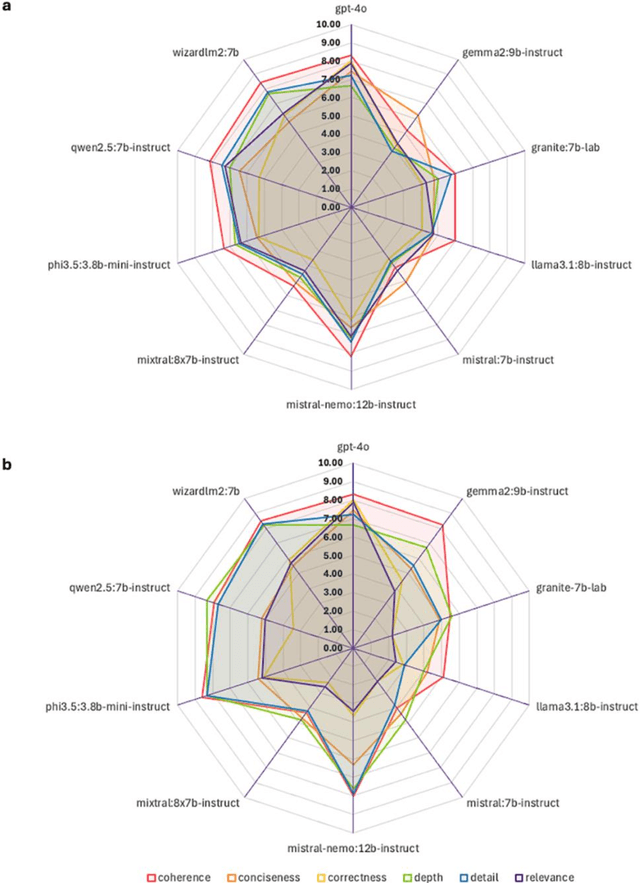

The vast and complex materials design space demands innovative strategies to integrate multidisciplinary scientific knowledge and optimize materials discovery. While large language models (LLMs) have demonstrated promising reasoning and automation capabilities across various domains, their application in materials science remains limited due to a lack of benchmarking standards and practical implementation frameworks. To address these challenges, we introduce Mixture-of-Workflows for Self-Corrective Retrieval-Augmented Generation (CRAG-MoW) - a novel paradigm that orchestrates multiple agentic workflows employing distinct CRAG strategies using open-source LLMs. Unlike prior approaches, CRAG-MoW synthesizes diverse outputs through an orchestration agent, enabling direct evaluation of multiple LLMs across the same problem domain. We benchmark CRAG-MoWs across small molecules, polymers, and chemical reactions, as well as multi-modal nuclear magnetic resonance (NMR) spectral retrieval. Our results demonstrate that CRAG-MoWs achieve performance comparable to GPT-4o while being preferred more frequently in comparative evaluations, highlighting the advantage of structured retrieval and multi-agent synthesis. By revealing performance variations across data types, CRAG-MoW provides a scalable, interpretable, and benchmark-driven approach to optimizing AI architectures for materials discovery. These insights are pivotal in addressing fundamental gaps in benchmarking LLMs and autonomous AI agents for scientific applications.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge