Affordance as general value function: A computational model


Oct 27, 2020

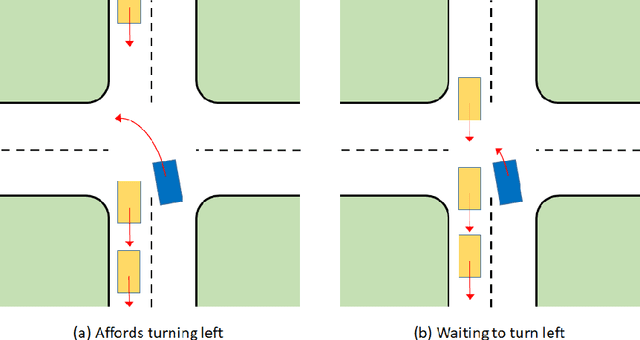

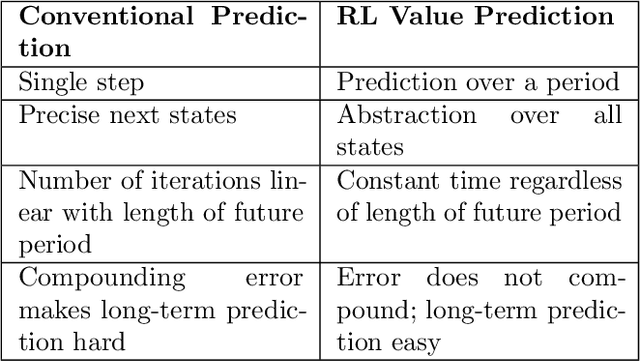

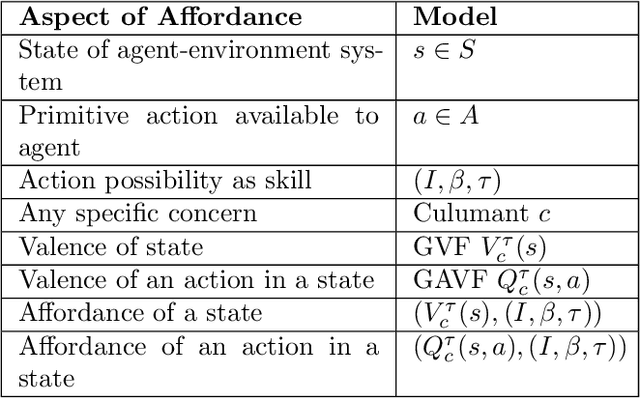

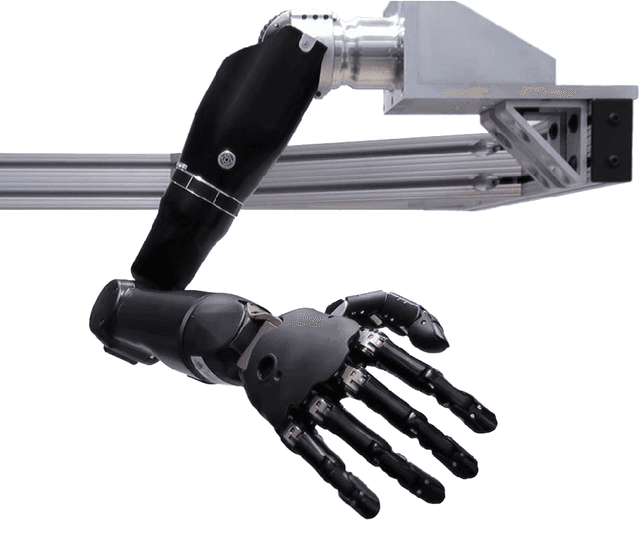

General value functions (GVFs) in the reinforcement learning (RL) literature are long-term predictive summaries of the outcomes of agents following specific policies in the environment. Affordances, as perceived valences of action possibilities, may be cast as predictions of policy-relative goodness and modelled as GVFs. A systematic explication of this connection shows that GVFs, and especially their deep learning embodiments, (1) realize affordance prediction as a form of direct perception, (2) illuminate the fundamental connection between action and perception in affordance, and (3) offer a scalable way to learn affordances using RL methods. Through a comprehensive review of the literature on recent successful applications of GVFs in robotics, rehabilitation, industrial automation, and autonomous driving, we demonstrate that GVFs provide the right framework for learning affordances in real-world applications. In addition, we highlight a few new avenues of research opened up by the perspective of "affordance as GVF", including using GVFs for orchestrating complex behaviors.
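To make the idea of a GVF as a "long-term predictive summary" under a specific policy more concrete, here is a minimal sketch that is not taken from the paper. It assumes a hypothetical six-cell corridor, a fixed policy of "always step right", a cumulant of 1.0 on the step that reaches the far wall, and a tabular TD(0) learner. The function name `learn_gvf` and all parameter values are illustrative assumptions. The learned value for each cell can be read as a crude affordance-like prediction: how imminent reaching the wall is if the agent keeps following this policy.

```python
import numpy as np


def learn_gvf(n_states=6, gamma=0.9, alpha=0.1, episodes=500):
    """Tabular TD(0) estimate of a GVF for the policy 'always step right'.

    Hypothetical setup (an assumption for illustration, not from the paper):
      cumulant     : 1.0 on the step that reaches the right-most cell, else 0.0
      continuation : gamma while moving, 0.0 once the right-most cell is reached
    """
    v = np.zeros(n_states)  # one prediction per corridor cell
    for _ in range(episodes):
        s = 0  # every episode starts at the left-most cell
        while s < n_states - 1:
            s_next = s + 1  # the fixed policy: always step right
            done = s_next == n_states - 1
            cumulant = 1.0 if done else 0.0
            continuation = 0.0 if done else gamma
            # TD(0) update toward cumulant + continuation * next prediction
            td_error = cumulant + continuation * v[s_next] - v[s]
            v[s] += alpha * td_error
            s = s_next
    return v


values = learn_gvf()
print(np.round(values, 3))
```

Under these assumptions the prediction for a cell approaches `gamma` raised to the number of further steps needed after the next one, so cells closer to the wall carry larger values. Swapping in a different cumulant, continuation function, or policy yields a different GVF over the same experience, which is the sense in which such predictions are policy-relative.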
