Adversarial confidence and smoothness regularizations for scalable unsupervised discriminative learning

Jun 04, 2018

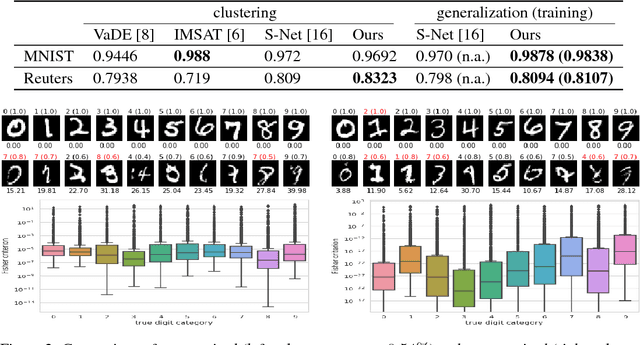

In this paper, we consider a generic probabilistic discriminative learner from the functional viewpoint and argue that, to make it learn well, it is necessary to constrain its hypothesis space to a set of non-trivial piecewise constant functions. To achieve this goal, we present a scalable unsupervised regularization framework. On the theoretical front, we prove that this framework is conducive to a factually confident and smooth discriminative model and connect it to an adversarial Taboo game, spectral clustering and virtual adversarial training. Experimentally, we take deep neural networks as our learners and demonstrate that, when trained under our framework in the unsupervised setting, they not only achieve state-of-the-art clustering results but also generalize well on both synthetic and real data.
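
The abstract does not spell out the regularizers themselves, so the following is only a rough illustration of what "confident", "non-trivial" and "smooth" can mean for a probabilistic classifier trained without labels. It is not the paper's objective. It assumes a common stand-in: per-sample entropy for confidence, negative entropy of the average prediction for non-triviality, and a KL divergence between predictions at an input and at a perturbed copy for smoothness. The names `logits_fn`, `noise_scale` and `unsupervised_penalties` are hypothetical, and the perturbation here is random Gaussian noise rather than the adversarial direction used in virtual adversarial training.

```python
import numpy as np

def softmax(logits):
    # Numerically stable row-wise softmax.
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def entropy(p, eps=1e-12):
    # Shannon entropy along the last axis.
    return -np.sum(p * np.log(p + eps), axis=-1)

def unsupervised_penalties(logits_fn, x, noise_scale=0.1, seed=0, eps=1e-12):
    """Return (confidence, balance, smoothness) penalties; lower is better.

    Illustrative assumption only, not the paper's framework.
    """
    rng = np.random.default_rng(seed)
    p = softmax(logits_fn(x))

    # Confidence: small when each prediction is close to one-hot.
    confidence = entropy(p, eps).mean()

    # Non-triviality: small when the average prediction spreads over classes,
    # which discourages mapping every input to the same class.
    balance = -entropy(p.mean(axis=0), eps)

    # Smoothness: KL(p || q) between predictions at x and at a nearby point.
    # Random noise is used here; an adversarial perturbation would replace it.
    x_pert = x + noise_scale * rng.standard_normal(x.shape)
    q = softmax(logits_fn(x_pert))
    smoothness = np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=1).mean()

    return confidence, balance, smoothness
```

A piecewise constant classifier that uses several classes would drive all three terms down together: its predictions are one-hot, its classes are all occupied, and small perturbations away from decision boundaries leave the output unchanged.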
