Advancing Humor-Focused Sentiment Analysis through Improved Contextualized Embeddings and Model Architecture

Paper and Code

Nov 23, 2020

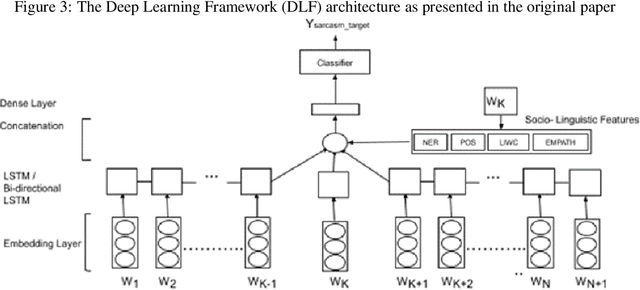

Humor is a natural and fundamental component of human interaction. When applied correctly, humor allows us to express thoughts and feelings conveniently and effectively, increasing interpersonal affection, likeability, and trust. However, understanding the use of humor is computationally challenging for language processing models. As language models become ubiquitous through virtual assistants and IoT devices, the need for humor-aware models grows rapidly. To further improve the state of the art on this particular sentiment-analysis task, we must explore models that incorporate contextualized and nonverbal elements in their design. Ideally, we seek architectures that accept nonverbal elements as additional embedded inputs to the model, alongside the original sentence-embedded input. This survey thus analyses the current state of research on techniques for improved contextualized embeddings that incorporate nonverbal information, as well as newly proposed deep architectures that improve context retention on top of popular word-embedding methods.
