Advances in exact Bayesian structure discovery in Bayesian networks

Jun 27, 2012

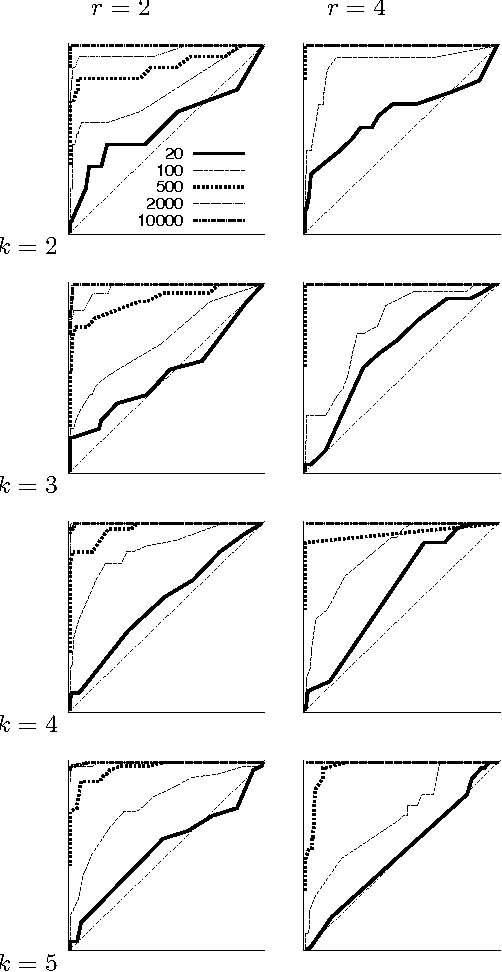

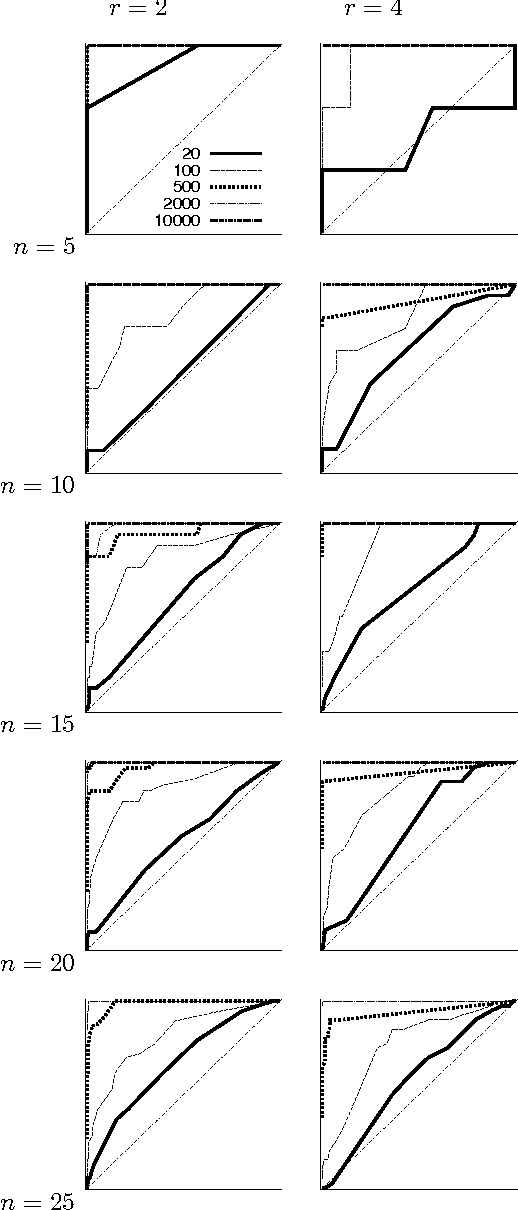

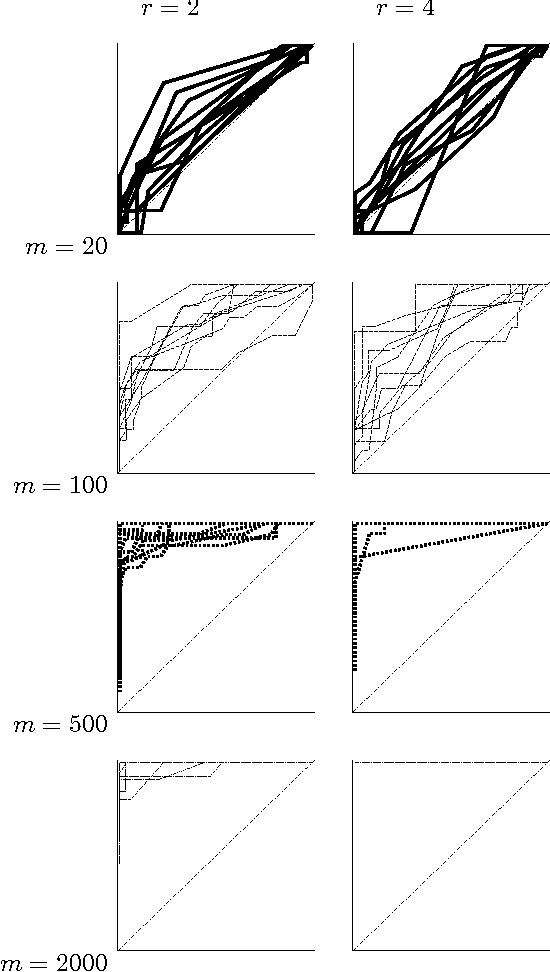

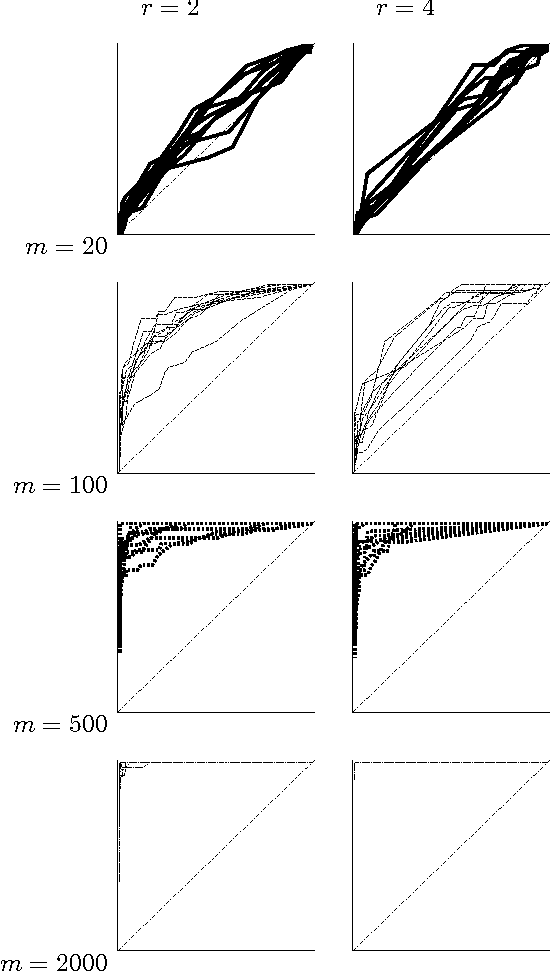

We consider a Bayesian method for learning the Bayesian network structure from complete data. Recently, Koivisto and Sood (2004) presented an algorithm that, for any single edge, computes its marginal posterior probability in O(n 2^n) time, where n is the number of attributes and the number of parents per attribute is bounded by a constant. In this paper we show that the posterior probabilities for all the n (n - 1) potential edges can be computed in O(n 2^n) total time. This result is achieved by a forward-backward technique and fast Möbius transform algorithms, which are of independent interest. The resulting speedup by a factor of about n^2 allows us to experimentally study the statistical power of learning moderate-size networks. We report results from a simulation study that covers data sets with 20 to 10,000 records over 5 to 25 discrete attributes.
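For readers unfamiliar with the fast Möbius transform mentioned in the abstract, the following is a minimal sketch of the standard subset-lattice version, not the paper's own algorithm (which may differ, for example by exploiting the bounded number of parents). It assumes set functions are stored as lists indexed by bitmask over n elements; the function names `zeta_transform` and `moebius_transform` are illustrative. Each transform makes n passes over 2^n entries, which is where an O(n 2^n) bound comes from.

```python
def zeta_transform(f, n):
    """Return g with g[S] = sum of f[T] over all subsets T of S.

    Sets are encoded as bitmasks over n elements, so len(f) == 2**n.
    Runs n passes over 2**n entries: O(n 2^n) additions.
    """
    g = list(f)
    for i in range(n):
        bit = 1 << i
        for mask in range(1 << n):
            if mask & bit:
                # Add the value of the same set with element i removed.
                g[mask] += g[mask ^ bit]
    return g


def moebius_transform(g, n):
    """Invert zeta_transform: recover f from its subset sums g."""
    f = list(g)
    for i in range(n):
        bit = 1 << i
        for mask in range(1 << n):
            if mask & bit:
                # Subtract the contribution of the set without element i.
                f[mask] -= f[mask ^ bit]
    return f


if __name__ == "__main__":
    f = [1, 2, 3, 4]              # values for {}, {0}, {1}, {0, 1}
    g = zeta_transform(f, 2)
    print(g)
    print(moebius_transform(g, 2))
```

Processing one element per pass is what avoids enumerating all subset pairs directly, which would cost on the order of 3^n operations.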
